This article is an installment of Future Explored, a weekly guide to world-changing technology.

Researchers at Stanford have developed a new microchip that can quickly and accurately run a variety of AI applications directly on a device.

“Having those calculations done on the chip instead of sending information to and from the cloud could enable faster, more secure, cheaper, and more scalable AI going into the future, and give more people access to AI power,” said co-senior author H.-S. Philip Wong.

The challenge

Most of today’s advanced AI systems do their processing “in the cloud” — if you ask a text-to-image AI to draw you a picture, for example, the request is sent to a data center for processing, and the result is sent back to your smartphone or computer via the internet.

This might take anywhere from 10 seconds to a few minutes, which is fine because you probably don’t need that image immediately. But what if the AI isn’t drawing pictures but monitoring your heart condition in real time, using data from a fitness wearable?

When you need results in as close to real time as possible, it’s better to have the AI do its processing directly on the device rather than sending data to the cloud — that approach is called “edge computing.”

“The real-time feedback loop required for things like remote monitoring of a patient’s heart and respiratory metrics is only possible with something like edge computing,” Arun Mirchandani, an advisor on healthcare digital transformation, who wasn’t involved in the study, told MIT Technology Review.

“If all that information took several seconds or a minute to get processed somewhere else, it’s useless,” he continued.

Aside from faster results, edge computing has the added benefit of increased privacy: If your health information never leaves your wearable, you don’t have to worry about someone else intercepting it — or interfering with it — en route.

So why do we run these apps in the cloud instead of locally? The problem is that wireless devices have limited processing power and battery life, so running a more advanced, energy-intensive AI program may require turning to huge servers in the cloud.

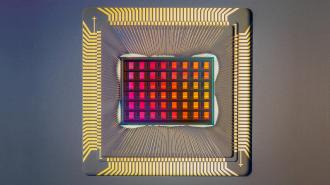

NeuRRAM

A Stanford-led team has now unveiled NeuRRAM, a new microchip that could let us run advanced AI programs directly on our devices.

It works by eliminating one of the biggest energy sucks in microchips designed for AI applications: the transfer of data between the chip’s compute unit (where processing happens) and its memory unit (where data is stored).

“The data movement issue is similar to spending eight hours in commute for a two-hour workday,” said lead researcher Weier Wan. “With our chip, we are showing a technology to tackle this challenge.”

Instead of doing its AI processing in a dedicated unit, NeuRRAM uses an emerging technology called “resistive random-access memory” (RRAM) to do its processing in the memory unit itself.
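The article doesn't spell out how memory can "do processing." A common textbook way to explain resistive in-memory computing is the crossbar array, sketched below in plain Python as an idealized model. It is an assumption-laden illustration, not NeuRRAM's actual circuit: each cell stores a weight as a conductance, row voltages encode the inputs, Ohm's law gives each cell's current, and the column wire adds those currents, so reading the columns yields a matrix-vector product (the core operation of neural networks) right where the weights are stored. The function names `weights_to_conductances` and `crossbar_read` and the 100 µS `g_max` are hypothetical; noise, wire resistance, signed weights, and analog-to-digital conversion are ignored.

```python
# Idealized resistive-crossbar model (hypothetical sketch, not NeuRRAM's circuit).
# Each cell stores a weight as a conductance G[i][j] in siemens. Driving row i
# with voltage V[i] makes the cell pass current V[i] * G[i][j] (Ohm's law), and
# the currents on each column wire add up (Kirchhoff's current law), so the
# column currents form a matrix-vector product computed where the weights sit.
# Ignored here: device noise, wire resistance, signed weights, and the
# analog-to-digital converters a real chip needs.
from typing import List, Tuple

Matrix = List[List[float]]


def weights_to_conductances(weights: Matrix, g_max: float = 100e-6) -> Tuple[Matrix, float]:
    """Linearly map non-negative weights onto conductances in [0, g_max].

    g_max = 100 microsiemens is an illustrative assumption. Returns the
    conductance matrix and the siemens-per-weight-unit scale factor.
    """
    if any(w < 0 for row in weights for w in row):
        raise ValueError("this simple mapping only handles non-negative weights")
    w_max = max(w for row in weights for w in row)
    if w_max == 0:
        raise ValueError("at least one weight must be positive")
    scale = g_max / w_max
    return [[w * scale for w in row] for row in weights], scale


def crossbar_read(conductances: Matrix, voltages: List[float]) -> List[float]:
    """Return the total current (amperes) on each column wire."""
    if len(voltages) != len(conductances):
        raise ValueError("need exactly one input voltage per row")
    currents = [0.0] * len(conductances[0])
    for v, row in zip(voltages, conductances):
        for j, g in enumerate(row):
            # Ohm's law per cell; accumulating models the column wire summing currents.
            currents[j] += v * g
    return currents


if __name__ == "__main__":
    weights = [[1.0, 0.0],
               [2.0, 1.0],
               [0.0, 3.0]]       # 3 inputs x 2 outputs
    inputs = [0.1, 0.2, 0.3]     # input activations encoded as row voltages (V)
    g, scale = weights_to_conductances(weights)
    amps = crossbar_read(g, inputs)
    # Dividing by the scale recovers the ordinary dot products: [0.5, 1.1]
    print([round(a / scale, 6) for a in amps])
```

In this model the weights are never copied to a separate compute unit; only input voltages go in and column currents come out, which is the kind of data movement a compute-in-memory design aims to avoid.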

This type of chip architecture is called “compute-in-memory” (CIM), and it’s been used in the past to make microchips more energy efficient. However, that efficiency has traditionally come at the expense of processing power, versatility, and accuracy.

By innovating on the chip’s design, circuits, and more, the team overcame those limitations: NeuRRAM is twice as energy efficient as the best CIM devices, supports a variety of AI applications, and is as accurate as traditional chips at tasks such as handwritten digit recognition and image classification.

“Efficiency, versatility, and accuracy are all important aspects for broader adoption of the technology,” said Wan. “But to realize them all at once is not simple. Co-optimizing the full stack from hardware to software is the key.”

“Such full-stack co-design is made possible with an international team of researchers with diverse expertise,” added Wong.

Looking ahead

NeuRRAM is currently a proof-of-concept device, and the team now plans to further develop it to handle more complicated AI applications.

According to Wong, if the chips were mass produced, their low cost, small size, versatility, and energy efficiency could make them ideal for medical wearables, giving those devices the ability to run AI applications that are only possible with cloud computing today.

Healthcare is just one of many possible areas of disruption, too — NeuRRAM and other CIM chips have “almost unlimited potential,” according to Stanford’s press release.

One day, these cutting-edge microchips might make VR and AR headsets sleeker, with more capabilities and less latency, or give search-and-rescue drones the ability to analyze footage in real time without draining their batteries as quickly.

They might even be used to help space rovers autonomously explore other planets — no need to wait minutes or hours for instructions to arrive from Earth.

We’d love to hear from you! If you have a comment about this article or if you have a tip for a future Freethink story, please email us at tips@freethink.com.