There are diseases that massively harm your ability to communicate. Amyotrophic lateral sclerosis (ALS) is a debilitating, progressive disorder that can ultimately lead to locked-in syndrome — a patient will still have most of their cognitive abilities, like memory or recognizing faces, but suffer a near-total loss of voluntary motor functions. According to the ALS Association, there are approximately 5,000 new diagnoses of ALS in the US each year.

Available treatments can relieve certain symptoms to some extent, but they tend to become less effective over time. There are even experimental brain-computer interfaces that let people bypass their frozen vocal cords and send brain signals directly to a computer, which decodes them into words or letters. But even at their best, these are a far cry from being able to speak.

As Duke University puts it, “Imagine listening to an audiobook at half-speed. That’s the best speech decoding rate currently available, which clocks in at about 78 words per minute. People, however, speak around 150 words per minute.”
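The "half-speed" comparison follows directly from the two rates in the quote. A quick check, using only those two numbers (the variable names are illustrative):

```python
# Rates quoted by Duke University, in words per minute.
decoded_wpm = 78
natural_wpm = 150

# Fraction of natural speaking speed that the best current decoders reach.
ratio = decoded_wpm / natural_wpm
print(f"Decoding runs at about {ratio:.0%} of natural speaking speed")
```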

A new study by Duraivel et al., published in Nature Communications, offers hope for a far better solution.

High-res and more specific

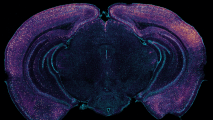

It is estimated that the human brain contains approximately 86 billion neurons. The failure of any single neuron won’t block speech or prevent you from moving your mouth, but each dysfunctional neuron will have its effect. After a while, you reach a point of critical mass – too many neurons aren’t holding up the circuit.

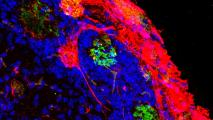

Disorders like ALS involve a complex interplay of neuronal dysfunction and degeneration. Neurons a hair’s width apart can have drastically different roles in coordinating speech, so it’s critical for the sensors measuring brain activity to be very precise. This is why the new study is so impressive. The team managed to double the number of sensors on an electrode array, from 128 to 256. This packs a huge number of sensors onto a device the size of a fingernail. Where sensors on earlier electrodes were spaced from 4 mm to 100 mm apart, the new ones are spaced from 1.33 mm to 1.72 mm apart.
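To get a feel for what the tighter spacing means, here is a rough back-of-the-envelope sketch. It assumes the sensors sit on a uniform square grid, which is a simplification and not a description of the actual electrode layout; the function name `sensors_per_cm2` is made up for illustration. Under that assumption, the number of sensors per unit area grows with the inverse square of the spacing.

```python
def sensors_per_cm2(spacing_mm: float) -> float:
    """Approximate sensor density for a uniform square grid (an assumed layout)."""
    sensors_per_cm = 10.0 / spacing_mm  # 10 mm in one centimetre
    return sensors_per_cm ** 2

# Spacings quoted in the article, in millimetres.
spacings = [
    ("older, tightest", 4.0),
    ("new, widest", 1.72),
    ("new, tightest", 1.33),
]

for label, spacing in spacings:
    density = sensors_per_cm2(spacing)
    print(f"{label:>16}: {spacing} mm apart -> ~{density:.1f} sensors per cm^2")

# How much denser the tightest new spacing is than the tightest older one.
gain = sensors_per_cm2(1.33) / sensors_per_cm2(4.0)
print(f"density gain: ~{gain:.1f}x")
```

Under this square-grid assumption, the new spacing works out to roughly five to nine times as many sensors per unit area as the 4 mm end of the older range.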

All of which is to say the team had much higher spatial resolution and a significantly improved signal-to-noise ratio when capturing the brain’s speech-related neural activity.

From sensors to speaking

Having improved sensors is one thing, but how does that help an ALS patient who cannot articulate a phoneme, let alone a sentence? The idea is that the sensors record the activity of the neurons that signal the intention to make a certain sound, even if the 100+ muscles in the lips, tongue, jaw, and larynx cannot actually produce it. So, before you make a /g/ sound, as in the word “gate,” your brain will activate certain neurons. In a healthy person, those neurons will trigger a domino effect to coordinate speech activity. In ALS, the dominoes don’t fall right.

Interestingly, the study found that for some sounds, like the /g/ sound, the brain decoder was right 84% of the time. But not all phonemes are equal, and some are harder to decode in this way than others. The hardest cases are similar phonemes such as /p/ and /b/. Overall, the device had a 40% success rate in decoding neural activity into sounds. In other words, for about 40% of sounds, we can now work out what someone is trying to say from their brain activity alone.
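The gap between 84% for one sound and 40% overall is easier to see with a toy tally. The sketch below uses invented trial data (not from the study) and a hypothetical helper, `accuracy_report`, to show how per-phoneme accuracy and overall accuracy come out of the same list of guesses.

```python
from collections import defaultdict

def accuracy_report(trials):
    """Return (overall_accuracy, per_phoneme_accuracy) from (intended, decoded) pairs."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for intended, decoded in trials:
        total[intended] += 1
        if intended == decoded:
            correct[intended] += 1
    per_phoneme = {p: correct[p] / total[p] for p in total}
    overall = sum(correct.values()) / sum(total.values())
    return overall, per_phoneme

# Invented example trials: (phoneme the speaker intended, phoneme the decoder guessed).
trials = [
    ("g", "g"), ("g", "g"), ("g", "g"), ("g", "k"),
    ("p", "b"), ("p", "p"), ("p", "b"), ("p", "b"),
    ("b", "p"), ("b", "p"), ("b", "b"), ("b", "p"),
]

overall, per_phoneme = accuracy_report(trials)
print(f"overall: {overall:.0%}")
for phoneme, acc in per_phoneme.items():
    print(f"/{phoneme}/: {acc:.0%}")
```

In this made-up data, /g/ is decoded correctly three times out of four, while /p/ and /b/ are frequently mistaken for each other, which pulls the overall figure down to 5 correct guesses out of 12.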

The road to telepathy?

Forty percent doesn’t sound like a lot. It means that for the majority of sounds, and the vast majority of potential sentences, we still cannot decode what a patient is trying to say from brain signals alone. But it is remarkable when you compare it to what we had before. Earlier brain-to-speech systems could take days to fine-tune and calibrate before they functioned properly. As Duke University put it, “similar brain-to-speech technical feats require hours or days-worth of data to draw from. The speech decoding algorithm Duraivel used, however, was working with only 90 seconds of spoken data from the 15-minute test.”

As is often the case, we are still some way from a science fiction world of telepathic communication. Brain-to-speech systems still suffer from three major problems: they’re expensive, error-prone, and slow. What’s clear, though, is the trajectory. With a $2.4 million grant from the National Institutes of Health and renewed, enthusiastic interest in the technology, we’re one step closer to brain communication than we were last year.

In many ways, this study and this technology were achievable only because of rapid advances in sensor technology. With twice as many sensors, the team could make far more specific measurements. If sensor technology keeps improving exponentially, so will the medical treatments that depend on it.