Researchers at MIT have invented a headset that can hear words you say “in your head.” It isn’t quite mind reading, but it can detect silent “subvocalization,” such as when you very slightly mouth words to yourself while reading. Although these movements make no noise and are undetectable to the naked eye, electrodes on the headset pick up the faint neuromuscular signals in your face, and an algorithm translates them into words. The device, named “AlterEgo,” is meant to internalize human-machine communication, so that it doesn’t disrupt our interactions the way that talking to Siri or pulling out a phone does.

Solving the “Crazy or Bluetooth?” Problem: Remember when you first saw someone talking on a Bluetooth headset? You probably thought they were a little unhinged or talking to themselves. Today, wireless headphones are more common than ever, but we have never quite gotten over that initial awkwardness. The advent of virtual assistants like Google Assistant and Alexa has made talking to our devices even more useful, but actually speaking to our smartphones is still uncomfortable. Voice recognition still struggles in loud places like bars or coffee shops, and, frankly, it still feels weird to be talking to no one in particular, especially in public. But thanks to MIT’s device, someday you may be able to relay questions and commands directly to your device without making a sound.

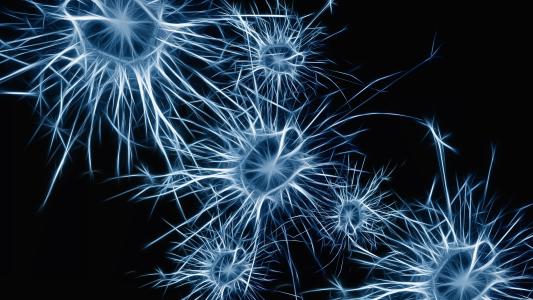

How It Works: The researchers first identified the locations on the face that give the clearest neuromuscular signals for internal vocalizations. They then built a prototype headset that places electrodes on the face, jaw, and mouth, and started collecting data. They had subjects silently vocalize words used in arithmetic and chess, and then used machine learning, including neural networks, to decode the muscle-signal patterns for that limited set of words and tasks. After about an hour and a half of training with a specific user, the translation was about 92% accurate, and the researchers say it should only get better with use.
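For readers who want a feel for the idea, here is a toy sketch of that pipeline in Python: record multi-channel signals, boil each recording down to a few features, and learn which pattern goes with which word. Everything in it is an assumption made for illustration. The signals are synthetic, the vocabulary, channel count, and function names are made up, and a simple nearest-centroid classifier stands in for the neural networks the researchers actually used.

```python
import math
import random

# All of these values are hypothetical, chosen only for the illustration.
VOCAB = ["one", "two", "plus"]   # tiny stand-in vocabulary
N_CHANNELS = 4                   # pretend electrode count
N_SAMPLES = 50                   # samples per recording


def synth_recording(word_idx, rng):
    """Fake a multi-channel recording: each word gets its own amplitude pattern."""
    recording = []
    for ch in range(N_CHANNELS):
        amp = 1.0 + ((word_idx + ch) % len(VOCAB))
        recording.append(
            [amp * math.sin(0.3 * t) + rng.gauss(0, 0.2) for t in range(N_SAMPLES)]
        )
    return recording


def features(recording):
    """Reduce a recording to one number per channel: its RMS amplitude."""
    return [math.sqrt(sum(x * x for x in ch) / len(ch)) for ch in recording]


def train(examples):
    """Average the feature vectors of each word to get one centroid per word."""
    grouped = {}
    for feat, label in examples:
        grouped.setdefault(label, []).append(feat)
    return {
        label: [sum(col) / len(col) for col in zip(*feats)]
        for label, feats in grouped.items()
    }


def predict(centroids, feat):
    """Pick the word whose centroid is closest to the new feature vector."""
    return min(centroids, key=lambda label: math.dist(centroids[label], feat))


def evaluate(seed=0, n_train=20, n_test=10):
    """Train on synthetic recordings, then return accuracy on fresh ones."""
    rng = random.Random(seed)
    train_set = [
        (features(synth_recording(i, rng)), word)
        for i, word in enumerate(VOCAB)
        for _ in range(n_train)
    ]
    centroids = train(train_set)
    test_set = [
        (features(synth_recording(i, rng)), word)
        for i, word in enumerate(VOCAB)
        for _ in range(n_test)
    ]
    correct = sum(predict(centroids, feat) == word for feat, word in test_set)
    return correct / len(test_set)


if __name__ == "__main__":
    print(f"accuracy on synthetic data: {evaluate():.0%}")
```

Because the fake signals are clean and well separated, this toy is far easier than decoding real facial signals, so its score says nothing about the real device. It only shows the shape of the problem: per-user training data goes in, and a mapping from signal patterns to a small set of words comes out.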

Unplugging Your Ears: If you wear headphones in public, you know the drawback of typical headphone designs, which either cover your ears or plug them: they cut you off from the rest of the world. You might not hear a question or a warning, and even casual interactions like checking out or ordering coffee can be awkward. That’s why the developers paired their technology with bone-conduction headphones. These leave your ear canal open to ambient noise and instead deliver sound as vibrations through the bones of the face to the inner ear, letting you “hear” clearly with little audible noise. Combined with the silent-speech recognition system, the researchers say, AlterEgo forms a “complete silent-computing system that lets the user undetectably pose [questions] and receive answers.”

The Point: The lead designer says their goal was to build a more natural, seamless interface between computers and our own minds: “The motivation for this was to build an IA device — an intelligence-augmentation device. Our idea was: Could we have a computing platform that’s more internal, that melds human and machine in some ways and that feels like an internal extension of our own cognition?” A machine-mind-meld might be a long way off, but this radical new interface could fundamentally change how we interact with technology in our daily lives.