Every time I have started a new project over the last 30 years, pretty much the first person I go to for advice is tech guru Kevin Kelly. He is one of the most original thinkers I have ever met in a long career of talking to a lot of remarkable thinkers. I can honestly say that every time I talk with Kevin, I learn something that I had never thought about before.

Kevin (no one who personally knows him ever refers to him as Kelly) was the person who got me to San Francisco in the early days of the digital revolution to work with him and the other founders of WIRED. He was the magazine’s founding executive editor, and his instincts on the next big story made it a must-read global brand in that era and made WIRED Digital a groundbreaking pioneer of the early web. After reading my first attempt as a young journalist to look 25 years into the tech future, he sent a two-sentence email to hire me.

The man’s brain never stops churning and learning, so I keep going back to him as a mentor for insight into almost every iteration of the tech story, and in the last 10 years, many of our conversations have focused on artificial intelligence (AI). Whenever I launched a new event series or public-facing project, Kevin was one of the first guests I hosted to test the concept out.

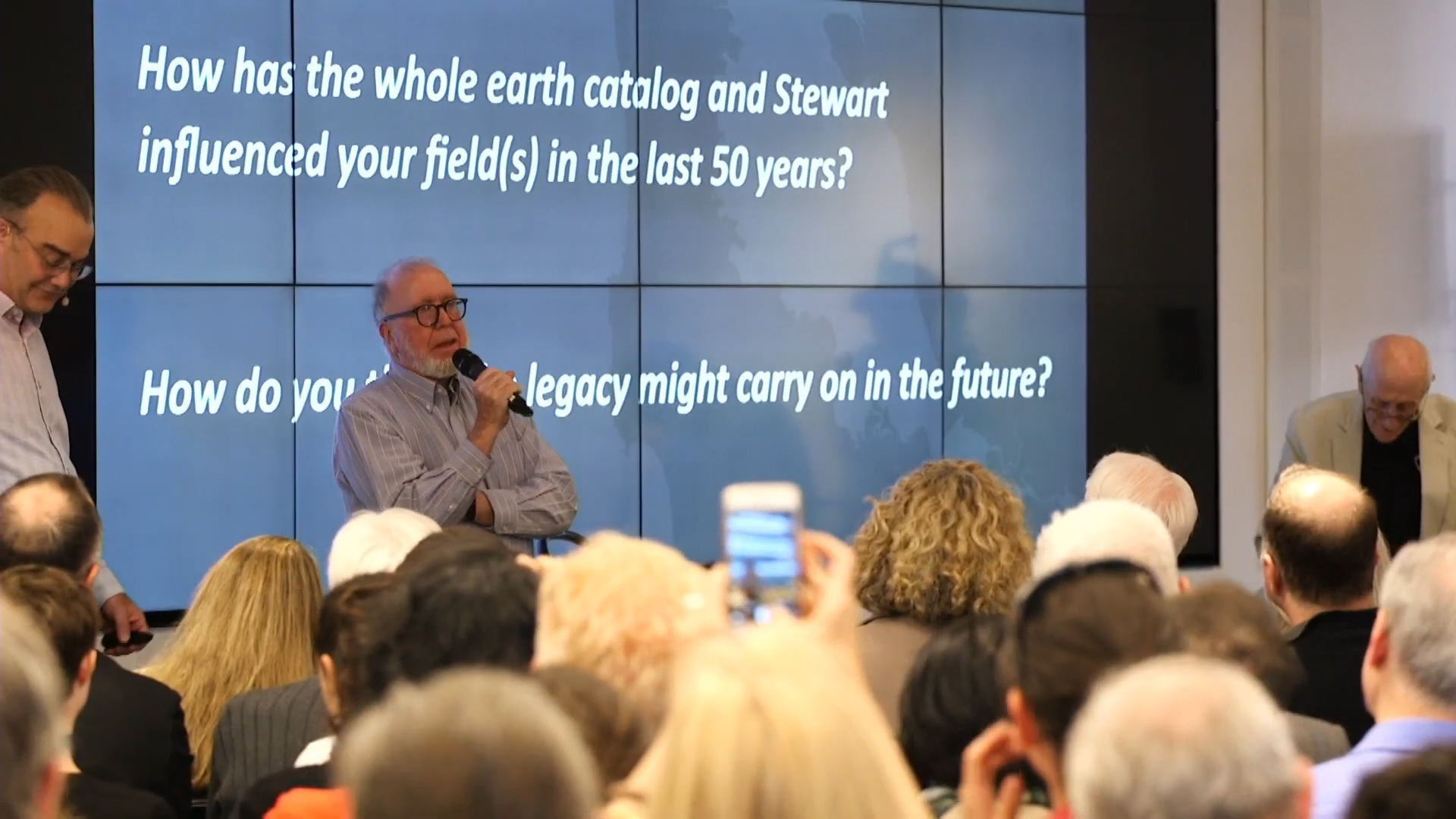

The images and videos in this essay capture Kevin in action. The video above shows him speaking in 2023 at my series The AI Age Begins, which was trying to make sense of the surprise arrival of generative AI. Below you’ll find one from 11 years ago, when Kevin hosted one of the first virtual roundtables for my second company, which pioneered the interactive group video format that we now know from Zoom. The topic? Early AI.

When I kicked off a physical event series called What’s Now San Francisco in 2016, one of the first events provided the launch for Kevin’s book “The Inevitable: Understanding the 12 Technological Forces That Will Shape Our Future,” which is about the next 30 years in tech. Kevin, who never does anything the normal way, wanted to ask the audience questions in addition to answering theirs, so we rented devices to poll the 200 tech innovators in attendance in real time. When I hosted an event to mark the 50th anniversary of The Whole Earth Catalog and honor the legendary Stewart Brand, whom Kevin considers his mentor, as I do, Kevin was the first one to give a tribute.

So, when I launched this new series with Freethink, The Great Progression: 2025 to 2050, I knew my first interview had to be with Kevin Kelly. The idea of this series is to explore how we could harness AI and other transformative technologies that are now ready to scale to reinvent America and create a much better world in the next 25 years. We have the opportunity to do so because these new tools have incredible positive potential. What’s missing at this historic juncture is a new grand narrative about how we could actually pull this off. What’s the rough outline of the new kind of economy and society, if not civilization, that’s now within reach?

We need to clean the proverbial whiteboard, look around at the new technologies now available, take in all our new knowledge about how the world really works, and rough out a positive but plausible new way forward. That’s what this new series is going to attempt to do. We’re not going to create a prediction about what will happen in the years ahead, but more like a grand strategy about what could happen if we really wanted to make it happen.

Kevin is perfect for starting a project like this because he brings a beginner’s mind to everything he does. His boundless intellectual curiosity has given him a keen understanding of a wide range of technologies and fields. He has traveled on the ground across more square footage of the planet than anyone I know. He’s an indefatigable optimist who always tries to see the bright side of the future. I could go on.

Over the course of our two-hour interview, Kevin did not disappoint. He took the assignment seriously and laid out ways we should begin thinking about filling out the playbook for the next 25 years.

What follows are my distillations from the interview. I share what I believe are Kevin’s most relevant insights into this ambitious project, along with extended quotes from him. I have decided to go this route with my monthly interview with a key thought leader rather than make people wade through a two-hour transcript or listen to a two-hour recording. I’ll give you the best insights and Kevin’s best quotes, starting with this one:

I want to begin with talking about not AI, but AIs plural. I want to emphasize that very quickly I believe we will have particular and specific AIs, doing different things, and they will want to be regulated and managed and financed in different ways.

We can talk about the industrial age and industrialization, but you wind up talking about individual machines. There’s not this one machine. There’s not “machining.” There are all kinds of machines, from automobiles to factory assembly lines to appliances. So you can talk about industrialization in the broad terms, but you’re very quickly going to want to talk about specific and different machines doing different things and having different impacts.

I think we have to think about AI in the same way. We can talk about the AI-ization or what I call the “cognification” of things, but just keep in mind that it’s not a uniform singular, monolithic force.

Taking aim at a positive but plausible scenario we want to be real by 2050

Kevin then started talking about the world of scenarios, a world that I know well, too. I worked closely with the aforementioned Stewart Brand for four years at a strategic foresight company he cofounded called Global Business Network (GBN), which was one of the premier scenario-planning firms in the world 25 years ago.

Stewart had cultivated a network of what we called “remarkable people” who were drawn into scenario projects for clients. Those clients ranged from top corporations attempting to figure out the early days of globalization, to government agencies like DARPA looking decades out, to Steven Spielberg trying to understand what Washington, DC, would look like in 2050 for the movie Minority Report. Kevin was one of those remarkable people and was involved in many scenario projects.

The classic scenario project starts from the position that the future is highly uncertain and that you need to understand a range of at least four possible scenarios. You then develop robust strategies that could adapt to all of them. There is a subset of projects that focus on creating a positive scenario that is plausible but also desirable — you can then plan your strategy to help bring that outcome about.

Kevin believes that AI is actually going to increase uncertainty in the coming years (the main point of his talk in the video in this article’s introduction), and he recently spent some time looking at the implications of two critical uncertainties:

- Will AI become centralized in the hands of a few or be radically decentralized with lots of little AIs controlled by myriad players?

- Will AI be used to mostly augment human workers or mostly work independently to potentially replace workers?

The interplay of just those two critical uncertainties could produce very different worlds.

The positive scenario Kevin clearly wants to see is the one in which AI is decentralized and used to augment humans. So, given that a goal of The Great Progression: 2025 to 2050 is to consider how we could bring about a desirable world, he wanted to focus on thinking through that scenario:

That decentralized world I think is great for robots. I think robots proliferate in this vision because they can contain enough various adaptability and intelligence to work and don’t need to be tethered, so to speak, to a big company.

The other thing about this version is that you could have small data AIs — AIs that don’t require 7 billion parameters. The monopoly of the big companies who own all the data becomes less important because you have a lot more startups. You have a lot more people who can make a little AI doing something.

And I think that culturally it’s great, because it’s more like an internet culture. It’s permissionless. It’s good for solo entrepreneurs.

Kevin then did what I previously mentioned he does in every conversation I have with him. He told me something I had never thought about:

I think we’re going to very quickly program in emotions for robots. For some people this will be the scariest thing, beyond even their intelligence. It’ll be the fact that robots will be highly emotional and people will have attachments to them.

Like anything else, I think in some cases robots with emotions will be really good. It’s good in the sense that emotions are one of the best human interfaces. If you want to interface with us humans, we respond to emotions, and so having an emotional component in robots is a very smart, powerful way to help us work with them.

And there are other things like pain. The machines, the robots, will feel pain. We’ll program them to feel pain because pain is a very useful thing. If you injure a part of your machine, the pain signal will prevent your robot from injuring itself further, from damaging it further, and so that’s a very utilitarian thing. That’s why we humans have pain.

How much tech-driven change can actually happen in 25 years?

The 25-year timeframe that defines The Great Progression: 2025 to 2050 seems long enough to change the world in fundamental ways, but the impact of new technologies over time is tricky to project. As the saying goes, never confuse a clear view for a short distance.

Case in point is the video that starts this section, which my team and I made 11 years ago. It is a 10-minute edited recap of a 90-minute virtual roundtable on the future of AI that I hosted and that Kevin anchored with a half-dozen experts. The video is a fascinating look at the conversation in the very early days of the shift toward what we now know as neural networks and large language models (LLMs).

The nascent AI that we talked about in that conversation has come a long way in 11 years, but it still has to scale immensely before it can change the world in fundamental ways.

Kevin said he does not believe in The Singularity, the idea that AI will, at some point soon, perhaps in the 2030s, become more intelligent than humans and will then be able to act independently and program itself to become exponentially smarter. Proponents of The Singularity, such as Ray Kurzweil, argue that we can’t fathom the rate of change or possible outcomes beyond that point because all that will be beyond our control.

Kevin argues almost the opposite, which was somewhat surprising to me. He thinks the transition to a world fully empowered by AI will take much longer than most techno-optimists, including me, predict:

I think there is an AI frontier where we run very fast with invention and innovation, but that the adoption, the penetration of AI, is actually going to be much slower than the frontier.

I think it is just going to take longer than people think to absorb the changes, partly because of the uncertainty factor of what comes next, but also because it is such a big thing that you have to rearrange the other things we have in our lives in order to use it, to maximize it, to exploit it. I just think that it’s going to take quite a bit longer for it to penetrate to the point where it’s permanent.

What I’m saying is that the frontier models, the actual innovation is there, but I think the osmosis of absorbing all that and implementing it is so big, it requires reorganizing a lot related to the work process, the workflow, the way in which we work.

Kevin gave the example of driverless vehicles to illustrate his point. We do have driverless Waymo taxis already operating 24/7 in San Francisco and Phoenix, and they are now expanding to LA and Austin. But transitioning the bulk of transportation into this mode will require a fundamental reworking of many parts of the legacy system, including infrastructure like traffic lights and parking spaces. Could that be done within 25 years? Will it be done?

He speculated that long-haul trucking on the interstate highway system would probably be automated by then, but that human drivers would still be needed to finesse the 5% of driving in cities that might be most complicated. Rather than one human per truck, though, he thinks it’ll be more like one human per truck caravan.

Legacy systems and legacy organizations of any kind are going to have difficulty evolving into the AI age, according to Kevin. Big global corporations may have the resources to throw at the AI transition, but that might not be enough to overcome internal obstacles:

Big established corporations are going to have great difficulty adjusting to AI, and the change may come just by the advent of new companies being organized around AI because it’s so hard to change big old companies — there’s just so much inertia.

So it may take a generation, at least a decade or more, for a new crop of companies that are AI-first to pioneer the new structure and proliferate.

These new AI-first startups might grow into the next generation of dominant tech players, superseding the current tech titans, like Google and Amazon, which themselves superseded the previous generation of IBM and the like. In the process, they’ll pioneer new kinds of jobs:

I’m very confident that we will create millions of new jobs that don’t exist right now, that are facilitated and enabled by having this AI power. It’s my lack of imagination that I just can’t rattle them off, but there will be jobs that will use these AIs, these thinking machines, in some capacity to do new work.

What are some of the other new jobs that an AI would enable? It’s super clear to me that working with AI is a skill, and there’s no doubt that AI whisperers and people who are really good at it will be the equivalent of a programmer today.

Someone who’s really, really good at talking to AIs and can get them to do things that ordinary people can’t get them to do will be valuable. Already that’s happening. Already we see some people who can get these AIs to draw pictures or make images that I have no idea how they’re doing that.

So far, everybody that I know using AI seems to think it works best in partnership with people. AI image generators are very creative, they’re very detail-oriented, and they can be surprising, but the AIs don’t have a good sense of what it is that we humans actually are looking for — and that’s the human side of it.

So, will that go away? I don’t know, but all I can say is so far there isn’t any evidence of that.

Kevin applied roughly the same analysis to the legacy system of education that he did to other legacy systems: it will struggle to adapt to AI. That said, he thinks education in the broadest sense, meaning learning in general, is poised to be one of the first fields to be transformed within the 25-year timeframe:

I contend that most students today are learning more from YouTube than from school. It’s pretty easy to imagine better online learning tools in YouTube with AI, so we could see a shift where more and more learning happens outside the formal process.

Can the formal process, the formal educational institutions, adapt fast enough to AI? I don’t know. They haven’t adapted very fast in the past. But what I’m suggesting is that this doesn’t matter because the learning’s going to happen outside of the school.

All these AIs are knowledge-based. They’re know-it-alls. They’ve read everything. They know everything, so there may be a diminished need for you or me to know as much as we needed to learn in school. But there’s some amount of learning that people will need to start with in order to go learn something else and then innovate. But what is that minimum? Maybe we’ll know in 25 years.

The rule of thumb about what technologies beyond AI to focus on

The image above was taken at the previously mentioned 2016 event where Kevin polled a gathering of 200 tech innovators about what they expected to see in the future. The chart is their response to the question of when they expect AI to be able to pass the bar exam for lawyers. The majority said by 2027, and we now know that the current crop of generative AIs could do it by 2024 — three years ahead of that prediction.

So, what else in the world of tech should we be looking at today, given its potential to change the world for the better in the next 25 years? Quantum computing? Fusion energy? What other wonders could AI help bring about?

“My hypothesis is that if we’re looking out 25 years, then if something is not being demonstrated in the lab today, it’s probably not going to be widespread in 25 years,” Kevin told me. “Maybe AI can accelerate this process a little bit, but not by much.”

Kevin was bullish on bioengineering and how our mastery of biology could make a big difference in the next 25 years. Though he is not an expert in this field, he did attend the exclusive “Spirit of Asilomar and the Future of Biotechnology” summit in February 2025, which marked the 50th anniversary of the original 1975 Asilomar Conference on Recombinant DNA:

They were talking about pan-virus vaccines — vaccines that would work on any virus, like colds. And I thought it was kind of science fiction, but they were pretty serious that pan-virus vaccines were plausible and feasible in about 25 years.

They talked about vaccines for everything. The mRNA vaccines, they were saying, were really revolutionary and very powerful, and we could start making vaccines for everything. A vaccine for cancer seemed totally plausible for them.

Kevin also took the clean-energy revolution as a given, since we’ll need much, much more electricity to power all the AI and electric transport heading our way. In addition to the adoption of next-generation small nuclear reactors, we’ll see renewable energy technologies really start to scale. These are so far along in development that all that’s left to do is build as much as we can as quickly as we can:

The big story is that solar becomes so cheap that you use it for fencing. You put it vertical. You put it on any flat surface. The idea that everything to do with energy is being locally generated and managed is profound. And that’s especially profound for the developing world because now you will have much more reliable, constant, cheap energy.

We ended the conversation where we started it, talking about the idea of a decentralized world of abundant cheap energy powering a proliferation of decentralized AI. That’s a world that Kevin would like to live in, as would I. That’s a world that we can at least consider shooting for right from the start.
