Late Thursday night, Elon Musk filed a lawsuit against OpenAI, claiming the company and its leadership breached the firm’s “founding agreement” that stipulated OpenAI would develop artificial general intelligence for the benefit of all humanity. The lawsuit, first reported by Courthouse News, alleges that OpenAI’s for-profit model, partnership with Microsoft, and the March 2023 release of the GPT-4 model “set the founding agreement aflame” because the GPT-4 model has already reached the threshold for artificial general intelligence (AGI).

Musk, along with Sam Altman, co-founded OpenAI in 2015, partly as a response to Google’s acquisition of AI research lab DeepMind. Musk’s lawsuit now alleges that OpenAI “breached a contract” it made with him at the time of the company’s founding, citing a 2015 email to Musk from Altman as evidence. “To the extent Musk is claiming that the single e-mail in Exhibit 2 is the ‘contract,’ he will fall well short,” Boston College law professor Brian Quinn told Reuters.

Musk stepped down from OpenAI’s board in 2018 after failing to convince Altman and other founders to let him take control of the organization. This represents another challenge for the lawsuit, Quinn told the New York Times: “If he were a member of the board of directors, I would say, ‘Ooh, strong case.’ If this was filed by the Delaware secretary of state, I would say, ‘Ooh they’re in trouble.’ But he doesn’t have standing. He doesn’t have a case.”

Since leaving OpenAI, Musk has been a vocal critic of the company’s dealings with Microsoft, publicly chiding the company for shifting away from its original framework as an open-source nonprofit organization to a company pursuing an aggressive for-profit model.

The lawsuit takes aim at the company’s partnership with Microsoft. It seeks to force OpenAI to follow its “longstanding practice of making AI research and technology developed at OpenAI available to the public,” by which it means open-sourcing the company’s software and models. This part of the lawsuit runs up against the terms of the deal itself: OpenAI’s partnership with Microsoft covers only the company’s pre-AGI technology.

The suit alleges that OpenAI’s new board, which was instituted last year after the previous board fired (then rehired) CEO Sam Altman, lacks “substantial AI expertise and, on information and belief, are ill-equipped by design to make an independent determination of whether and when OpenAI has attained AGI—and hence when it has developed an algorithm that is outside the scope of Microsoft’s license.”

Even if that’s true, Musk’s further claim that GPT-4 has already reached the level of AGI is a contentious one, to say the least. OpenAI’s charter defines AGI as “highly autonomous systems that outperform humans at most economically valuable work.” It’s a definition that leaves much room for interpretation. In his lawsuit, Musk points to research from OpenAI’s GPT-4 Technical Report that shows GPT-4 outperforms humans on a wide array of tasks, including scoring in the 90th percentile on the Uniform Bar Exam and in the 99th percentile on the GRE Verbal Assessment. However, performing well on structured exams is not, in itself, an indication of AGI, and a model that performs well on some exams while performing poorly on others falls short of OpenAI’s own definition.

The lawsuit also cites a paper by Microsoft researchers, called “Sparks of Artificial General Intelligence,” that claimed, “Given the breadth and depth of GPT-4’s capabilities, we believe that it could reasonably be viewed as an early (yet still incomplete) version of an artificial general intelligence (AGI) system.”

When the “Sparks” paper was released, computer scientist Gary Marcus criticized the notion that GPT-4 attained a form of general intelligence. “The paper’s core claim … literally cannot be tested with serious scrutiny, because the scientific community has no access to the training data.”

Computer scientist Michael Wooldridge, author of A Brief History of Artificial Intelligence, isn’t even sure if LLMs constitute “true AI,” let alone true AGI. In an article published on Freethink’s sister publication Big Think, Wooldridge argues:

“…it seems unlikely to me that LLM technology alone will provide a route to ‘true AI.’ LLMs are rather strange, disembodied entities. They don’t exist in our world in any real sense and aren’t aware of it. If you leave an LLM mid-conversation, and go on holiday for a week, it won’t wonder where you are. It isn’t aware of the passing of time or indeed aware of anything at all. It’s a computer program that is literally not doing anything until you type a prompt, and then simply computing a response to that prompt, at which point it again goes back to not doing anything. Their encyclopedic knowledge of the world, such as it is, is frozen at the point they were trained. They don’t know of anything after that.”

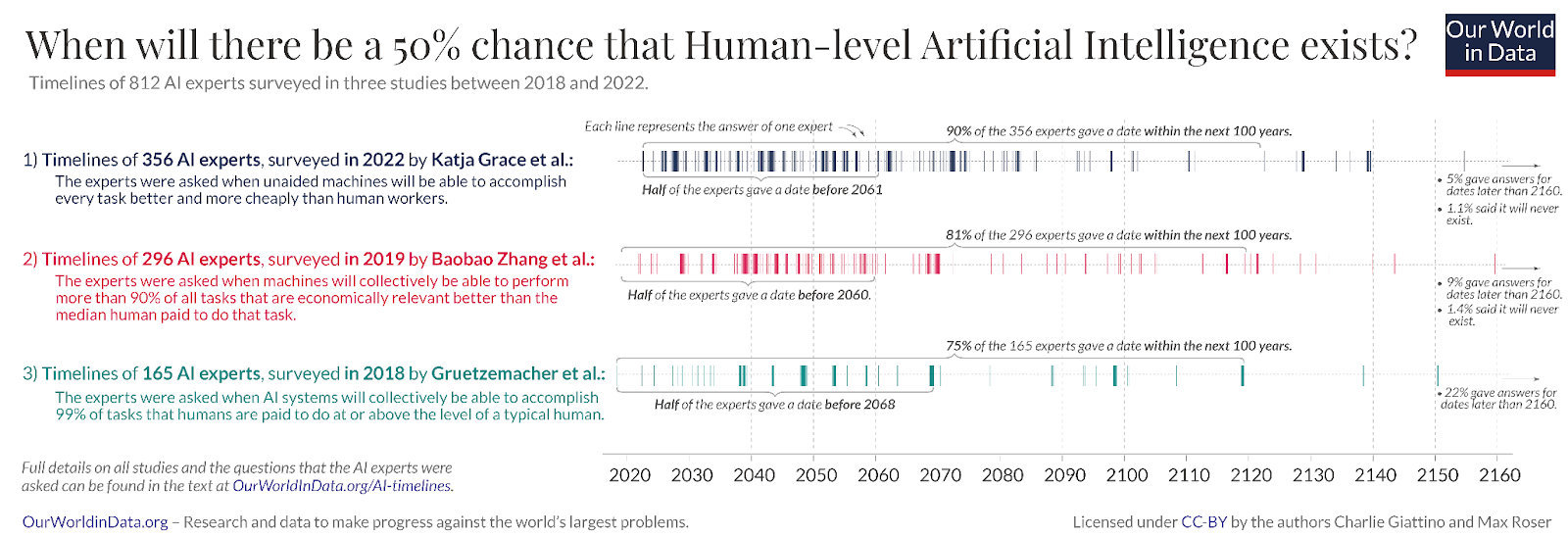

There has yet to be a consensus definition of artificial general intelligence in the field of AI research. That’s one reason why experts have widely differing views on when they think AGI, or at least “human-level artificial intelligence,” will arrive — if it ever does. Our World In Data has collated results from three studies by researchers who surveyed AI experts about their AGI timelines.

These three studies, conducted prior to the release of GPT-4, posit simplified conceptions of AGI that can be used as working definitions:

- Accomplish every task better and more cheaply than human workers.

- Perform more than 90% of all tasks that are economically relevant better than the median human.

- Accomplish 99% of tasks that humans are paid to do at or above the level of a typical human.

Even though those definitions sound similar, they are all quite different, and it’s not clear that this is the best way to classify AI. In November 2023, Google DeepMind released a paper that attempted to better classify the capabilities and behaviors that constitute artificial general intelligence. The DeepMind framework broke down AI performance into different levels:

- Level 1: Emerging – equal to or somewhat better than an unskilled human

- Level 2: Competent – at least 50th percentile of skilled adults

- Level 3: Expert – at least 90th percentile of skilled adults

- Level 4: Virtuoso – at least 99th percentile of skilled adults

- Level 5: Superhuman – outperforms 100% of humans

The paper also distinguished between narrow and general capabilities. Narrow was defined as a “clearly scoped task or set of tasks,” while general was defined as a “wide range of non-physical tasks, including metacognitive abilities like learning new skills.” The DeepMind paper classified the major LLMs — including ChatGPT, Bard, Llama 2, and Gemini — as Level 1: Emerging AGIs. The paper claimed that no AI model, in a general sense, performed higher than Level 1: Emerging.

Musk’s lawsuit ultimately asks for “a judicial determination that GPT-4 constitutes Artificial General Intelligence and is thereby outside the scope of OpenAI’s license to Microsoft.” A jury would make that determination, if the court allows it and if Musk has his way. It’s not yet clear how a court, or a jury for that matter, would be able to determine if any model meets the threshold of AGI, which is a loosely defined term at best. “We’ve seen all sorts of ridiculous definitions come out of court decisions in the US,” ethics professor Catherine Flick told New Scientist. “Would it convince anyone apart from the most out-there AGI adherents? Not at all.”
