Just four months after releasing ChatGPT, AI research firm OpenAI has already upgraded its hugely popular chatbot — and this version is smarter, safer, and capable of sight.

The background: In November 2022, OpenAI released ChatGPT, an AI chatbot that can respond to written prompts with conversational text, computer code, and more. By February, it was the fastest-growing app in history, with more than 100 million monthly users.

Despite its popularity, ChatGPT has demonstrated significant room for improvement, with one of its biggest flaws being a tendency to “hallucinate” — generate text that sounds factual but isn’t.

Getting past the filters designed to keep the AI from writing disallowed content, such as text promoting violence or illegal activity, has also proven relatively easy. And because ChatGPT was trained on a vast amount of text from the internet, its output sometimes reflects the biases of internet users.

The latest: ChatGPT was built on the AI language model called GPT-3.5, and OpenAI has now announced the next advance in the technology: GPT-4.

One of the biggest advances between this model and its predecessor is its ability to respond to text and image prompts — you could ask it to identify the artist behind a painting, explain the meaning of a meme, or generate captions for photographs.

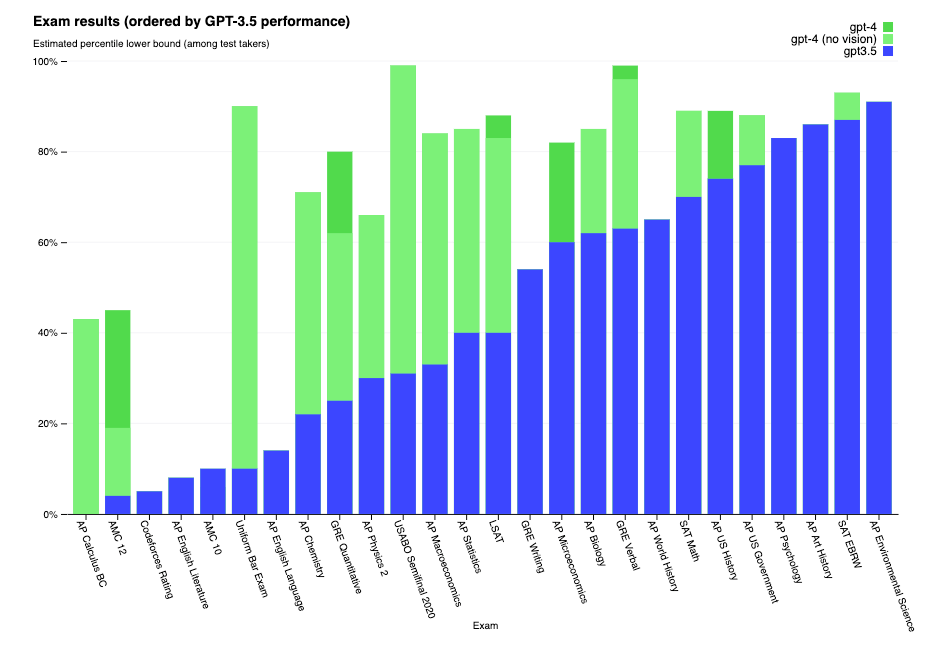

The system is also “smarter” than GPT-3.5, outscoring the ChatGPT model on a slew of exams, including the SAT, the LSAT, and the bar exam.

Perhaps even more importantly, while GPT-4 doesn’t eliminate the problems with GPT-3.5, it does reduce some of them. According to OpenAI, the model is 40% more likely to produce factual responses and 82% less likely to respond to requests for disallowed content.

CEO Sam Altman has also noted that the new model is “less biased,” though the available information doesn’t make clear to what extent, or how OpenAI measured it.

GPT-4 in the wild: OpenAI has yet to make GPT-4’s image input capability available to the public, but the rest of the model is now live in ChatGPT Plus, the subscription version of the chatbot that costs $20 per month (the free version of ChatGPT is still running on GPT-3.5).

Some people might have already used the next-gen AI for free without even realizing it, though. Microsoft — which has invested $13 billion in OpenAI — has incorporated GPT-4 into its new Bing search engine.

“If you’ve used the new Bing preview at any time in the last five weeks, you’ve already experienced an early version of this powerful model,” wrote Yusuf Mehdi, Microsoft’s corporate VP and consumer CMO, on the day of GPT-4’s launch.

Other companies given early access to GPT-4 include educational nonprofit Khan Academy, which is using it to create a virtual tutor for students, and Denmark’s Be My Eyes, which has used it to develop an AI assistant to analyze photographs for people with visual impairments.

“In the short time we’ve had access, we have seen unparalleled performance to any image-to-text object recognition tool out there,” said Michael Buckley, CEO of Be My Eyes. “The implications for global accessibility are profound.”

Looking ahead: OpenAI stresses in blog posts, on Twitter, and in interviews that GPT-4 is still flawed, but the company appears to be making significant progress at a rapid pace and is already exploring ways to incorporate audio, video, and other inputs into future versions.

“We look forward to GPT-4 becoming a valuable tool in improving people’s lives by powering many applications,” the company writes. “There’s still a lot of work to do, and we look forward to improving this model through the collective efforts of the community building on top of, exploring, and contributing to the model.”

Update, 8/9/23, 3:50 pm ET: This article was updated to correct the name of OpenAI’s CEO.
