OpenAI CEO Sam Altman said in January that his company believes 2025 may be the year “the first AI agents ‘join the workforce’ and materially change the output of companies.”

In a recent interview with Axios, Dario Amodei, CEO of AI startup Anthropic and former VP of research at OpenAI, made a bold prediction about how that AI integration could affect the people already in the workforce: within the next one to five years, AI could eliminate 50% of entry-level white-collar jobs and push unemployment up to 10–20%.

Tech CEOs aren’t the only ones predicting that AI’s impact on the economy will be huge. Investors, financial institutions, and macro forecasters have all converged on the same basic storyline: AI’s impact is going to be big, it’s going to happen fast, and it’s going to be disruptive.

But how will the disruption play out?

Charting the disruption

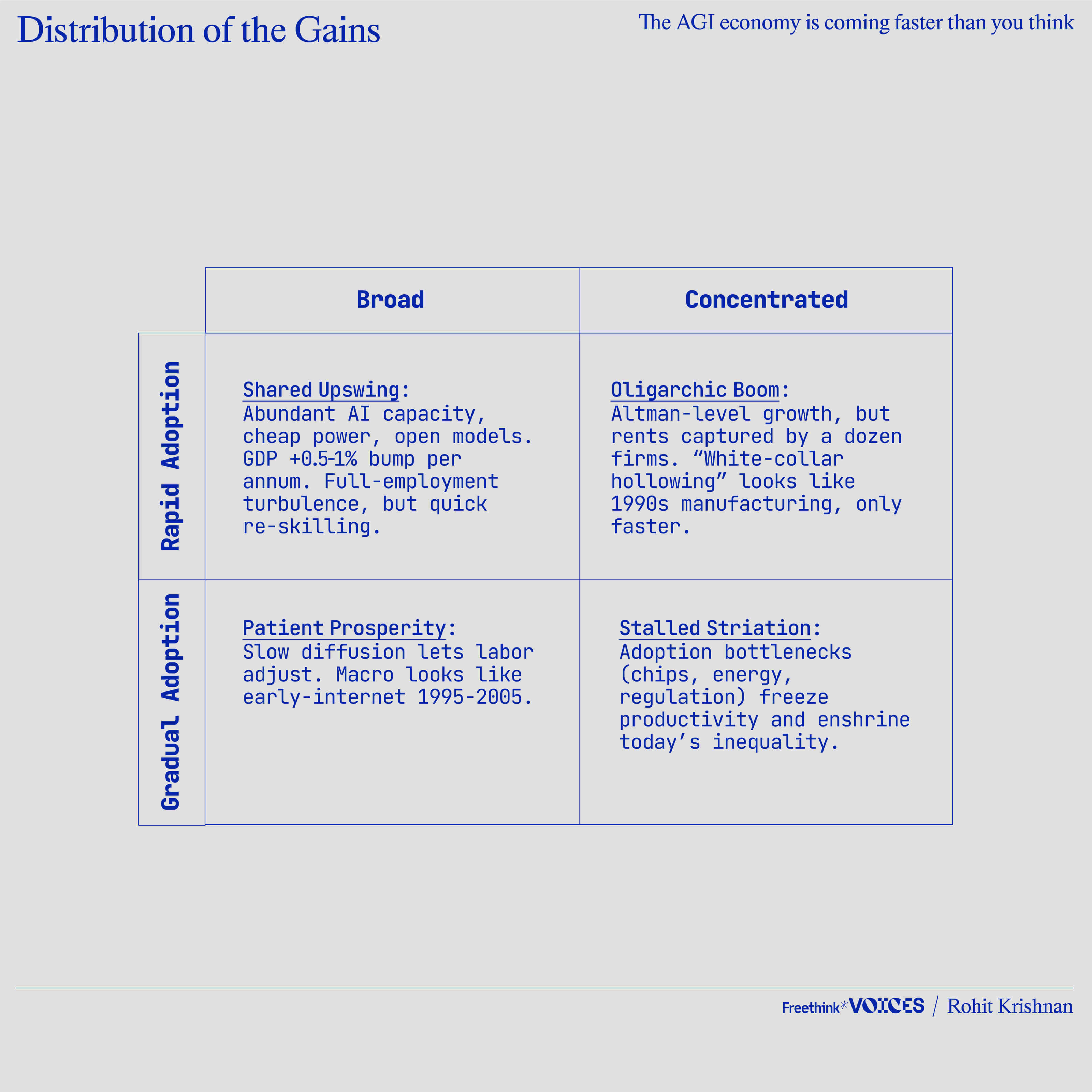

There are roughly four possible outcomes, laid out in the table. The horizontal axis represents how the gains from AI will be distributed: broadly or among a concentrated group. The vertical axis represents the speed of AI adoption: rapid or gradual.

Capital, labor markets, and policy all point us toward the rapid row:

- Capital: Nvidia’s trailing twelve-month revenue cleared $148 billion, up 86% year‑on‑year, and there is still a waiting list for high‑end H100 boards. A single frontier model training run already costs roughly $500 million in hardware and power. The Big Four cloud computing companies plus Meta poured roughly $200 billion into AI/data center capex in 2024 alone.

- Labor: The leading models pass bar exams, draft contracts, and write passable code. Employers are testing AI as a replacement for workers, not just as a tool to augment them. Amodei’s forecasts sound aggressive, but early deployment studies point in the same direction: experiments with standardized tasks at big consulting firms show productivity gains in the 30–40% range when LLM copilots are switched on.

- Policy: Brussels is writing rules for how AI can be used at work, but the European Union’s AI Act focuses on transparency and liability, not moratoria. In the US, the Trump administration revoked the Biden administration’s executive order on AI in January 2025. China is accelerating development of its domestic AI chip stack to get around US export controls. Everywhere, regulators want a slice of the upside and are wary of pushing firms offshore. That is a permissive environment by historical standards.

Put those pieces together, and you get rapid adoption. We’re looking at one to five years, not one to five decades.

The live question, then, is whether we land in the left or right column — broad or concentrated distribution of the gains — and the answer depends on three levers: compute concentration, energy supply, and the emergence of new “meta-work” that keeps humans complementary to AIs.

A grounded scenario for 2030

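Suppose AI adds one percentage point to global productivity annually starting in 2027, as Goldman Sachs projects. Compound that over the four years from 2027 through 2030 and the world is roughly 4% richer by 2030. On a world economy of roughly $110 trillion, call it a bump of more than $4 trillion.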
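The compounding in this scenario is easy to check. The sketch below assumes the boost applies as a flat one percentage point in each calendar year, counts 2027 through 2030 inclusive as four years, and ignores baseline growth; the function names are illustrative, not taken from Goldman Sachs or any other source.

```python
def cumulative_gain(annual_boost: float, years: int) -> float:
    """Cumulative fractional gain from compounding a constant annual boost."""
    return (1 + annual_boost) ** years - 1


def years_inclusive(start: int, end: int) -> int:
    """Number of calendar years from start to end, counting both endpoints."""
    return end - start + 1


if __name__ == "__main__":
    # Assumption: a flat 1-point boost in each year from 2027 through 2030.
    four_year = cumulative_gain(0.01, years_inclusive(2027, 2030))
    # For comparison: the same boost compounded over six years.
    six_year = cumulative_gain(0.01, 6)
    print(f"2027-2030: {four_year:.2%}")  # 2027-2030: 4.06%
    print(f"six years: {six_year:.2%}")   # six years: 6.15%
```

A cumulative gain of about 6% therefore corresponds to roughly six years of one-point boosts, not four.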

At the same time, entry‑level white‑collar employment shrinks by perhaps 30%. Some of the displaced exit through retirement; others are absorbed into the new meta-work or traditional services. In the US, unemployment might peak in the high single digits; in parts of continental Europe, it hits the low teens. Wage dispersion might widen: incomes at the 90th percentile rise by 15%, the median stays flat, and the bottom quartile loses ground.

In such a scenario, international trade reshuffles. Energy- and chip‑rich nations (e.g., the US, Taiwan, and the Gulf states) run surpluses in the sector. Traditional manufacturing exporters, such as Vietnam, lose ground as their customers use automation and robotics to make onshore manufacturing more cost-effective. Service exporters (e.g., India, the Philippines, and Anglophone Africa) do well if they can rent the models cheaply and sell AI‑enhanced tasks abroad.

Meanwhile, inflation splits in two. Digital cognition falls toward zero marginal cost, but electricity, land near data centers, and the rare metals in GPUs stay costly. Agglomeration effects (people want to live where the other geniuses live) keep cities at the center of economic activity, so urban land stays expensive too. The Consumer Price Index might therefore tell a muddled story.

Left to itself, that bundle of facts lands us in the Oligarchic Boom quadrant of our table: rapid gains, captured by a few, with a political backlash brewing.

What drives the split between broad and concentrated gains?

Compute concentration is the first determinant.

Training budgets scale super‑linearly with capability: each step up in capability costs disproportionately more to train than the last. Spending jumped 2,000-fold in the five years from GPT-2 to GPT-4, while capability went from “writes a coherent paragraph” to “aces college exams.” And it hasn’t stopped. That’s why OpenAI is spending tens of billions on Stargate. Unless open source keeps pace, the new “rail barons” will be whoever can afford to spend billions training AI. Talent follows the clusters: Meta is already dangling eight-figure compensation packages for senior AI engineers while rescinding offers to entry‑level hires. Left unchecked, this dynamic pushes us straight toward Oligarchic Boom.
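To get a feel for what a 2,000-fold increase in five years means, the sketch below converts it into an implied constant annual growth factor and a doubling time. It takes the article’s figures at face value and assumes smooth exponential growth, which real training budgets only approximate; the helper names are hypothetical.

```python
import math


def implied_annual_factor(total_multiple: float, years: float) -> float:
    """Constant yearly multiplier that compounds to total_multiple over `years`."""
    return total_multiple ** (1 / years)


def doubling_time_months(annual_factor: float) -> float:
    """Months needed to double at a constant yearly growth factor."""
    return 12 * math.log(2) / math.log(annual_factor)


if __name__ == "__main__":
    factor = implied_annual_factor(2000, 5)  # 2,000x over five years (from the text)
    print(f"~{factor:.1f}x per year")  # ~4.6x per year
    print(f"doubling every ~{doubling_time_months(factor):.1f} months")  # doubling every ~5.5 months
```

Under these assumptions, training spend roughly doubled every five and a half months, a pace only a few firms can fund for long.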

Energy is the second.

The International Energy Agency expects electricity demand from data centers to more than double to ~945 TWh, roughly Japan’s current consumption, by 2030, with AI driving most of the increase. Regions with a cheap supply of clean energy (e.g., Texas for wind, Québec for hydro, the Gulf Coast for nuclear) attract the clusters and run persistent current account surpluses. If electric grid expansion falls behind, adoption slows and the spoils remain locked in a few power‑rich enclaves, leading to Stalled Striation.
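The IEA figure carries two quick implications worth making explicit. If demand “more than doubles” to ~945 TWh, today’s level must be below about 473 TWh; and doubling over six years, assuming a 2024 baseline (an assumption, since the text does not give the base year), requires compound annual growth of at least roughly 12%. The sketch below works through both.

```python
def implied_cagr(multiple: float, years: int) -> float:
    """Compound annual growth rate that yields `multiple` over `years`."""
    return multiple ** (1 / years) - 1


if __name__ == "__main__":
    target_twh = 945  # IEA projection for 2030, from the text
    # "More than double" means the starting level is below half the target.
    baseline_ceiling = target_twh / 2
    # Assumption: 2024 baseline, so six years of growth to 2030.
    min_growth = implied_cagr(2.0, 2030 - 2024)
    print(f"baseline below {baseline_ceiling:.1f} TWh")  # baseline below 472.5 TWh
    print(f"growth of at least {min_growth:.1%} per year")  # growth of at least 12.2% per year
```

Grid capacity has to expand at a comparable clip for the clusters to spread beyond a few power-rich regions.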

Human complements come last, and they are more malleable.

AGI still needs data, goals, and real‑world priors it cannot infer from pixels. If AGI eats cognitive tasks, what valuable human tasks are left? The answer is meta‑work: feeding, steering, and adjudicating the models. The right analogy is not the shift from the assembly line to service work but the shift from agriculture to software: entire categories of work that existed in service to other lines of work become industries in their own right once the cost curve changes.

That creates fresh roles. We could see model shepherds who curate synthetic fraud datasets for insurers, prompt‑pack designers in Nairobi who refine Midjourney outputs, or historians in California labs who flag hallucinated citations. None of these roles is glamorous, but all are essential, and they most resemble the proto‑IT departments of 1965: clunky and overspecialized, but the seeds of much larger sectors. If education systems and gig platforms can scale these complements quickly, the gains can diffuse more broadly.

Nudging the outcome toward Shared Upswing

Economist Tyler Cowen frequently emphasizes marginal thinking, asking questions like: “What institutional reforms are on the margin of being possible?” In terms of the impact of AI on the economy, three candidates could qualify:

- Diffuse ownership of the capital stock. Equity sharing, data‑dividend trusts, or sovereign wealth stakes in frontier labs can spread the excess profits from compute. It is not socialism; it is railroads and telephony redux. If film residuals can be written into Hollywood’s industry contracts, model residuals can be too.

- Speed‑focused re‑skilling. A compute excise tax of even 0.5% on AI companies could fund lifelong learning accounts for every worker in a G‑7 economy, transforming job destruction into task rebundling. Whether it should be done is unclear, but the same logic underlies Altman’s talk of funding universal basic income from the wealth AI companies generate.

- Energy‑capacity pacts. Match every new hyperscale data center permit to an equivalent amount of low‑carbon energy: wind, solar, small modular nuclear, whatever clears. That prevents AI growth from running into a power wall and keeps clusters geographically diversified.

Several things could derail this. Real interest rates of 4% or more might choke off speculative capex, starving both AI infrastructure and re‑skilling initiatives of investment. A few high‑profile AI failures might well provoke EU‑style brakes. And open-source efforts might fall a full generation behind closed labs, all while compute costs soar.

None of the steps above would be costless, either, but all three are politically plausible and would move the needle from Oligarchic Boom toward Shared Upswing.

The bottom line

Skeptics may ask: “If AGI does all cognitive tasks, what is left for humans?”

Historically, when the cost of an input collapses, new downstream goods appear. Electricity, for example, gave us aluminum smelting and MRI scanners. Cheap cognition can give us personalized tutors, automated drug design, neighborhood‑scale business intelligence, and yes, whole markets for evaluating and steering AI itself. These are not sci‑fi fantasies — venture capital is already funding them.

The uncomfortable truth is that many traditional jobs will be replaced by AI — but the emerging truth is that economically valuable complements are already visible. They need scale, norms, and capital, not magic. Whether they arrive in time to prevent the widespread unemployment that Amodei predicts is a management and policy challenge, not a technological one.

Whether you believe Amodei or not, his warnings should not be taken lightly. If what he and many others are saying is even somewhat true, the best-case scenario is an extraordinary disruption to the way we work — the kind that normally takes decades to unfold — over the next few years. Entirely new classes of jobs must emerge because the alternative is widespread unemployment and economic upheaval.

Ultimately, we seem to be headed toward a future where we spend an extraordinary chunk of our economy on data centers and AI infrastructure. That investment will then make space for new segments to flourish, but whether that means current industries will become more productive or entirely new sectors will emerge and quickly grow remains to be seen.


The economic die is not fully cast, but its weight is now measurable.

Rapid adoption looks close to inevitable, but the distribution of gains is still very much to be determined. If institutions manage to spread ownership, train complements, and build the necessary electric grid, 2030 could mark the start of a Shared Upswing: a messy but overall positive rerun of the 1990s internet boom with even larger numbers. If they fail, expect an Oligarchic Boom, which will create fertile ground for grievance politics and regulatory whiplash.

Either way, Amodei’s and Altman’s timelines are too short to ignore, and by the OECD’s estimate, about 27% of jobs are in occupations at high risk of automation. If half of entry-level white-collar jobs are automated, as Amodei warns, many of those roles will be replaced by fractional gigs mediated by AI platforms instead of payroll departments. Skilled professionals who master AI toolchains could see wage increases; incomes will go down for the rest.

The correct response is not panic or complacency, but institutional hustle.

In 1997, WIRED’s “Long Boom” envisioned 25 years of prosperity from PCs and networks, but even that bold forecast is a far cry from the double-digit GDP growth, and the capture of “the light cone for all future value in the universe,” that some now predict will follow in the wake of AI.

As we navigate this transition, we should remember two things. First, every wave of automation has created more work than it destroyed, but only after a painful transition period. Second, the more widely we spread the new tools, the more likely it is that this happy recursion, in which new tools create new work, continues. That is as close to an iron law as economics ever gives us.

We’d love to hear from you! If you have a comment about this article or if you have a tip for a future Freethink story, please email us at [email protected].