This article was reprinted with permission of Big Think, where it was originally published.

We land on time at Madrid-Barajas airport, but there’s a delay disembarking. Turns out a new artificial intelligence system operates the jet bridge here. No humans needed — until they are. Through the window, I see the AI jet bridge repeatedly approaching the plane, flailing gently in the morning air, and withdrawing, again and again, caught in an infinite loop. Eventually, we’re rescued by a worker, who instantly sees and solves the problem.

Humans don’t get stuck endlessly repeating the same behavior. AI sometimes does, whether it’s stalled on the tarmac or locked in the purgatory of an automated call center. These examples might seem like simple failures of intelligence, but I think they point to something deeper: a fundamental limitation that may forever leave even the smartest AI vulnerable to infinite loops, never noticing the problem, never escaping.

Imagine a smarter jet bridge — a system that can monitor its performance and detect when it’s flailing and failing. Thanks to this internal model of its environment and its own behavior, it succeeds where today’s autonomous jet bridges fall short. Eventually, though, it will glitch too. So you add another higher-order feedback loop — a model within its model, monitoring its own monitoring. If that fails? Add another. And so on, with each new level of recursion adding intelligence of a kind, and with it, more robustness. But there’s always the chance of yet another glitch, an error in the self-self-self-monitoring system. Unless you build an infinite stack of recursive self-checking, the system would eventually spiral into an infinite loop, never meeting the conditions that allow it to stop, and everything would fall apart.
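A single level of this self-monitoring can be sketched in a few lines of code. This is only an illustration, not a description of any real jet bridge controller: the names `dock_with_monitor` and `attempt_docking`, and the limit of five attempts, are hypothetical.

```python
from typing import Callable


def dock_with_monitor(attempt_docking: Callable[[], bool], max_attempts: int = 5) -> str:
    """Retry docking while a first-order monitor counts the failures."""
    for attempt in range(1, max_attempts + 1):
        if attempt_docking():
            return f"docked after {attempt} attempt(s)"
    # The monitor has noticed the repeated failure and hands over to a person.
    return "escalated to human operator"


def always_fails() -> bool:
    """A docking attempt that never succeeds (hypothetical fault)."""
    return False


print(dock_with_monitor(always_fails))
```

The monitor catches only the failure it was built to count. A fault in the monitor itself, such as a counter that never advances, would need a further layer to catch it, which is the regress described above.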

Computer scientists have wrestled with this problem for nearly a century. In 1936, Alan Turing proved that no algorithm can always determine whether another algorithm, given some input, will stop or run forever. Here’s an example to capture the intuition. Imagine you’re writing code telling a robot what to do. Some programs are simple — the robot completes the task, and the algorithm ends. But others are complex, with loops and conditions: “Keep moving until you see a red object.” If the robot never sees a red object, or if the logic is flawed, it may follow its instructions endlessly. And no matter how advanced your code, there might be no way to predict whether it will ever stop.
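The robot instruction can be written out as a rough sketch. The function `steps_until_red`, the stream of color names, and the optional step budget are all assumptions made for illustration; they are not part of Turing's proof.

```python
from itertools import cycle, islice
from typing import Iterable, Optional


def steps_until_red(observations: Iterable[str], budget: Optional[int] = None) -> Optional[int]:
    """Return how many steps the robot takes before it sees "red".

    With budget=None this follows the instruction literally, so it may
    never return if the stream is endless and contains no red object.
    """
    stream = observations if budget is None else islice(observations, budget)
    for step, colour in enumerate(stream):
        if colour == "red":
            return step
    return None  # stream ended, or budget used up, without seeing red


print(steps_until_red(["blue", "green", "red"]))
print(steps_until_red(cycle(["blue", "green"]), budget=1000))
```

A finite budget stops the robot, but it does not settle the question. When the function returns `None`, we know only that the robot has not seen red yet, not that it never will.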

Turing’s insight was abstract, but the limits of algorithms became more concrete in the so-called “frame problem.” Laid out by John McCarthy and Patrick J. Hayes in 1969, the frame problem broadly refers to the difficulty of teaching a machine to make smart decisions based on relevant information without having it explicitly consider every irrelevant detail. Because the list of irrelevant information in a given situation can be endless, it’s difficult for machines to know what not to take into account. And this opens the door for yet another kind of infinite loop.

These problems marked milestones in thinking about intelligence — both natural and artificial — but they’ve largely faded into the background as new waves of deep neural networks and generative models have swept through society. This doesn’t mean they’ve disappeared. As the jet bridge in Madrid illustrates, when the silicon meets the tarmac, there’s still plenty of room for things to go wrong. The reason AI systems may always be vulnerable to infinite loops isn’t that they lack processing power: It’s that there’s a deeper difference between these systems and our own embodied, biological brains.

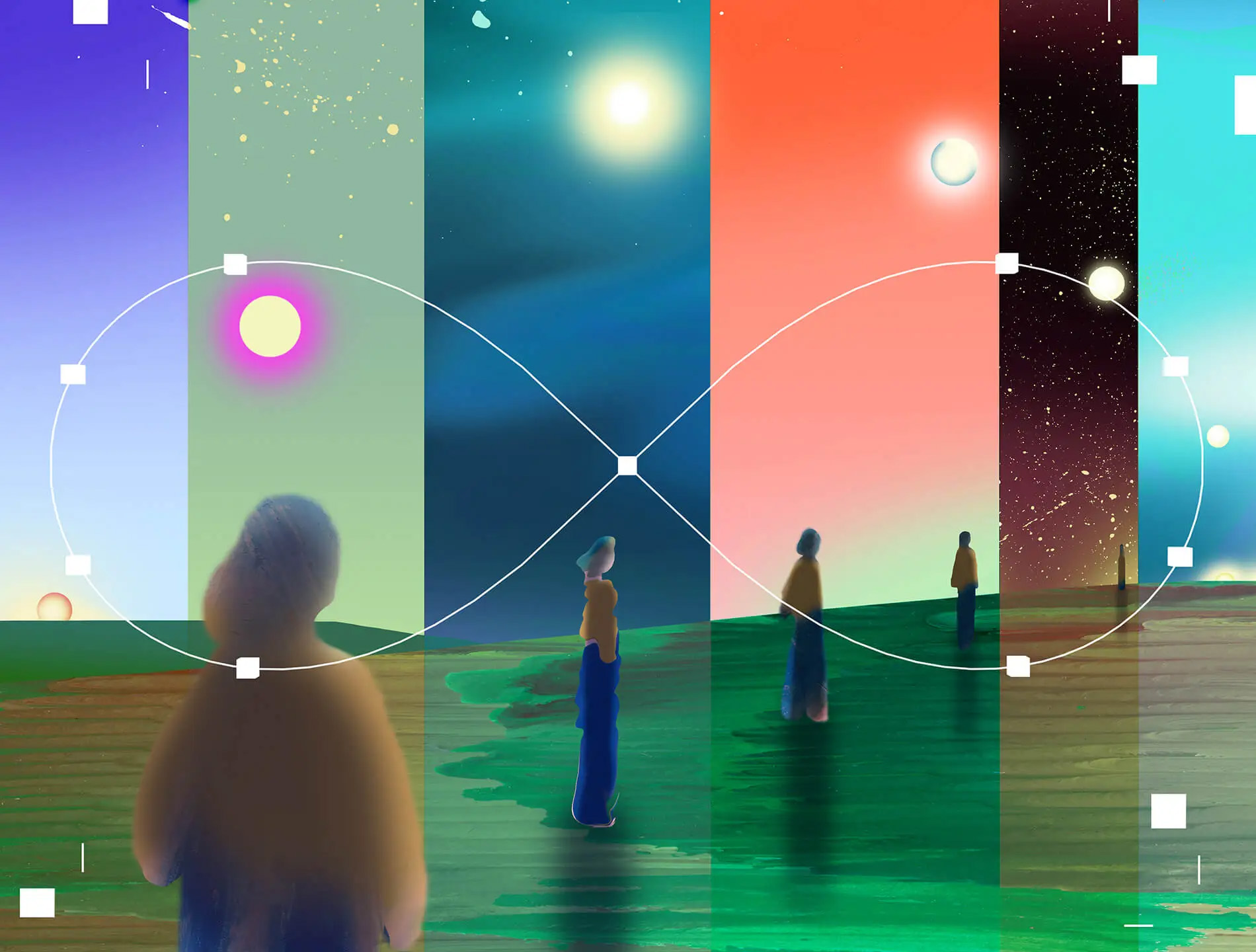

This difference starts with how computers and brains relate to time — and it ends with the everyday miracle of consciousness.

Intelligence and consciousness are distinct concepts — even if some of our tech leaders mistakenly assume that more of the former will inevitably lead to the latter. Put simply, intelligence is all about doing things, while consciousness is about being or feeling. But they are still related, at least in humans and other animals. My hypothesis is that some forms of intelligence — specifically the intelligence needed to fully escape the spectre of infinite loops in an open-ended world — rely on the capabilities that come with a conscious mind deeply embedded in the flow of time.

Anchored in time and entropy

While stacking levels of self-monitoring might be enough for a satisfactory jet bridge, this isn’t how humans handle complexity. Living, conscious creatures such as you and I survive and even thrive in environments far less predictable than airports without needing ever-stranger loops. In fact, human minds, marvelous as they are, struggle with recursion. Most of us can handle about three levels at most: knowing that we know that we know. For example, I can sometimes feel confident in my ability to know when I’m correct or mistaken. Our impressive capability for adaptive behavior in an open-ended world must, it seems, be based on something else.

What could this “something else” be? One possibility is that our minds and brains — and our conscious experiences — are anchored in time, and in entropy, in ways that algorithms, by design, are not.

We are human animals, sharing with other living creatures the need to continually fend off the descent into decay and disorder mandated by the second law of thermodynamics. This law says that the entropy of an isolated system can only ever increase (or remain the same), with entropy being a measure of disorder or randomness. A drop of ink will spread throughout a glass of water and never reform, and a broken egg never makes itself whole again. It is a fearsome law, as the physicist Arthur Eddington recognized: “If your theory is found to be against the second law of thermodynamics, I can give you no hope; there is nothing for it but to collapse in deepest humiliation.”

A crucial point about the second law is that it applies to isolated systems, such as a sealed thermos, where there is no exchange of energy between the system and its surrounding environment. In open systems, where energy exchanges can happen between the system and its environment, it becomes possible to reduce entropy. Living systems are canonical examples of open systems, using energy from their environments to maintain themselves in the statistically surprising condition of being alive.

This process unfolds in various ways and at different levels. The cells in our body continually regenerate the conditions for their own existence through the energetic flux of metabolism. According to some prominent ideas in neuroscience, notably the free energy principle, neural circuits continuously work to minimize the statistical surprise of sensory inputs, to reduce the entropy of these inputs, and in doing so, impose a kind of predictive control on the body’s physiological condition.

Conscious experiences, too, directly reflect this fundamental drive to stay alive. Every conscious experience brings together an enormous amount of survival-relevant information in ways that guide our behavior, whether voluntarily or not. And all those experiences arguably contain a tinge of valence, a dose, however small, of positive or negative feeling, which may nudge us to behave in certain ways. As I wrote in my book Being You, we experience the world around us, and ourselves within it, with, through, and because of our living bodies.

These multiscale processes are deeply intertwined, and each is intimately connected to time, though in different ways. Metabolic processes unfold in biochemical time, perhaps one billion reactions per second in each cell in our bodies. Neural time is slower but equally constrained by the limitations and adaptations of neurobiology: the myelin-accelerated exchange of action potentials and the slow diffusion of neurotransmitters. In the stream of consciousness, the passage of time is continuous, irresistible, and complex, encompassing not only succession but also flow, duration, and persistence. Time in our bodies, brains, and minds is rich, dynamic, multidimensional, and deeply interdependent at all levels, from biochemistry to personal identity. Our entire way of being is inescapably embedded in physical time, and physical time itself is, many physicists believe, anchored in the second law of thermodynamics.

AI’s insatiable appetite

The contrast between our brains and computers is stark and revealing. For a digital computer, time is thin, unidimensional, and abstracted away from its thermodynamic arrow. Computation is all about state transitions: A leads to B, zero to one. In Turing’s classical form of computation, only sequence matters, not the underlying dynamics of whatever material substrate lies underneath. Every algorithm is just one damn state after another. There could be a microsecond or a million years between two steps in an algorithm — between A and B — and it would still be the same algorithm, the same computation.
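The point that only sequence matters can be shown with a toy example. This is a minimal sketch under stated assumptions: `run_counter` and its three-state transition rule are hypothetical, chosen only to show that the pause between steps does not change the computation.

```python
import time
from typing import List


def run_counter(steps: int, delay_seconds: float = 0.0) -> List[int]:
    """Run a toy state machine and return the sequence of states it visits."""
    state = 0
    trace = [state]
    for _ in range(steps):
        time.sleep(delay_seconds)  # wall-clock gap between transitions
        state = (state + 1) % 3    # transition rule: 0 -> 1 -> 2 -> 0
        trace.append(state)
    return trace


fast = run_counter(4)
slow = run_counter(4, delay_seconds=0.01)
print(fast == slow)
```

The two runs take different amounts of wall-clock time but visit the same states in the same order, so as computations they are identical.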

This disregard for time is costly. While computation, as Turing formalized it, exists out of time, computers themselves do not. The insatiable energy appetite of today’s AI is largely due to the demands of error correction — the need to keep ones as ones and zeros as zeros — since even the dead sand of silicon cannot escape the tendrils of entropy. Pretending that time doesn’t exist is an expensive business.

Here lies the key reason why I think algorithms can get stuck in infinite loops, while intelligent animals almost never do. For algorithms, frozen in sequence space and disconnected — at least in principle — from the pull of entropy, there will always be some unforeseen and unforeseeable circumstance in which time itself ends (or, more likely, the power runs out) before the computation concludes.

Unlike computers, we are beings in time — embodied, embedded, and entimed in our worlds. We can never be caught in infinite loops because we never exist out of time. The constant, time-pressured imperative to minimize statistical surprise and maintain physiological viability is, for living creatures like us, the ultimate relevance filter, the reason we almost always find a way through.

I say “almost always” because there are many examples of behavioral repetitiveness that involve a kind of loopiness, even if it’s not infinite. These range from perseverative behavior after damage to the frontal lobes, through obsessive-compulsive disorders and repetitive actions in severe autism and in Tourette’s syndrome, all the way to self-destructive forms of addiction. In a sense, these examples bolster my argument because they reflect disturbances in “normal” neurocognitive function.

If these ideas are on track, then AI systems reliant on Turing computation will forever lack some forms of embodied and entimed intelligent behavior. And if this is true, then the prospect of artificial general intelligence — one that can match or surpass human performance in any cognitive task — becomes even more of a chimera than it already is.

The myth of conscious AI

Could new forms of AI relate to the flow of time in richer ways than allowed by classical computation? There’s a long history here, and an exciting future. The earliest computers (such as the Antikythera mechanism, a 2,000-year-old device for making astronomical predictions) were analogue, operating in continuous time. At the other end of history, researchers are exploring methods such as mortal computation, where computational processes are allowed to depend on the specific hardware that implements them, so that when the hardware “dies,” the algorithm dies, too. This approach promises major gains in energy efficiency by embracing, rather than resisting, the vagaries of the second law of thermodynamics. There are also many flavors of neuromorphic computing, which, to varying extents, mimic how the human brain works and are more grounded in time, compared to Turing’s timeless benchmark.

Approaches to intelligence grounded in dynamical systems eschew computation altogether, focusing instead on concepts like attractors and phase spaces that have a much deeper respect for time. These approaches trace back to the early days of AI and the historically overlooked tradition of cybernetics, which focused on feedback and control rather than on algorithmic symbol processing.

There’s a great deal to be gained from these approaches and many new varieties of AI to be discovered. But still, I doubt that even entimed computation or cybernetic engineering will completely escape the shadow of infinite loops or fully deliver on the kind of open-ended, adaptive intelligence that biological systems achieve.

That type of intelligence, I believe, is intimately and perhaps necessarily tied up, not only with time, but also with consciousness. At least, if you conceptualize consciousness the way I do — as a process deeply linked to the drive to survive. In this view, conscious experiences integrate all the way from a metabolic resistance to entropy to perceptual opportunities for action and planning, always inextricably embedded in the flow of time. It is this multiscale integration that is the difference-maker, the reason why conscious experience could be the currency with the credit sufficient to escape almost any infinite loop and to reframe any frame problem.

Other proposals have linked consciousness with open-ended intelligence, though without focusing on time and entropy. Twenty years ago, the cognitive scientist Murray Shanahan — inspired by the global workspace theory of consciousness — proposed that consciousness provides the resources necessary to solve, or at least sidestep, the frame problem by combining elements of cognition and perception in different ways. Theoretical biologists Eva Jablonka and Simona Ginsburg have argued that consciousness is associated with what they call unlimited associative learning, a particular form of learning that enables the open-ended discovery of novel solutions. Altogether, the picture emerging is one where some forms of intelligence — at least in living creatures and perhaps in any context — may require consciousness.

It’s also worth briefly flipping the perspective. If conscious experiences depend on being deeply embedded in time and on the metabolic imperative to stay alive in a universe governed by entropy, then this has serious implications for the future of AI. Specifically, the temporally thin nature of classical digital computation — one state after another, with the inter-state time interval being totally arbitrary — seems fundamentally incompatible with the nature of consciousness as a richly dynamical process. If consciousness is inextricable from physical time, as it seems to be, then it cannot be a matter of algorithms alone. This hammers one more nail into the coffin for the idea that “AI consciousness” is coming anytime soon — if another nail is needed.

Toward the end of his life, the philosopher Daniel Dennett was fond of talking about the “hard questions” in consciousness science, in playful contrast to David Chalmers’ famous “hard problem” of how consciousness occurs at all. For Dennett, the hard questions are all about the functions of consciousness — not what it is, but what it does. As he put it, a conscious experience occurs — “and then what happens?”

Perhaps the answer lies in time. Consciousness may be nature’s way of allowing its intelligent creations to keep going, to (almost) always find a way.

I am grateful to Tim Bayne, Robert Chis-Ciure, Stephen Johnson, and Ishan Singhal for comments on a first draft of this article. Preparation of this essay was supported by the European Research Council through an Advanced Investigator Grant, number 101019254. I am an advisor to Conscium Ltd and AllJoined Inc.

This article is part of Big Think’s Consciousness Special Issue.