It’s 2027. You just built a complex new website for a client — by yourself, in just one week. A decade ago, a project of this scope would have taken a team of software engineers months to finish, but using the latest AI coding tools, you got it done fast and solo.

Learning to code

“Learn to code.” For decades, that was the go-to advice for anyone entering the workforce or looking to make a career change.

Software engineers earned higher-than-average starting salaries, and as the digital world exploded in size, demand for their skills seemed endless. The kicker? Coding was pitched as something anyone could learn in just a few months, thanks to online bootcamps.

The reality turned out to be a little different — not everyone is wired for the kind of linear thinking coding demands — but still, software engineering seemed like a solid career choice in the early 21st century, especially for anyone with the aptitude and hustle to beat the competition for coveted entry-level positions.

But now, practically anyone can code: All they have to do is tell an AI what they want to build — like a new version of the Freethink website — and it’ll translate their words into working software in minutes.

According to the Bureau of Labor Statistics, employment for computer programmers is now expected to shrink by 10% between 2023 and 2033 — a loss of more than 13,000 jobs — and unemployment rates for recent computer science graduates are already on the rise.

So, is there any point in learning to code anymore?

In search of an answer, this week’s Future Explored is taking a look back at the history of programming and talking to the creator of the most popular AI coding assistant in the world to find out whether he thinks “learn to code” is still worthwhile advice.

Where we’ve been

Like so many transformative technologies, computer programming has roots that can be traced to the US military.

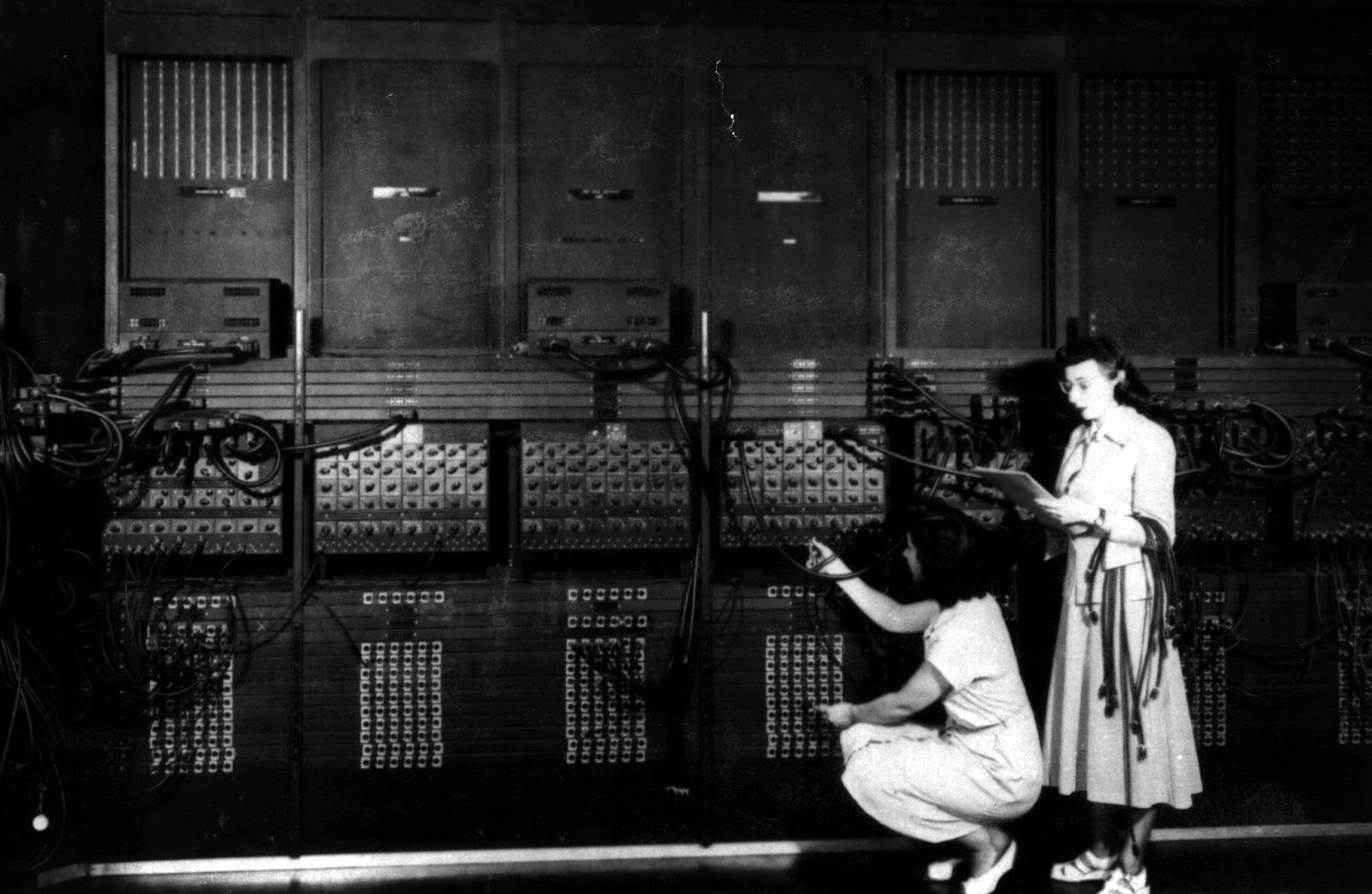

Artillery soldiers need to consider a lot of factors when aiming their weapons, including distance, shell weight, and wind speed. To save them time on the battlefield, the US Army funded the development of the Electronic Numerical Integrator and Computer (ENIAC), a machine designed to generate charts that soldiers could use to quickly work out how to aim at their targets.

Getting ENIAC to perform the calculations needed to create these charts was a tedious process.

Mathematicians first had to figure out on paper how ENIAC’s hundreds of switches and cables needed to be configured in order to run the desired calculation. After making those changes manually, they’d use punch cards — sheets of stiff paper with precisely placed holes — to feed data into ENIAC. It would then perform the calculation and deliver its results as a new punch card that the mathematicians could analyze.

That whole process could take weeks and had to be repeated for each calculation, but in 1948, ENIAC was modified to store a simple program internally, which eliminated the need to rewire the computer for every calculation. This sped up the process, but a human still needed to calibrate the machine and handle the input and output of punch cards.

By the end of the 1950s, the first higher-level computer programming languages had emerged. Now, instead of using punch cards to communicate with computers, people could type their commands into a teleprinter. The computer’s results would print almost instantly, but a mistake could mean having to retype entire lines of code — still a time-consuming process.

Then came the rise of video monitors in the ’70s. This was a huge turning point as it meant programmers could edit their code right on a screen, moving the cursor to fix bugs without having to retype entire segments of their program.

The arrival of personal computers in the ’80s then democratized computer programming. Instead of being accessible only to people connected to universities, government offices, and other big institutions, anyone with a PC could code from the comfort of their own home.

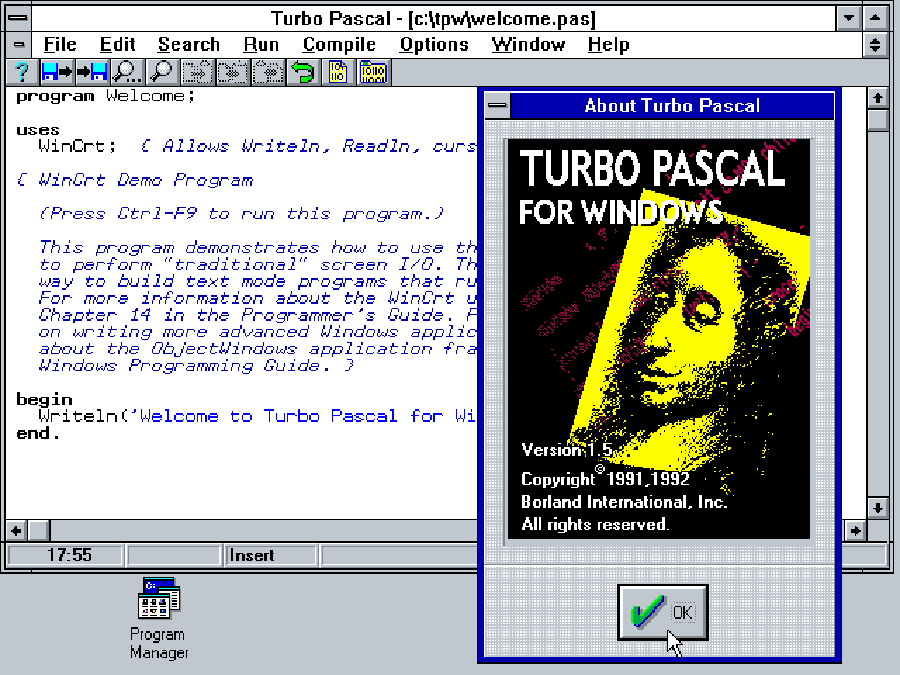

This created a market for new programming tools, such as integrated development environments (IDEs) — platforms that combine editing, debugging, and compiling into one interface, helping programmers work more efficiently.

The internet then gave all those new coders access to a whole network of other programmers to learn from and collaborate with. It also created demand for a lot of new things to code: People wanted websites, games, e-commerce platforms, and new communication systems.

Many new programming languages were also born in the late 20th century. Some were specialized for certain applications, like web development, while others were designed to make programming more accessible to beginners.

Before the turn of the century, many programming tools were already offering basic autocomplete functions, suggesting lines of code to programmers. By the 2010s, some of these autocomplete systems were advanced enough that they could use context — the code around the code — to make more intelligent suggestions.
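To make the idea of context concrete, here is a toy sketch in Python. It is not how any real editor's autocomplete worked; the function names `train` and `suggest` and the sample lines are invented for illustration. It simply counts which word tends to follow which in some existing code, then uses the last word typed to guess the next one.

```python
from collections import Counter, defaultdict

def train(lines):
    """Count which token tends to follow each token in the sample code."""
    following = defaultdict(Counter)
    for line in lines:
        tokens = line.split()
        for prev, nxt in zip(tokens, tokens[1:]):
            following[prev][nxt] += 1
    return following

def suggest(following, context):
    """Suggest the next token based on the last token the user typed."""
    tokens = context.split()
    if not tokens or tokens[-1] not in following:
        return None  # nothing in the sample code matches this context
    return following[tokens[-1]].most_common(1)[0][0]

# Hypothetical "existing code" the tool has already seen.
sample_code = [
    "for i in range(10):",
    "for j in range(10):",
    "for item in items:",
]
model = train(sample_code)
print(suggest(model, "for k in"))  # range(10):
```

Real tools looked at far richer context than a single preceding word, but the principle is the same: the suggestion depends on the code around the cursor, not just on the letters being typed.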

Where we’re going (maybe)

We’re now entering a new era in programming: By training generative AI models on huge databases of code, developers have created “coding assistants” that can write whole blocks of code in response to a user’s prompt.

Microsoft subsidiary GitHub — a platform used by more than 150 million developers and four million organizations — was at the forefront of discovering the transformative potential of generative AI in software engineering.

“We started exploring this whole topic in the middle of 2020,” GitHub CEO Thomas Dohmke told Freethink. “GPT-3, OpenAI’s then state-of-the-art model, had just gone into early preview. We got access and started playing with the model and, on a Zoom call like this one, we asked it to write little code snippets, methods or functions, in different programming languages.”

“It was able to write proper code, putting the parentheses, colons, and whatnot in the right places, and it could differentiate between different programming languages,” he continued. “It wasn’t making mistakes when asked to write a method in Python or a function in JavaScript.”

Following the preview, GitHub started building a coding assistant, now known as Copilot, on top of Codex, an AI model that OpenAI had fine-tuned specifically for programming.

“From June 2020 to June 2021, we shipped the first preview of Copilot, and a year later, we launched general availability to over a million users,” said Dohmke. “By November 2022, ChatGPT arrived and changed how AI systems are seen, but Copilot was already in the market.”

The following year, GitHub added a chat feature to Copilot, enabling users to communicate with the AI using conversational language, just as they would with a fellow developer while collaborating on a project.

Then, in February 2025, it introduced Copilot agent mode. Instead of assigning Copilot a single task at a time, users could now give it a broader goal. Copilot would break that goal into subtasks, handle them independently, and complete the work without pausing for step-by-step guidance.

You can think of it like the difference between telling someone to crack an egg into a bowl and telling them to bake a cake. In agent mode, Copilot will even check its own work and resolve any issues it finds — that’s like the person tasting the batter and realizing they need to add more vanilla.
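For readers who want to see the shape of that loop, here is a minimal Python sketch built on the cake analogy. It is an illustration only, not GitHub's implementation: `run_agent`, `plan`, `execute`, and `check` are hypothetical names, and a real agent would call an AI model where this version uses hard-coded rules.

```python
def run_agent(goal, plan, execute, check, max_attempts=3):
    """Plan subtasks, run each one, and retry any whose result fails its check."""
    results = []
    for task in plan(goal):
        for attempt in range(1, max_attempts + 1):
            result = execute(task, attempt)
            if check(task, result):
                break  # the work passed its own check, so move on
        # If every attempt failed, the last result is recorded anyway.
        results.append((task, result, attempt))
    return results

def plan(goal):
    # Hypothetical planner: break the broad goal into ordered subtasks.
    return ["mix batter", "bake"] if goal == "bake a cake" else [goal]

def execute(task, attempt):
    # The first batch of batter is short on vanilla; each retry adds more.
    if task == "mix batter":
        return {"vanilla_tsp": attempt}
    return {"done": True}

def check(task, result):
    # "Tasting the batter": decide whether the result is good enough.
    if task == "mix batter":
        return result["vanilla_tsp"] >= 2
    return result.get("done", False)

for task, result, attempts in run_agent("bake a cake", plan, execute, check):
    print(task, result, attempts)
```

Here the batter fails its first check and passes on the second attempt, while the baking step passes the first time. The point is the structure: one broad goal goes in, and the loop plans, works, and checks without a person approving each step.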

This ability has greatly increased the amount of code developers can delegate to Copilot.

“In early previews, Copilot was writing about 25% of code — a year later, it was over 40%,” said Dohmke. “Today, depending on how you use Copilot and agents, it can write all the code for certain scenarios.”

GitHub Copilot quickly gained traction with the coding community, growing at a fourfold annual rate in its first years on the market and surpassing 15 million users in 2025.

Between it and all the other AI coding tools now available, a lot of code that people would once have written is now being generated by AI. In 2024, Google CEO Sundar Pichai said about 25% of the company’s new code was AI-generated, while Microsoft CEO Satya Nadella said his company was at about 20-30% as of April 2025.

Many experts predict that share will rise quickly. Microsoft CTO Kevin Scott told 20VC podcast host Harry Stebbings in April that he expects Microsoft will be up to 95% by 2030. Dario Amodei, CEO of AI startup Anthropic, expects an even faster transition.

“What we are finding is that we’re three to six months from a world where AI is writing 90% of the code, and then in 12 months, we may be in a world where AI is writing essentially all of the code,” Amodei predicted in March 2025.

Regardless of the speed, the trend is clear: More and more of our code is going to be written by AI — so is “learn to code” officially bad career advice?

Not at all, according to Dohmke — we just have to adjust our thinking around what it means to know how to code on a professional level.

Eighty years ago, it meant knowing how to adjust the wires and switches on a 30-ton machine — a skill only a handful of people possessed. Forty years later, programmers were people who knew what functions to type into a PC — thousands of people made a living doing that, while countless others coded on an amateur level.

Now, coding requires little more than the ability to type — or even just speak — your software idea to an AI. That means millions of people now have the basic skills to become programmers, and Dohmke predicts they’ll use those skills to create software solutions to all kinds of problems.

“We’re moving toward personalized apps made just for individuals, like an app you might build to prep for this interview or plan a trip to Paris,” Dohmke told Freethink. “These are things companies would never build for individuals, but AI enables us to.”

Essentially, “learn to code” will mean “learn to prompt,” and if we’re smart, that’s something we’ll start teaching students as early as elementary school, according to Dohmke.

“Kids already naturally learn by asking questions,” he told Freethink. “They’ll grow up with AI agents like Copilot, just like Gen Z grew up with smartphones. I grew up with books and magazines to learn coding…Prompting will become a natural skill.”

According to Dohmke, we will still need professional software engineers who know the ins and outs of writing code, but as with previous advances in software engineering, the nature of their work is going to change.

“We’ve always moved up the abstraction ladder, from punch cards to BASIC to Turbo Pascal to internet collaboration and open-source projects,” he told Freethink. “AI is the next level: translating natural language ideas into code, breaking problems into smaller blocks, integrating with existing codebases.”

Each time we’ve moved up that ladder, the people on the lower rungs have had to adapt. Punch card programmers, for example, could either learn higher-level computer programming languages or look for another line of work.

Still, during past transitions, programmers had years, if not decades, to reskill — some companies were still using punch cards into the 1980s.

The transition to AI-generated code is happening on a much shorter timeline, and it’s also far broader — AI isn’t just changing how we write code, it’s automating the entire software development pipeline, from generation to deployment and even maintenance.

Ultimately, as AI takes over more and more of the work typically handled by entry-level programmers, breaking into the field will become increasingly challenging. But as the bar rises, so will the ceiling for what a single developer can achieve.

“Problems that are easy to solve with AI won’t have business value because anyone can do them, but developers will take on bigger, more complex challenges,” Dohmke predicts. “We’re not replacing ourselves; we’re evolving.”
