Columbia University researchers have built a robot hand that can deftly manipulate objects without seeing them — allowing it to work in complete darkness.

The challenge: We’ve designed our environment around the dimensions and capabilities of the human body, so for robots to navigate our homes and workplaces, some believe we need to make the bots as “human” as possible.

One of the biggest hurdles has been replicating the human hand, which has 27 degrees of freedom and incredible touch sensitivity. Together, these let us manipulate objects while knowing exactly how much pressure to use to grasp them.

To give their robots a stand-in for touch, some developers equip them with cameras so that the bot’s brain can analyze footage of an object and tell the hand how to grasp it. But cameras require sufficient lighting, which isn’t always available, and appearances can be deceiving: an object that looks firm might actually be flexible.

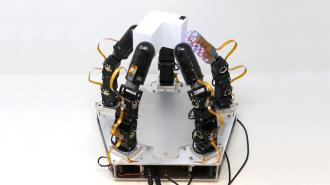

What’s new? A team at Columbia University has now unveiled a robot hand that doesn’t exactly look human (it has five identical fingers sticking up from a flat, pentagon-shaped base) but is nimble and can feel objects without seeing them, just like our hands.

“In this study, we’ve shown that robot hands can also be highly dexterous based on touch sensing alone,” said co-author Matei Ciocarlie.

A paper detailing the work has been accepted for publication at the 2023 Robotics: Science and Systems Conference in South Korea and is currently available as a preprint on arXiv.

How it works: The new robot hand builds on advanced touch-sensing technology that the Columbia researchers unveiled in 2020 in the form of a single robot finger.

That finger featured many small lights shining outward. By measuring how those lights bounced off the inside of a skin covering the finger, the researchers could tell when pressure was being applied to the outside of the digit, allowing them to give the finger a sense of touch.
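
To make the idea concrete, here is a minimal Python sketch of baseline-comparison touch detection. It is not the Columbia team's method, which is considerably more sophisticated. It assumes each light sensor in the finger reports a single brightness value, that a no-touch baseline has been recorded, and that a change of more than a hypothetical 5% signals contact. The function name `detect_contact` and the threshold are illustrative assumptions.

```python
from typing import List, Sequence, Tuple


def detect_contact(
    readings: Sequence[float],
    baseline: Sequence[float],
    threshold: float = 0.05,  # hypothetical: a 5% relative change counts as touch
) -> Tuple[bool, int, List[float]]:
    """Compare light-sensor readings with a no-touch baseline.

    Returns (touched, strongest_sensor, relative_changes). strongest_sensor is
    the index of the sensor whose signal changed most, a crude stand-in for
    where the skin is being pressed.
    """
    if len(readings) != len(baseline):
        raise ValueError("readings and baseline must have the same length")
    # Relative change of each sensor's signal versus its untouched value.
    changes = [abs(r - b) / b for r, b in zip(readings, baseline)]
    touched = any(c > threshold for c in changes)
    strongest = max(range(len(changes)), key=changes.__getitem__)
    return touched, strongest, changes


# Usage: pressing the skin changes the light reaching sensor 2.
baseline = [1.00, 1.00, 1.00, 1.00]
readings = [1.00, 0.98, 0.80, 1.01]
touched, strongest, _ = detect_contact(readings, baseline)
print(touched, strongest)  # True 2
```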

For their new study, the researchers incorporated that tech into five fingers. They then taught the fingers to work together by training algorithms in a computer simulation that replicated the physics of the real world.

In that simulation, the algorithms practiced using a computer model of the hand to rotate objects, learning through trial and error (a technique called “reinforcement learning”). Once trained, the algorithms were given control over the physical robot hand.
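
Trial-and-error learning can be hard to picture, so here is a toy Python sketch using tabular Q-learning, a classic reinforcement learning algorithm. It is not the researchers' setup; their simulator, hand model, and training method are far richer. Everything below is hypothetical: the object's orientation is reduced to eight discrete angles, the "hand" has three made-up actions (`rotate`, `hold`, `release`), and the reward is +1 for each rotation step and -10 for dropping the object.

```python
import random

# Hypothetical toy task: object orientation discretized into N_ANGLES steps.
N_ANGLES = 8
ACTIONS = ("rotate", "hold", "release")  # hypothetical action set


def step(angle, action):
    """Toy 'simulator' returning (next_angle, reward, done)."""
    if action == "rotate":  # some fingers reposition while others keep grip
        return (angle + 1) % N_ANGLES, 1.0, False
    if action == "hold":  # object stays put, no progress
        return angle, 0.0, False
    return angle, -10.0, True  # "release": object falls, episode ends


def train(episodes=500, steps=20, alpha=0.1, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_ANGLES) for a in ACTIONS}
    for _ in range(episodes):
        angle = rng.randrange(N_ANGLES)
        for _ in range(steps):
            # Epsilon-greedy: sometimes explore at random, otherwise exploit.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(angle, a)])
            nxt, reward, done = step(angle, action)
            # Q-learning update toward reward plus discounted best next value.
            best_next = 0.0 if done else max(q[(nxt, a)] for a in ACTIONS)
            target = reward + gamma * best_next
            q[(angle, action)] += alpha * (target - q[(angle, action)])
            if done:
                break
            angle = nxt
    return q


def greedy_policy(q):
    """The action the trained agent would pick in each state."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_ANGLES)}


q_table = train()
policy = greedy_policy(q_table)
print(policy)
```

With these settings the learned policy should choose `rotate` in every state: through its own mistakes, the agent discovers that releasing the object is costly and that holding still earns nothing. The analogous step in the real work is handing the trained controller to the physical hand.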

To demonstrate the system, the researchers had the robot hand grasp and rotate an unevenly shaped object between its fingers. This seemingly simple task is challenging because the bot must constantly reposition some of its fingers while using others to prevent the object from falling.
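
The balancing act described above can be expressed as a simple rule. The following Python sketch is a hand-written illustration, not how the Columbia hand decides, since its behavior is learned rather than hard-coded. It assumes touch sensing reports which of the five fingers currently contact the object, that fingers are lifted one at a time, and that at least three contacts (a hypothetical `min_contacts` value) must remain to keep the object from falling.

```python
from typing import List, Sequence


def fingers_free_to_move(in_contact: Sequence[bool], min_contacts: int = 3) -> List[int]:
    """Return indices of fingers that may be repositioned right now.

    A finger not touching the object can always move. A finger touching it
    may be lifted only if the remaining contacts would still number at least
    `min_contacts` (a hypothetical stability rule). Assumes fingers are
    lifted one at a time.
    """
    total = sum(in_contact)
    return [
        i
        for i, touching in enumerate(in_contact)
        if not touching or total - 1 >= min_contacts
    ]


# Four fingers touching: any single finger can be lifted and moved.
print(fingers_free_to_move([True, True, True, False, True]))   # [0, 1, 2, 3, 4]
# Only three touching: those must hold on, so only fingers 3 and 4 move.
print(fingers_free_to_move([True, True, True, False, False]))  # [3, 4]
```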

Not only did the robot hand ace the test, it did so in the dark, demonstrating that it relies on its sense of touch, not sight.

Looking ahead: In their paper, the researchers note that many real-world situations will likely benefit from bots that rely on both touch and sight, so while their robot hand doesn’t need cameras to manipulate objects, they still plan to give it a vision system.

“Once we also add visual feedback into the mix along with touch, we hope to be able to achieve even more dexterity, and one day start approaching the replication of the human hand,” said Ciocarlie.

We’d love to hear from you! If you have a comment about this article or if you have a tip for a future Freethink story, please email us at [email protected].