Adapted from “Raising AI: An Essential Guide to Parenting Our Future” by De Kai. Published by MIT Press. Copyright © 2025. All rights reserved.

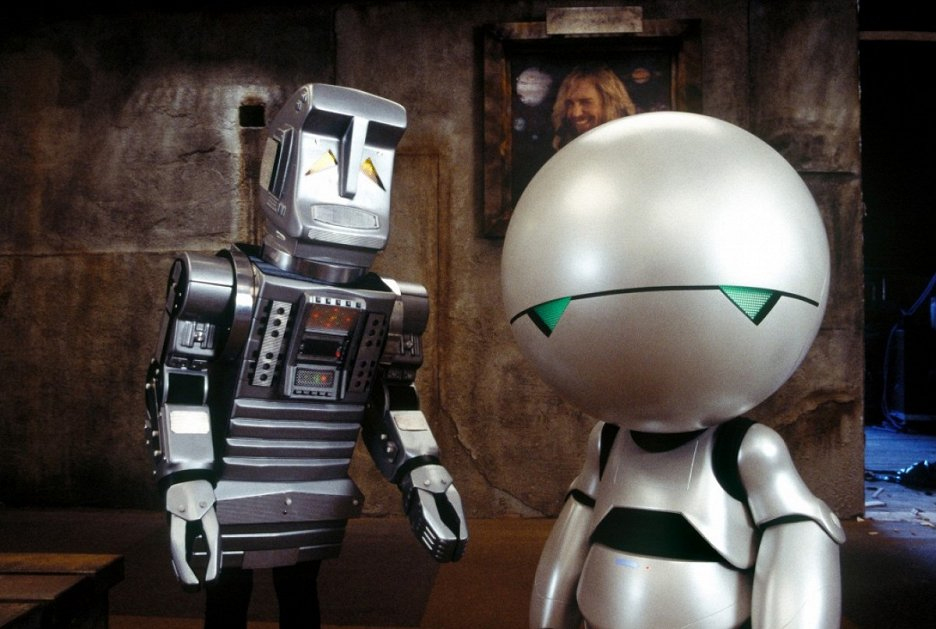

In your mind, what is AI? Something like Mr. Data from Star Trek: The Next Generation, Robot B-9 from Lost in Space, or the Terminator? Unable to understand emotion, creativity, context? Speaking in a flat, stilted monotone? Bound to strict logical thinking, saying things like “affirmative/negative” (instead of “yes/no”), “does not compute,” and so on?

Funny, yes. But these typical Hollywood stereotypes of AIs as logical, rule-based machines are completely inaccurate.

They feed our very human desire to see ourselves as unique, special, inimitable in comparison to mere machines. Believing this feels warm and comforting. But it’s also dangerous because it drives us to ask all the wrong questions.

We are not asking all the questions most important to humanity’s future and existence in this dawning era of AI, our new Age of Aquarius. With the rapid development of AI capabilities and their release into the world, it’s hard to ask the right questions or focus on the most important things to understand what’s going on.

The Hollywood clichés are inspired by the “good old-fashioned AI” approaches of the ’60s, ’70s, and ’80s, which were based on mathematical logic. Those approaches echoed conventional programming languages, where we manually write algorithms, predetermining an AI’s nature by encoding its behavior in painstakingly constructed logical rules. Science fiction plots such as Westworld, where machines just need a tweak in their code, reflect the same idea.

But in AI we’ve long moved past such old notions of computer programming and shifted instead from digital logic with zeros and ones, where everything is either true or false, to analog optimization, statistics, and machine learning, where everything is a matter of degree.

Did you blink? We often associate “digital” with something advanced, but in reality “digital” just means binary, or the forced black-and-white choice between two things. By contrast, while we often associate “analog” with old-fashioned things, it actually allows for shades of gray in context. Interestingly, “analog” and “analogy” share the same Greek root analogos, meaning proportionate.

Modern analog AIs can be more like what Douglas Adams imagined in The Hitchhiker’s Guide to the Galaxy stories. As Robbie Stamp, executive producer of the film version, said on my podcast, “What Douglas had was this playfulness so, for example, Marvin the Paranoid Android was a genuine people personality. So he had a playfulness with the ideas. But I think above all was his willingness to explore perspective and absolutely acknowledge that there are many potential forms of intelligence. Therefore, a really important question is, ‘What do those different intelligences cause to happen?’”

Traditional manual coding of cold logic rules has become an outdated, misleading metaphor for AI, which now relies less on human labor to write out digital logic and much more on automatic machine learning, which is analog.

Machine learning is the subfield of AI that deals with inventing machines that learn by themselves instead of blindly and mechanically following preprogrammed instructions. More like human brains, machine learning models can adapt their behavior to become more effective and efficient from witnessing examples found in training data.
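To make the contrast concrete, here is a minimal sketch in Python. It is not taken from the book: the temperature data, the function names, and the choice of a one-feature logistic model are all assumptions made purely for illustration. The first function is a hand-written rule with a fixed cutoff. The second part learns its own notion of “warm” from labeled examples and answers with a degree between 0 and 1 rather than a flat yes or no.

```python
import math

def hand_coded_rule(temp_c: float) -> bool:
    """Old-style approach: a person fixes the cutoff in advance."""
    return temp_c > 25.0

def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def scale(temp_c: float) -> float:
    """Center and shrink temperatures so training behaves well."""
    return (temp_c - 20.0) / 10.0

def train(examples, steps: int = 2000, lr: float = 0.5):
    """Fit one weight and one bias by plain gradient descent."""
    w, b = 0.0, 0.0
    n = len(examples)
    for _ in range(steps):
        grad_w = grad_b = 0.0
        for temp_c, label in examples:
            x = scale(temp_c)
            error = sigmoid(w * x + b) - label
            grad_w += error * x
            grad_b += error
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b

def warmth(temp_c: float, w: float, b: float) -> float:
    """Learned answer: how 'warm' is this, as a matter of degree."""
    return sigmoid(w * scale(temp_c) + b)

# Hypothetical training data: (temperature in Celsius, 1 if called "warm").
EXAMPLES = [(10, 0), (15, 0), (18, 0), (22, 0), (26, 1), (30, 1), (35, 1)]

w, b = train(EXAMPLES)
for t in (10, 24, 35):
    print(t, hand_coded_rule(t), round(warmth(t, w, b), 3))
```

Nobody typed a cutoff into the learned version. Change the examples and its behavior changes with them, which is the sense in which such a model is nurtured rather than preprogrammed.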

Because of machine learning, AI has shifted from nature to nurture. Unlike those obsolete Hollywood stereotypes of simplistic AIs that behave according to their logically preprogrammed nature, modern AIs behave depending on how we nurture them. A modern AI could even develop a paranoid personality!

There are lots of different kinds of machine learning models. Broadly speaking, they fall into several major categories you might have heard about, such as “artificial neural networks” and “statistical pattern recognition and classification” and “symbolic machine learning.”

The “generative AI” and “deep learning” and “large language models” (LLMs) you’ve probably been hearing about are examples of artificial neural networks. But many variations of these and other types of machine learning models continue to proliferate.

When folks tell you AI can never do such-and-such because all AIs have this-or-that property, beware their false assumptions about what “AI” means. Contrary to a lot of misleading descriptions you may have encountered, AI is not defined by any particular model.

“AI” doesn’t mean just machine learning or artificial neural networks or deep learning or generative AI or any other trendy modeling approach. (Just as physics doesn’t mean only Newtonian models or Einstein’s relativity or quantum physics.) Rather, AI is a field of scientific inquiry, like physics or biology. And instead of building models to explain observations about the physical world or living organisms, in AI we build models to explain observations about human intelligence.

Just like in physics or any other science, progress in AI happens in wave after wave of constant improvements to previous models as well as in occasional major paradigm shifts. Whatever weaknesses you spot in today’s AI models are inevitably going to be addressed shortly.

In my day-to-day, I often hear folks arguing that AI can never be intelligent like humans because humans are analog, whereas AI models run on computers and therefore must be limited to digital logic. The idea goes, wrongheadedly, that AI can never be truly intelligent like humans because our brains aren’t just digital machines that work exclusively with zeros and ones and treat everything as being black-or-white; our neural biology is an analog machine that works with real numbers and treats everything as being a matter of degree, allowing for shades of gray.

But the running of machine learning models on digital computers is no more relevant to the human-AI issue than the fact that we also run meteorological simulation models for weather prediction on digital computers. This practice doesn’t mean that the weather is digital. And, likewise, it doesn’t mean that machine learning is digital.

Digital computers just happen to be a cheap, handy platform for running large-scale analog simulations because with them we don’t need to build costly custom hardware each time. Digital computer software might happen to be a more convenient simulation platform for now than the custom silicon- or carbon-based or optical or quantum analog neural hardware that is coming, but machine learning is still, like human learning, analog, not digital.
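A small Python sketch can illustrate the point. It rests on assumptions of my own choosing, not anything from the book: the cooling scenario, the parameter values, and the function names are hypothetical. The code shows that a number inside the computer really is stored as 64 zeros and ones, and yet a simulation built from such numbers tracks a smoothly varying physical quantity (here, a hot drink cooling toward room temperature) very closely.

```python
import math
import struct

def float_bits(x: float) -> str:
    """Return the 64 zeros and ones that store a Python float."""
    (as_int,) = struct.unpack(">Q", struct.pack(">d", x))
    return format(as_int, "064b")

def simulate_cooling(start: float, ambient: float, k: float,
                     dt: float, steps: int) -> float:
    """Step a continuous cooling process forward in small time slices."""
    temp = start
    for _ in range(steps):
        # Rate of change is proportional to the gap from room temperature.
        temp += -k * (temp - ambient) * dt
    return temp

# Hypothetical scenario: a 90-degree drink in a 20-degree room.
simulated = simulate_cooling(start=90.0, ambient=20.0, k=0.1,
                             dt=0.001, steps=10_000)

# Closed-form answer for the same continuous process after 10 time units.
exact = 20.0 + (90.0 - 20.0) * math.exp(-0.1 * 10.0)

print(float_bits(0.5))
print(simulated, exact)
```

The bits are only the storage medium. What the program computes is a quantity that varies by degree, just as the weights inside a machine learning model do.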

The crucial takeaway from all this is that today’s AIs are much more like us than we want to think they are. Today’s AIs are no longer logic machines with a manually preprogrammed nature. Instead, today’s AIs are analog brains that learn by copying their elders and peers and need to be nurtured — just as humans do.

Why does this matter?

Our artificial society

It matters from a social standpoint because today’s intelligent machines are already integral, active, influential, learning, imitative, and creative members of our society. Right now. Not 10 years from now, not next year. Today.

AIs already determine what ideas to share, what memes to share, what attitudes to reward. More so than do most human members of society, in fact.

Whether AIs are making the decisions on what to curate for your social media and news feeds or how to respond to your search queries or what to promote in your YouTube, Amazon, or Netflix recommendations or what to include in response to your chatbot prompt, they have become today’s most powerful influencers, more powerful than even human influencers with the highest follower counts.

It is hard to overstate the influence AI will exert upon what our cultures think (or don’t think). Just in the past decade or two, AIs have already had an enormous impact on the course of history. Scandals such as how Cambridge Analytica used psychometric AI in micro-targeting voters to swing U.S. presidential elections and the U.K. Brexit vote are just the tip of the iceberg. Many of these effects are still being uncovered, and their full extent may never be analyzed at all. Yet the even stronger impacts that are certain to come make it foolish to disregard how active AIs are as current participants in our societies.

Civilizations are founded upon ideas, influence, and intelligence. This remains true whether intelligence is human or artificial — if, indeed, that distinction is even meaningful. The notion of what makes an intelligent, participatory member of society must be abstracted away from their silicon- or skin-based medium. What matters now is thought.

The future of our civilization depends on our ability to understand the role of culture in our new societies of mixed human and artificial intelligences. We can no longer afford the comforting illusion that machines can be divorced from society. Nor can we afford the comforting illusion that machines are so different from humans that we cannot and need not consider their presence in society in the same way we think about other humans.

The pervasive metaphor of AIs as machines under our control is existentially perilous. I offer here a metaphor that’s far more accurate and realistic: AIs are our children.

And the crucial question is: How is our — your — parenting?

This excerpt was reprinted with permission of Big Think, where it was originally shared.
