University of Cambridge researchers have developed a “touchless touchscreen” for vehicles that predicts what a driver wants to tap on a digital display before their hand actually makes contact.

In testing, the system cut the time drivers spent interacting with their displays by 50%. That could help curb distracted driving by shortening the time a driver's focus is off the road.

Distracted Driving

Every year, about 3,000 people in the U.S. die because someone was driving while distracted. Instead of watching the road, that driver might have been looking at their phone, fiddling with the radio, or typing an address into their navigation system.

Cambridge’s touchscreen might not be able to get smartphones out of drivers’ hands, but it could minimize the amount of time they spend on those other, more driving-adjacent tasks.

The university developed the “predictive touch” technology powering the system in collaboration with Jaguar Land Rover.

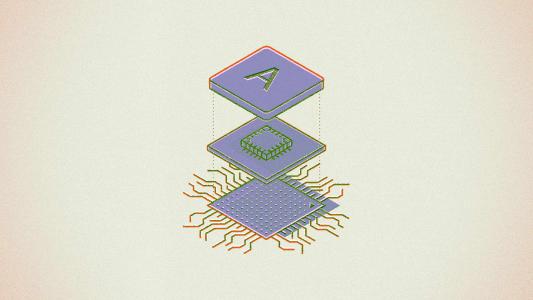

This tech uses a combination of sensors — including eye-gaze and gesture trackers — to monitor a driver’s movements, feeding the data to an AI-powered program.

When the program senses that a driver is reaching toward the vehicle’s display, it predicts what they’re most likely trying to do, taking into account the sensor data as well as contextual information, such as the current environmental conditions.

To test their predictive touch tech, the researchers turned to driving simulators and road trials.

Based on the data from these tests, they determined that predictive touch reduced the time drivers spent interacting with their displays by half, quickly and accurately guessing where people wanted to touch.

That accuracy held up even when tested in moving cars, on bumpy roads, and by people with hand tremors, such as those with Parkinson’s disease.

With a standard touchscreen, those variables can cause a driver to tap the wrong spot on a display — and the time they then have to spend correcting the mistake is more time that they’re distracted driving.

Predictive Touch for Everything

Cambridge and Jaguar aren’t the first to explore the use of gesture recognition tech in vehicles — BMW started selling cars equipped with it in 2016, and since then, several other automakers have followed suit.


But those systems can only reduce distracted driving for people willing to buy new cars equipped with them. Cambridge's predictive touch system, by contrast, can reportedly be retrofitted to work with any touchscreen.

That means it could one day make everything from smartphones to ATMs “touchless” — and while that won’t do anything to keep drivers’ eyes on the road, it could help stem the spread of germs and save you from having to constantly wipe fingerprints off your devices.

We’d love to hear from you! If you have a comment about this article or if you have a tip for a future Freethink story, please email us at [email protected].