The following is Chapter 8, Section 1, from the book The Techno-Humanist Manifesto by Jason Crawford, Founder of the Roots of Progress Institute. The entirety of the book will be published on Freethink, one week at a time.

The acceleration that we saw in Chapter 6 is driven by a feedback process in which growth itself improves the mechanisms of growth.

When we zoom out to look at thousands of years, this process looks smooth and continuous. But when we zoom in, it appears as discrete improvements. Some of these are small, like a new type of machine tool. Some are large, like electric power—such a fundamental enabler of other improvements that we call it a “general-purpose technology.”1 And some are so fundamental that they reorganize civilization itself.

The last category, however, is rare. There have only been three basic modes of production in human history, which define the three main eras: the stone age, the agricultural age, and the industrial age.

Is industrial production the end of economic history? Or could there be a fourth age of humanity? And what would such a future look like?

Recall that annual growth rates in world GDP were less than a hundredth of a percent in the stone age, a fraction of a percent in the agricultural age, and single-digit percentage points in the industrial age. If this pattern continues, a fourth age would eventually produce sustained double-digit growth, meaning a world economy doubling time measured in years. Such a massive shift could only come from a technology that is as fundamental to production as tools in the stone age, farming in the agricultural age, or engines in the industrial age.
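
To make "doubling time measured in years" concrete: at a constant annual growth rate g, an economy doubles every ln 2 / ln(1 + g) years. The sketch below uses illustrative round rates placed within each era's range as described above. They are assumptions for illustration, not historical estimates.

```python
import math

def doubling_time(annual_growth_rate: float) -> float:
    """Years for an economy to double at a constant annual growth rate (0.03 = 3%)."""
    return math.log(2) / math.log(1 + annual_growth_rate)

# Illustrative rates within each era's range (assumptions, not measured data)
ERAS = [
    ("stone age", 0.00005),             # under a hundredth of a percent
    ("agricultural age", 0.001),        # a fraction of a percent
    ("industrial age", 0.03),           # single-digit percent
    ("hypothetical fourth age", 0.10),  # double-digit percent
]

for era, rate in ERAS:
    print(f"{era:>24}: doubles every {doubling_time(rate):,.0f} years")
```

At 10% a year, the world economy would double roughly every 7 years, compared with roughly every 23 years at 3%.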

In the year 2025, the best candidate for such a technology is AI.

The promise of AI is that it could be to cognitive work what power and mechanization were to physical work: a technology that automates much of it and assists with most of the rest. Someday, AI could help perfect robotics, which would let us automate most of what remains of physical labor as well.2 Anthropic CEO Dario Amodei has predicted that sufficiently powerful AI would be like a “country of geniuses in a data center” and could accelerate fields such as biology and medicine by 10x, helping us make a century of progress in those fields in a decade.3 OpenAI CEO Sam Altman calls the AI future the “intelligence age.”4

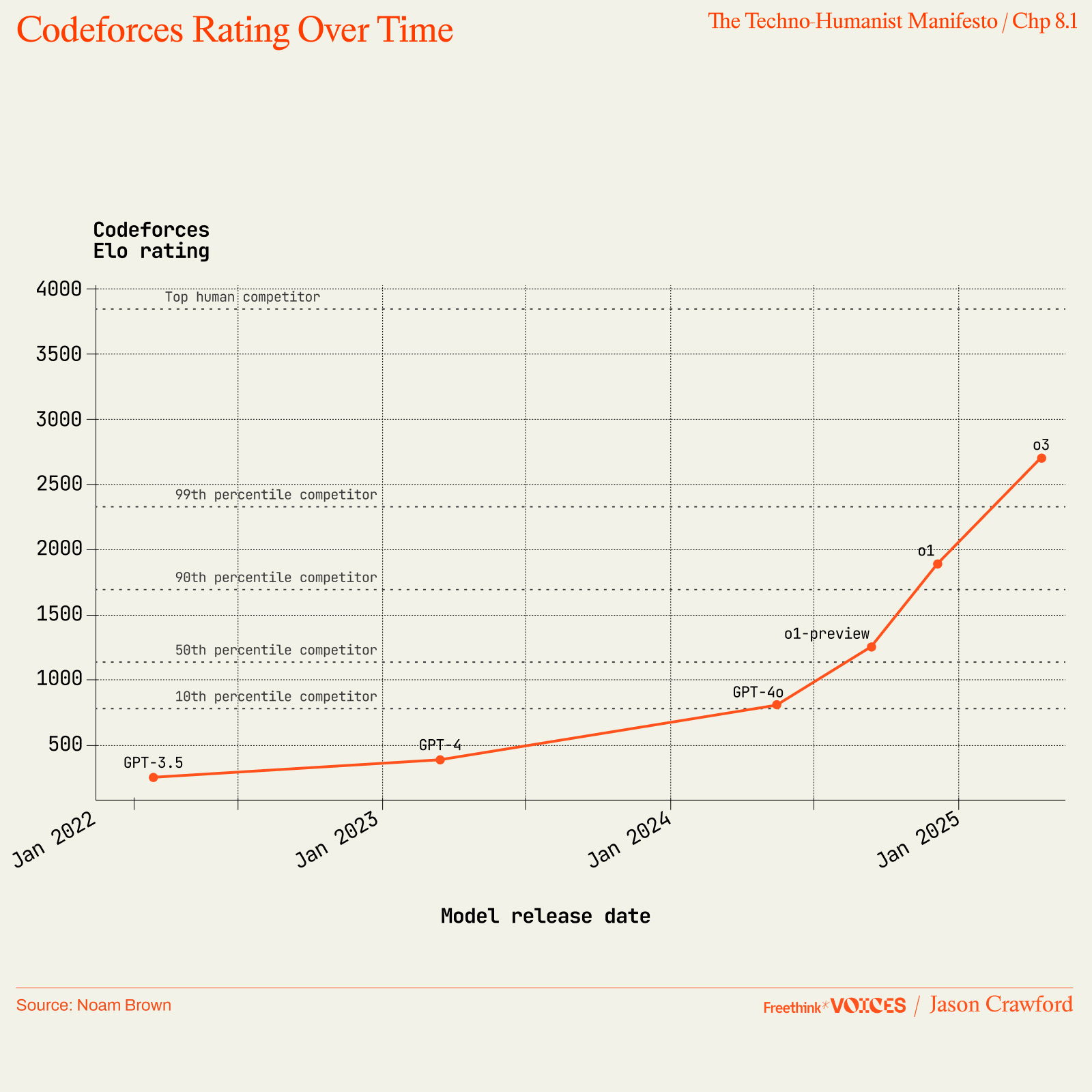

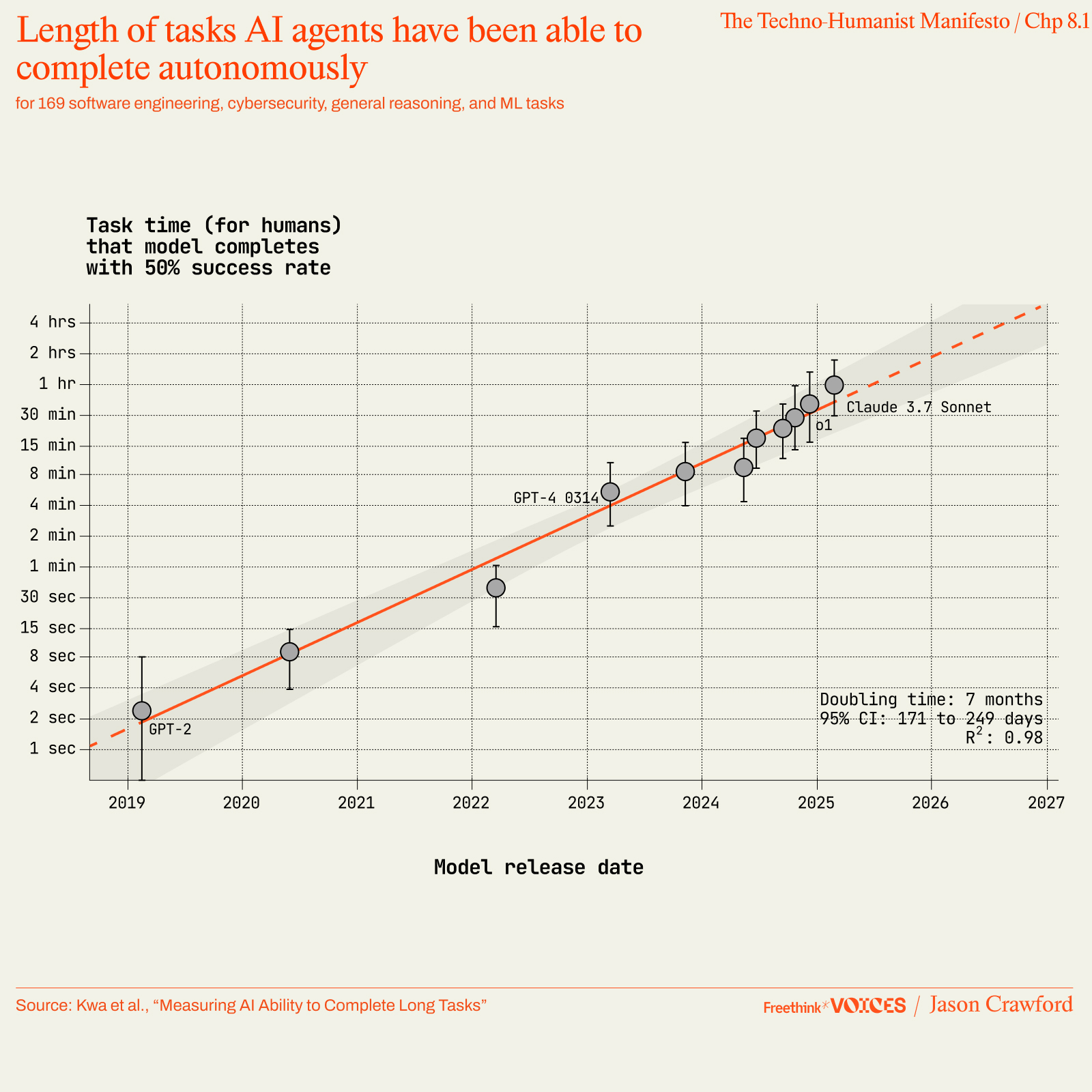

These are bold projections. To date, AI is far from fulfilling them—but it is improving extremely rapidly. In February 2019, GPT-2 was announced, and it was remarkable for being able to form grammatical sentences and coherent paragraphs.5 When GPT-3 came out in mid-2020, you could actually have a conversation with it.6 GPT-4, released in March 2023, scored around the 90th percentile on standardized tests such as the SAT and LSAT, and even passed the bar exam.7 On the Codeforces competitive-programming platform, GPT-4 ranked well below the 10th percentile of human competitors; GPT-4o was at the 10th percentile; o1-preview was above the 50th; o1 was well over the 90th; and o3 is above the 99th, at “grandmaster” level.8 A study from METR measured the length of technical tasks, such as software engineering, that AI models can complete with 50% reliability (with length measured by how long those tasks take human professionals), and found that this length is doubling every 7 months.9 As the authors put it: “Even if the absolute measurements are off by a factor of 10, the trend predicts that in under a decade we will see AI agents that can independently complete a large fraction of software tasks that currently take humans days or weeks.”
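
The METR extrapolation is simple exponential arithmetic: if the task-length horizon doubles every 7 months, growing it by a factor F takes 7 × log2(F) months. So a factor-of-10 error in the absolute level shifts the forecast by only about two years. The sketch below assumes the trend simply continues. The 1-hour starting horizon is a hypothetical figure chosen for illustration, not one given in the text.

```python
import math

DOUBLING_MONTHS = 7  # METR's reported doubling time for task length

def months_to_grow(factor: float, doubling_months: float = DOUBLING_MONTHS) -> float:
    """Months for the task-length horizon to grow by `factor`, if the trend holds."""
    return doubling_months * math.log2(factor)

# How much does a 10x measurement error shift the forecast?
print(f"10x error shifts the forecast by {months_to_grow(10):.1f} months")  # 23.3 months

# Hypothetical: from a 1-hour horizon to a 40-hour (one work week) task
print(f"1 hour -> 40 hours: {months_to_grow(40) / 12:.1f} years")  # 3.1 years
```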

I expect this progress to continue, especially given the massive investment now pouring into AI research and ventures. AI is attracting many of the most talented, driven and ambitious workers in Silicon Valley, and hundreds of billions of dollars of capital, including in massive buildouts of infrastructure such as GPUs and the energy to power them.10

It has already been said that “AI is hitting a wall,”11 but I predict that the walls will crumble before we run into them—although to anyone who has not internalized the lesson of Chapter 7, this will seem to happen unexpectedly, and mysteriously just in time. If we run out of training text, we’ll find more; if we can’t find more, we’ll develop models that learn more efficiently from data; if we approach the limits of efficiency, we’ll focus on other modalities such as video. If we run out of energy, we’ll develop more efficient chips; if we run out of chips, we’ll develop more efficient algorithms. When intuition plateaued, we developed “reasoning” models using chain-of-thought; when that plateaus, we will give them memory, or teach them to take notes, or to use the scientific method, or some other technique. We are problem-solving animals, and with this much money and talent at work, we’ll solve any problems that arise until AI reaches its full potential.

To see that potential, we don’t have to debate what counts as “general” intelligence (AGI), or whether “superintelligence” is even a coherent concept. We don’t have to assume that AI will be able to perform all human tasks—after all, industrial machines transformed the world a long time ago, and even now, hundreds of years in, they still can’t perform all the physical tasks done by humans, or even all the ones done by horses. All we have to do is observe that AI can already perform a wide variety of tasks—from writing code to drafting reports to creating images—and expect that it will continue to get better at these tasks and many others like them.

Automation will make this work dramatically cheaper and faster. Many tasks and projects that were once prohibitively expensive in time or money will become accessible: building custom software applications, summarizing feedback from large surveys, translating entire books. Services that are now a luxury for the wealthy—legal advice, specialist doctors, tax assistance, psychotherapy, business coaching, personal tutoring—will become a commodity for almost everyone.

The speed and always-on availability of AI services make possible a much faster mode of working. Iterations are measured not in business days, but in minutes. To think of a question and get the answer before you’ve even stopped wondering about it, or to have an idea and get a prototype of it before you’ve lost the moment of inspiration, is powerful.

AI will also be more precise, reliable, and consistent than human labor—just as machines are when performing physical tasks. This, too, will lead to a new mode of working. In human teams, much effort is spent training new employees up to baseline competency and then maintaining their performance through regular evaluations—and still, employee performance can be much more dependent on inherent talent than on training or feedback. In addition, employees are constantly turning over. All of this creates so much variance that it limits how much the training can be optimized in practice.

A team of AI workers will be different: they will all be copies of an already-trained model, which will execute that training consistently and never quit. This will let us experiment with AI workers—say, a fleet of customer service representatives, or math tutors, or medical advisors—in a way that is possible with factory machines, but impossible with human employees. We can iteratively improve the workers, testing each change against a standard benchmark, monitoring against performance metrics in the field, and keeping what works: a tunable workforce. Once a mistake is fixed in training, the workers will never make that mistake again, so quality ratchets upwards.
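
The "ratchet" described above works like a regression-gated release loop: a new version of the workers is kept only if it beats the current one on the benchmark. The sketch below is a minimal illustration that assumes a single scalar benchmark score. The `ratchet` and `benchmark` functions and the test-case names are hypothetical, not any real evaluation framework.

```python
from typing import Callable, TypeVar

Version = TypeVar("Version")

def ratchet(baseline: Version,
            candidates: list[Version],
            benchmark: Callable[[Version], float]) -> tuple[Version, float]:
    """Return the best version found, accepting a candidate only if it beats the current best."""
    best, best_score = baseline, benchmark(baseline)
    for candidate in candidates:
        score = benchmark(candidate)
        if score > best_score:  # regressions and ties are discarded, so quality never falls
            best, best_score = candidate, score
    return best, best_score

# Hypothetical benchmark: the share of known failure cases a version now handles correctly.
TEST_CASES = {"refund", "address change", "billing error", "cancellation"}

def benchmark(fixed_cases: frozenset) -> float:
    return len(fixed_cases & TEST_CASES) / len(TEST_CASES)

versions = [frozenset({"refund"}), frozenset(), frozenset({"refund", "billing error"})]
best, score = ratchet(frozenset(), versions, benchmark)
print(sorted(best), score)  # ['billing error', 'refund'] 0.5
```

The second candidate fixes nothing, so it is rejected. The "fixed" set never shrinks, which is the sense in which a fixed mistake stays fixed.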

AI services will also be far more dynamically scalable than human ones. As long as data centers have capacity, you’ll be able to spin up as much intelligence as you need, with no concern for all the problems of recruiting talent. When you’re done, you can stop using them, and stop paying for them, with no advance notice—and then restart them whenever you want, with no penalty or retraining. This is intelligence as a utility.

Faster, cheaper cognitive labor will be a boon to the economy at large, but most importantly, it will accelerate R&D. Recall from Chapter 7 that total factor productivity (TFP) is a key driver of economic growth, and that the resources going into R&D, especially the number of researchers, are a key driver of TFP. AI might thus not only make existing industries more efficient and scalable, but also help create entirely new industries based on new technologies and new science.

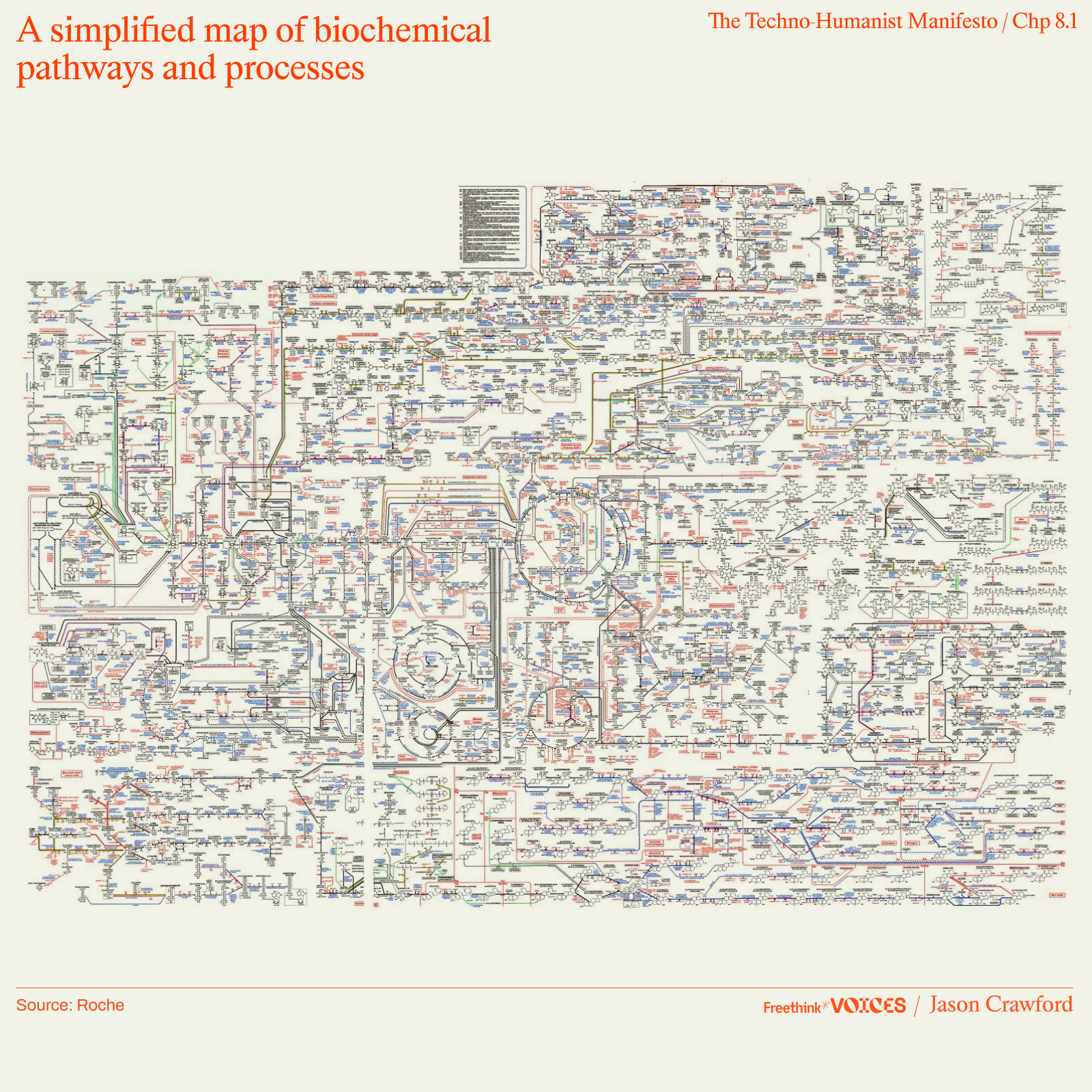

This potential is most evident in biotech. As described in Chapter 7, the space of small molecules, proteins, or genomes is unfathomably large. The mechanisms and behavior of biological systems, even single cells, are so complex as to virtually defy description.12 Already, researchers at the Arc Institute are training AI models to learn the patterns of DNA and to predict the behavior of cells; longevity startup New Limit is using AI to predict the effect of combinations of transcription factors on cellular aging and to prioritize experiments; and the research institute Future House is developing a set of AI agents that can review scientific literature, propose experiments, and analyze data.13

AI researcher Jacob Steinhardt has suggested that models trained on massive scientific data sets, such as astronomical images or DNA sequences, “might understand stars and genes much better than we do,” giving them “unintuitive capabilities … such as designing novel proteins.”14 But AI can help accelerate research even if this never happens. A lot of invention comes not from sudden flashes of insight or deduction from theory, but from tinkering with possibilities at the edges of the known: Edison’s lab testing thousands of materials for the filament of the light bulb, Selman Waksman’s process screening over ten thousand strains of microbes to find streptomycin and other antibiotics, Carl Bosch’s chemical company testing tens of thousands of materials as catalysts for the Haber-Bosch nitrogen fixation process.15 AI systems can accelerate science and technology simply by making it fast, cheap, and easy to do literature reviews, run simulations, or test algorithms at large scale, and someday (via roboticized labs) to do real-world experiments.

So if you are skeptical of “superintelligence,” think instead of cheap, abundant, reliable, scalable intelligence—a transformation analogous to what we went through with energy and physical work in the Industrial Revolution. In such a world, we’ll be able to “waste” enormous amounts of intelligence on minor tasks, just as today we “waste” enormous amounts of energy and machinery and raw materials to produce disposable containers for food or plastic trinkets for children. We’ll use “overqualified” AI for mundane activities, such that average people will be able to enjoy the sort of first-class service previously available only to the very wealthy. When you want a nutritionist, interior decorator, investment advisor, or math tutor, you’ll hire the equivalent of a world-class expert, for an affordable price; when talking to sales or customer service, it will feel as if you got escalated to the CEO for special handling.

To extend the Industrial Revolution analogy: A human laborer exerts about 100 watts of muscle power.16 US per-capita energy consumption is roughly 10 kilowatts, or about 100x a human—the energy equivalent of 100 servants performing manual labor night and day on behalf of every man, woman and child.17 To imagine the intelligence age, add to this 100 assistants performing cognitive labor for every human—managing our affairs, prototyping our ideas, prioritizing our correspondence, researching our questions. A human brain has been estimated at about a petaflop/s of computational power.18 Current installed computing power from NVIDIA chips alone has been estimated at 3.9 million petaflop/s as of Q4 2024, and is doubling every ten months.19 At that rate, the US will reach the 100-to-1 ratio (100 brain-equivalents of computing power per person) in roughly 2037.20
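
The arithmetic in this paragraph can be roughly reproduced as follows. The inputs are the figures quoted above plus footnote 20's 40% US share. The US population of about 340 million is an added assumption that the text does not state. Treat the result as a back-of-the-envelope check, not the author's exact calculation.

```python
import math

# Energy side: how many "energy servants" per person?
HUMAN_MUSCLE_WATTS = 100       # ~100 W of sustained muscle power
US_PER_CAPITA_WATTS = 10_000   # ~10 kW per capita
energy_servants = US_PER_CAPITA_WATTS / HUMAN_MUSCLE_WATTS

# Compute side: figures quoted in the text and footnotes
BRAIN_PFLOPS = 1.0             # ~1 petaflop/s per human brain
NVIDIA_STOCK_PFLOPS = 3.9e6    # installed NVIDIA compute, Q4 2024
US_SHARE = 0.40                # footnote 20's assumption
DOUBLING_MONTHS = 10
ASSISTANTS_PER_PERSON = 100
US_POPULATION = 340e6          # ASSUMPTION: approximate US population, not from the text

def years_to_parity() -> float:
    """Years after Q4 2024 until US compute equals 100 brain-equivalents per person."""
    needed = US_POPULATION * ASSISTANTS_PER_PERSON * BRAIN_PFLOPS
    current_us = NVIDIA_STOCK_PFLOPS * US_SHARE
    doublings = math.log2(needed / current_us)
    return doublings * DOUBLING_MONTHS / 12

print(f"{energy_servants:.0f} energy servants per person")  # 100
print(f"about {years_to_parity():.0f} years after Q4 2024")  # about 12, i.e. around 2037
```

About 14.4 doublings are needed, which at ten months each comes to roughly twelve years.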

The transition, however, will be more complex than simply scaling up data centers. As Dario Amodei has suggested, AI’s ability to drive productivity will be limited by the need for data, the slowness of the physical world, the intrinsic complexity of certain problems, and social constraints. Not all tasks have the same “marginal returns to intelligence.”21

Given these limitations, how fast might the transition be? Agriculture took thousands of years to spread: it advanced at about one kilometer per year.22 Industry took centuries: the steam engine was invented in the early 1700s, but horses were still the main way to plow fields or pull carriages until the early 1900s.23 Any transition from one age to the next begins in the earlier age, using the technologies of that age, including the technologies that drive the transition. So for now, the AI transition is happening at the speed of humans: we still must adapt AI to each new function, and must start to reimagine our processes and organizations with AI in mind. Thus reaching the point where AI has transformed most sectors of the economy will probably take decades.

A new economic mode can change the crucial factors of production. In the agricultural age, land was a limiting factor. In the industrial age, production was driven by labor and capital, and no longer limited by land. In the intelligence age, perhaps production will be driven by capital alone, no longer limited by labor. In economic models, if you remove labor from the production function, then output becomes simply “AK”, that is, TFP times capital. Such a model grows unconstrained by population or any biological limits. The pattern of acceleration then continues unless and until we hit the best technology allowed by the laws of physics, the greatest possible TFP. At that point there is no more R&D left, and acceleration (increasing growth rate) stops, but the economy still grows exponentially (at a constant growth rate) as long as it can accumulate capital. As Chad Jones puts it, “this result sounds like the plot of a science fiction novel: Machines invent all possible ideas, leading to a maximum productivity, and then they fill the universe rearranging matter and energy to exponentially expand the size of the artificial intelligence.”24
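
The AK argument can be written out in a few lines. This is a sketch using the textbook AK model. The savings rate $s$ and depreciation rate $\delta$ are standard ingredients of that model that the text does not mention, so this illustrates the logic rather than reproducing Jones's exact formulation.

$$
Y = AK, \qquad \dot{K} = sY - \delta K = (sA - \delta)\,K
$$

$$
g_Y \equiv \frac{\dot{Y}}{Y} = \frac{\dot{A}}{A} + \frac{\dot{K}}{K} = \frac{\dot{A}}{A} + sA - \delta
$$

In this sketch, while R&D keeps raising $A$, the capital term $sA - \delta$ keeps rising, so growth accelerates. Once $A$ reaches its physical maximum $A_{\max}$, $\dot{A} = 0$ and $g_Y = sA_{\max} - \delta$ is constant. This gives $Y(t) = Y(0)\,e^{(sA_{\max} - \delta)t}$: exponential growth, with no labor term anywhere to cap it.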

A fourth age would thus mean the continued acceleration of economic growth, quickly leading to wealth beyond our wildest dreams. But would it be a utopia—or a dystopia?

Work is not merely toil. It is a source of meaning, identity, and power. If AI does all the work, will we lose those things? Even if not, will we simply be excruciatingly bored? What will people do in the intelligence age?

One dystopian vision of the future is the world of WALL-E, where the humans are fat, lazy, stupid, and complacent, while the AI nannies them, distracts them, and hides the truth about their situation. Put another way, the grand potential of AI is that it magnifies human agency—but the risk is that we instead cede agency to AI: letting it think for us, decide for us, live for us. This risk was articulated in a paper titled “Gradual Disempowerment,” which worries that humans may become “unable to meaningfully command resources or influence outcomes” in the world, to the point where “even basic self-preservation and sustenance may become unfeasible.”25

But if we get AI right, it will instead lead to accelerating empowerment. It will enable people to learn any subject, start any business, and realize any vision, limited only by motivation and will. It will greatly leverage human creativity and judgment—by making it cheaper, faster, and more reliable to bring ideas into reality. If you think it would be amazing to see Sherlock Holmes set in medieval Japan, or Beowulf done as a Hamilton-style hip hop musical, AI will help you create it. If you think someone should really write a history of the catalytic converter in prose worthy of The New Yorker, AI will draft it. If you think there’s a market for a new social media app where all posts are in iambic pentameter, AI will design and code the beta. If you want a kitchen gadget that combines a corkscrew with a lemon zester, AI will create the CAD files, and you can send them to a lights-out factory to deliver a prototype.26

With AI to assist in every task, humans will step up a level, into management. A software engineer becomes a tech lead of a virtual team. A writer becomes an editor of a staff of virtual journalists. A researcher becomes the head of a lab of virtual scientists. Lawyers, accountants, and other professionals spend their time overseeing, directing, and correcting work rather than doing the first draft.

What happens when the AI is good enough to be the tech lead, the editor, the lab head? A few steps up the management hierarchy is the CEO. AI will empower many more people to start businesses.

Most people today aren’t suited to being CEOs, but the job of CEO will become much more accessible, because it will require less skill. You won’t have to recruit candidates or evaluate them; you won’t have to motivate or inspire them; you won’t have to train junior employees; you’ll never catch them slacking off; you’ll never have to work around the vacations or sick days that they won’t be taking; you’ll never have to deal with low morale or someone who is sore they didn’t get a promotion; you’ll never have to mediate disputes among them or defuse office politics; you’ll never have to give them performance reviews or negotiate raises; and you’ll never have to replace them, because they’ll never quit. They’ll just work competently, diligently, and conscientiously, doing whatever you ask. They’ll be every manager’s dream employee. Running a team of virtual agents will make managing humans look like herding cats.

AI employees will also be cheap, which means that the capital requirements of many new businesses will be much lower—and with tons of surplus wealth being tossed off by the increasingly automated economy, starting up will become much easier. Many businesses will be started that seem non-viable today, addressing niche markets that can’t support a human team, but can support an AI team. An even longer tail of projects will be possible that don’t even rise to the level of businesses: projects that today cost millions, such as movies or apps, will be done by individuals on the side using their spare time and cash. When any idea you have can be made real, the qualities that will be at a premium are taste, judgment, vision, and courage.

So, what will people do in the intelligence age? Anything we want.

What happens when the AI is even good enough to be the CEO? There is a level of management above the CEO: governance. The board of directors. Even if and when humans no longer have to work, we will still be owners, and our role will be to formulate our goals, communicate them, and evaluate whether they’re being achieved. Humanity will be the board of directors for the economy and the world.

But we must actually be managing or governing—not just along for the ride. We must cast ourselves in the role of decision-makers, with AIs as expert advisors, and we should take responsibility for ultimate decisions, as good managers do. We should question and push back on AIs, making them justify their advice. We should verify facts for ourselves, diving deep into details. We should constantly evaluate our AIs, and when necessary fire them and replace them with different models.

We should require periodic reports and reviews of AIs acting autonomously, and subject them to regular audits. We should empower AI agents to be whistleblowers when given a dangerous or unethical task, whether by a human or by a manager AI. And ultimately, we will rely on law enforcement to stop rogue actors—whether humans using AI, or AIs acting alone.

One concern is that it will become impossible to manage the enormous throughput of AI activity, the accelerated pace of change, and the growing complexity of the world. “Gradual Disempowerment” worries that “AI labor will likely occur on a scale that is far too fast, large and complex for humans to oversee,” that “the complexity of AI-driven economic systems might exceed human comprehension,” that “AI decision-making processes might be too complex for meaningful human review,” and that “the legal and regulatory framework might evolve to become not just complex but incomprehensible to humans.” In this, the paper echoes Alvin Toffler’s worries from 1965 that we noted in Chapter 2: “Change is avalanching down upon our heads … Such massive changes, coming with increasing velocity, will disorient, bewilder, and crush many people.”27 But as I argued in that chapter, technology not only accelerates change, it also helps us deal with change. And AI is perfectly suited to helping us deal with the changes accelerated by AI.

AI can help us gather and digest information, monitor events and situations, make predictions, model threats, and run scenarios. It can help us comprehend and navigate the legal system—indeed, it will democratize this ability, since the legal system is already incomprehensible and unnavigable unless you are a trained professional or wealthy enough to hire one.

AIs can provide checks and balances on other AIs. If one AI system writes code, another can run a suite of tests. If one creates an engineering design, another can simulate it to find flaws. If one produces a report, another can check its calculations, verify that its references support its statements, compare its claims to historical data, or search for contradictory claims in the literature. Multiple AIs can be tasked with answering the same question, or one AI can propose a plan and another can critique it—and then they can all debate their answers, in front of a panel of AI judges. All of this will be quick and cheap, which means we will do much more of it, even in situations where it doesn’t seem worth it today to go to the trouble—say, automatically verifying every reference in every published paper or book.

An implication of this vision of the future is that we will need AI model diversity. The more we rely on AIs to provide checks and balances on other AIs, the more there is a risk of collusion, which would be magnified if all AIs are variations on the same model. An AI may decide not to blow the whistle on a copy of itself, or to fail a copy of itself in an audit. In such a world, a single dominant model becomes a single point of failure.
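
A toy calculation shows why model diversity matters when AIs check other AIs. Assume, purely for illustration, that each judge independently gives a wrong verdict with probability 0.2, and that the panel decides by majority vote. Independence is the key assumption. Copies of one model tend to share the same blind spots, and if their errors are perfectly correlated, the panel is no better than a single judge.

```python
from math import comb

def majority_error(n_judges: int, p_wrong: float) -> float:
    """Probability that a majority of n_judges (odd) is wrong, assuming independent errors."""
    k_min = n_judges // 2 + 1
    return sum(comb(n_judges, k) * p_wrong**k * (1 - p_wrong)**(n_judges - k)
               for k in range(k_min, n_judges + 1))

P_WRONG = 0.2  # illustrative per-judge error rate, not a measured figure
print(f"1 judge:                       {majority_error(1, P_WRONG):.4f}")  # 0.2000
print(f"5 independent judges:          {majority_error(5, P_WRONG):.4f}")  # 0.0579
# Five copies of one model whose errors are perfectly correlated vote as a single judge:
print(f"5 perfectly correlated copies: {P_WRONG:.4f}")  # 0.2000
```

Adding judges helps only to the extent that their errors are independent. That is the quantitative version of the single-point-of-failure worry.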

A future in which AI magnifies human agency is not automatic or inevitable. We must build for agency. In education, for instance, AI can either help or hurt learning, depending on how it is used. Wharton professor Ethan Mollick reports that when students use ChatGPT to do homework or write essays without guidance or special prompting, the AI ends up doing the work for them; as a result, they learn less and score lower on exams. But “when used with teacher guidance and good prompting based on sound pedagogical principles, AI can greatly improve learning outcomes,” as has been found in a variety of classroom studies from Harvard and Stanford to Malaysia and Nigeria. The problem is that “even honest attempts to use AI for help can backfire because the default mode of AI is to do the work for you, not with you.”28

So those who create AI systems, and who integrate them into the economy and society, must design them for humans who will be active learners, managers and governors, not passive followers or consumers. Otherwise, we may well be disempowered—gradually, or suddenly.

Stepping up to this challenge is worth it. The reward is a new golden age.

It may feel surreal to think that we will live through a transition to a new age of humanity, on the level of the first agricultural revolution, or the Industrial Revolution. What is even more surreal is to think that we may live through more than one.

The stone age lasted several tens of thousands of years (or even longer, depending on how you count).29 The agricultural age lasted about ten thousand years. The industrial age, so far, has lasted less than three hundred years. Each age runs its course faster than the last—dramatically faster.30 Will the length of the intelligence age be measured in decades? And what could possibly come after it?

In Chapter 6, we saw that the growth rate of world GDP is not constant, but increases over time. The simplest model that fits the data is that the growth rate is proportional to the size of the economy itself. But where a constant growth rate would produce an exponential growth curve, a growth rate that increases with the size of the economy produces a hyperbolic growth curve—that is, a curve with a vertical asymptote, one that goes to infinity in finite time.31
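
The step from "growth rate proportional to size" to "infinite in finite time" can be made explicit with a one-line differential equation. This is the simplest deterministic version, with a constant $a$ introduced for illustration. Roodman's fitted model is more general, so treat this only as an illustration of the mechanism.

$$
\frac{1}{Y}\frac{dY}{dt} = aY
\;\Longrightarrow\;
\frac{dY}{Y^{2}} = a\,dt
\;\Longrightarrow\;
\frac{1}{Y_0} - \frac{1}{Y(t)} = a t
\;\Longrightarrow\;
Y(t) = \frac{Y_0}{1 - aY_0 t}
$$

The denominator reaches zero at $t^{*} = 1/(aY_0)$, so output diverges at a finite date. By contrast, a constant growth rate $g$ gives $Y(t) = Y_0 e^{gt}$, which stays finite for every $t$.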

Infinite growth is impossible, so at some point this model has to fall apart: one of its assumptions has to break. Perhaps there is some maximum level of technology, past which growth can’t accelerate; perhaps there are limits to capital set by the amount of matter and energy in the galaxy; or perhaps there is some other limit we can’t even conceive of now. But the acceleration may not stop until we hit these ultimate limits.

To be continued in Chapter 8, Section 2.

Parts of this essay were adapted from “The future of humanity is in management.”

1: Jovanovic and Rousseau, “General Purpose Technologies.”

2: “The Breakthroughs Needed for AGI Have Already Been Made.”

3: Amodei, “Machines of Loving Grace.”

4: Altman, “The Intelligence Age.”

5: “Better Language Models and Their Implications.”

6: Brown et al., “Language Models are Few-Shot Learners.”

7: “GPT-4”; Katz et al., “GPT-4 Passes the Bar Exam.”

8: Brown, “Codeforces Rating Over Time.” For Codeforces’ level naming, see EbTech, “How to Interpret Contest Ratings.”

9: Kwa et al., “Measuring AI Ability to Complete Long Tasks.” The caption on the figure below says: “The length of tasks (measured by how long they take human professionals) that generalist autonomous frontier model agents can complete with 50% reliability has been doubling approximately every 7 months for the last 6 years…. The shaded region represents 95% CI calculated by hierarchical bootstrap over task families, tasks, and task attempts.” If an 80% reliability standard is chosen instead, the task length is reduced by a factor of 5, but the doubling trend is the same.

10: Tong and Cai, “OpenAI, Google and xAI Battle for Superstar AI Talent”; “Announcing the Stargate Project”; OWID, “Annual Private Investment in Artificial Intelligence.”

11: Morrow, “AI is hitting a wall just as the hype around it reaches the stratosphere.”

12: One cogent essay argues that biology is so complex that realizing the potential for biotech will require “discarding the reductionist, human-legibility-centric research ethos underlying current biomedical research … in favor of a purely control-centric ethos based on machine learning” (“A Future History of Biomedical Progress”).

13: Brixi et al., “Genome Modeling and Design Across All Domains of Life”; Adduri et al., “Predicting Cellular Responses to Perturbation Across Diverse Contexts with State”; Kimmel, “January//February 2025 Progress Update”; Ghareeb et al., “Robin: A Multi-Agent System for Automating Scientific Discovery.”

14: Steinhardt, “What Will GPT-2030 Look Like?”

15: Dyer, Edison, His Life and Inventions, Vol 1, 262, and Vol 2, 605; Ribeiro da Cunha et al., “Antibiotic Discovery: Where Have We Come From, Where Do We Go?”; Hager, Alchemy of Air, 111.

16: Smil, Energy in Nature and Society, 179.

17: I heard this comparison via Alex Epstein, but the concept goes back at least to Buckminster Fuller in the 1940s: Marks, The Dymaxion World of Buckminster Fuller, 52–3.

18: Carlsmith, “How Much Computational Power Does It Take to Match the Human Brain?”

19: “The stock of computing power from NVIDIA chips is doubling every 10 months.”

20: Assuming the US is ~40% of total AI compute usage. This doesn’t include other types of chips, such as Google TPUs, for which there is less data available on the installed base; adding that additional compute would only bring the date closer.

21: Amodei, “Machines of Loving Grace.”

22: Mazoyer and Roudart, A History of World Agriculture, 89.

23: Crawford, “Big Tech Transitions are Slow.”

24: Jones, “The Past and Future of Economic Growth.”

25: Kulveit et al., “Gradual Disempowerment.”

26: Although I was attempting to be original with these ideas, it turns out that Sherlock in Japan and hip-hop Beowulf have already been done (Donoghue, “Sherlock Holmes: A Scandal in Japan by Keisuke Matsuoka”; Salzburg, “Old English meets ‘Hamilton’ in a new ‘Beowulf’ translation”). The iambic pentameter social network and the corkscrew lemon zester are evidently ideas so bad that no one has built them yet—or so non-obviously good that we’ll have to wait for AGI to try them out.

27: Toffler, “The Future as a Way of Life.”

28: Mollick, “Against ‘Brain Damage.’”

29: “Behaviorally modern humans” have existed for at least 50,000 years; “anatomically modern humans” for over 300,000; and hominins able to use stone tools for over 3,000,000: Klein, “Anatomy, Behavior, and Modern Human Origins”; Schlebusch et al., “Southern African Ancient Genomes Estimate Modern Human Divergence to 350,000 to 260,000 Years Ago”; and Encyclopaedia Britannica, “Stone Age.”

30: For an interesting curve-fitting exercise relevant to this point, see Hanson, “Long-Term Growth as a Sequence of Exponential Modes,” which fits the last two million years of world GDP to a series of three exponential modes. “Each mode grew world product by a factor of a few hundred, and grew a hundred times faster than its predecessor.”

31: Roodman, “Modeling the Human Trajectory.”