It’s smaller than a shutterbug: a micro camera the size of a grain of salt can take sharp, full-color photos, comparable to the images produced by standard cameras more than 500,000 times larger.

The secret lies in the camera’s metasurface: a covering of over a million “eyes,” each roughly the size of an HIV particle.

The micro camera could be used to improve in-body and brain imaging, autonomous driving, robotics, and other fields where computer vision plays an important role.

How micro cameras work: Micro cameras using metasurfaces already exist — but their image quality is hamstrung by a little something called physics.

A regular camera uses an array of lenses to bend light into focus, creating the sharp images it captures. But the camera has to be big enough to support those lenses.

One way to shrink a camera is to use “meta-optics,” which rely on incredibly small nanostructures that capture and re-emit light. Working together, these structures bend incoming light and focus it onto a sensor, which then turns it into a signal a computer can understand. Think of them as tiny antennas, Nerdist explains.


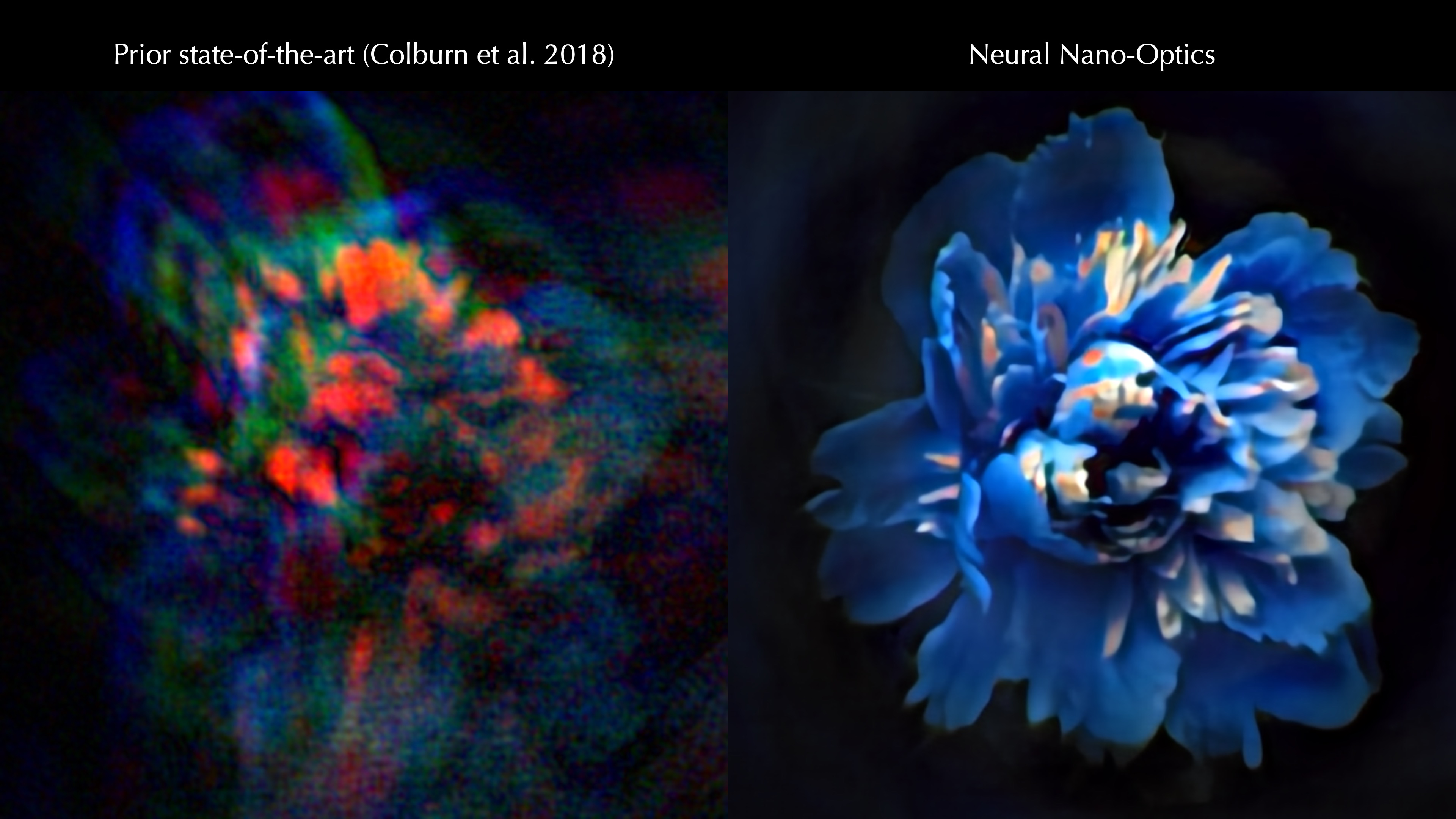

Micro cameras have previously been made using this meta-optics approach, but the results have been fairly lackluster thus far, Newsweek reports — images look like colored smears seen through cataracts, and the cameras can suffer from a narrow field of view.

For capturing images with a wide field of view, “it’s challenging because there are millions of these little microstructures, and it’s not clear how to design them in an optimal way,” Princeton PhD student and study co-author Ethan Tseng said.

Optimal optics: To overcome these issues, the Princeton micro camera team turned to science’s Swiss Army knife: machine learning. University of Washington researcher and study co-lead Shane Colburn created a computational model that could test configurations of the tiny antennas on the micro camera’s metasurface. Because there are sooo many antennas and their interactions with light are complex, this kind of simulation can require “massive amounts of memory and time,” Colburn said in the release.

By combining the physical properties of their micro camera with this algorithmic optimization, the team produced images that are a considerable step up from the previous cutting edge.

While the edges get a little blurred, a flower indeed looks like a flower; according to the researchers, the micro camera’s field of view and image quality set new high-water marks.

“Although the approach to optical design is not new, this is the first system that uses a surface optical technology in the front end and neural-based processing in the back,” Joseph Mait, former chief scientist at the U.S. Army Research Laboratory, said in the release. Mait was not involved in the study.

The big picture: The researchers believe their micro cameras could be dusted across a surface, turning almost anything into a camera. (Perhaps not the most comforting thought, as Nerdist points out.)

“We could turn individual surfaces into cameras that have ultra-high resolution, so you wouldn’t need three cameras on the back of your phone anymore, but the whole back of your phone would become one giant camera,” Princeton computer science professor and study senior author Felix Heide said in the release.

The micro cameras could improve brain imaging, spot problems in the body, and enhance the sensing ability of tiny robots.

They could also enhance current endoscopic surgical methods, in which tiny tools, including a camera, are used to perform surgeries as noninvasively as possible.

“We can think of completely different ways to build devices in the future,” Heide said.

We’d love to hear from you! If you have a comment about this article or a tip for a future Freethink story, please email us.