This article is an installment of Future Explored, a weekly guide to world-changing technology.

Patient outcomes are almost always better when a disease is diagnosed and treated early, but some illnesses don’t trigger symptoms until a patient is already really sick — ovarian cancer, for example, can go undetected for 10 years or more, giving it time to spread to other organs.

By screening healthy patients for these sneaky diseases, doctors can spot them earlier — and new artificial intelligence (AI) tools promise to help in the hunt.

Cardiovascular diseases

The challenge: Cardiovascular diseases (CVDs) kill nearly 18 million people every year, making them the leading cause of death worldwide.

If a doctor knows their patient is at high risk of developing or dying from a CVD, they can recommend medications or lifestyle changes to lower the risk, but people with these diseases often feel fine until they have a heart attack or stroke.

The status quo: Doctors can predict CVD risk using tools such as the Framingham Risk Scores framework, but one of the inputs required is cholesterol level. That means patients have to go to a clinic to have blood drawn — nobody loves that, and around a quarter of adults admit they’re afraid of needles.

Previous research suggests that the width of the veins and arteries in the retina at the back of the eye can serve as an early indicator of CVD, but experts must analyze the images, meaning there isn’t a simple way to use the information to help a lot of patients.

The AI: For a newly published study, UK researchers trained an AI to predict a patient’s risk of developing CVD based on retinal images and risk factors such as age, tobacco use, and medical history — no new blood work required.

To validate their AI-based model — QUARTZ, or “QUantitative Analysis of Retinal vessels Topology and siZe” — they predicted the CVD risk of nearly 6,000 people (average age 67) involved in a UK study tracking long-term health.

The researchers followed the participants for an average of 9.1 years and found that their AI was able to predict CVD risk about as accurately as the widely used Framingham framework.

Looking ahead: The participants in the UK study were predominantly white and had healthier lifestyles than average for their location and age, so the AI still needs to be validated on a wider population.

And, while retinal imaging is common and low cost, it does require specialized equipment, typically operated by an eye doctor. Cardiologists and GPs will need to figure out the best way to bring this kind of imaging into their workflows.

However, research into the use of smartphone cameras for retinal imaging could streamline the process — this new AI could one day be incorporated into an app that lets people assess their CVD risk without undergoing any invasive testing or even leaving their homes.

Breast cancer

The challenge: Breast cancer is the most common cancer in the world, but thanks to effective treatments, it’s not one of the most deadly — while survival rates vary by location, 90% of patients in high-income nations survive 5 or more years after diagnosis.

Early detection is key to those positive outcomes. If the cancer is detected while still localized to the breast, the 5-year survival rate in the US is 99%, but if it has already spread to distant parts of the body, such as the lungs or liver, the rate drops to just 29%.

The status quo: If a person is at above-average risk of developing breast cancer, their doctor might recommend that they get a breast MRI, along with regular mammograms (the standard screening tool for breast cancer).

Because MRIs are more sensitive, they can pick up on suspicious lesions that a mammogram might miss. This sensitivity also leads to a lot of false positives, which are usually only identified after the patient undergoes an invasive tissue biopsy.

The AI: Researchers at NYU and Jagiellonian University in Poland used 20,000 breast MRI scans to train an AI to predict a patient’s likelihood of having breast cancer based on the images.

Once it was trained, the AI was able to predict cancer from MRI scans with about the same accuracy as a panel of five human radiologists.

When the researchers tasked the AI with analyzing scans of BI-RADS category 4 lesions — ones deemed suspicious enough that biopsies should be considered — they found that its recommendations would have reduced unnecessary biopsies for up to 20% of patients.

Looking ahead: White patients accounted for nearly 70% of the MRI scans used to train the AI and for most of those used to test it, so more research is needed to ensure it’s as accurate at predicting breast cancer probability in scans from non-white patients.

If those studies find the AI is effective across the population, it has the potential not only to reduce unnecessary biopsies, but also to help more breast cancer patients get diagnosed.

That’s because the high rate of false positives is a major reason MRI scans aren’t recommended for the general population — if an AI can reduce that rate, the scans could become a more common screening tool, helping more patients achieve earlier diagnoses.

Colorectal cancer

The challenge: Colorectal cancer is the third most common cancer globally and the second most deadly — only lung cancer kills more people.

It starts with the formation of tiny growths, called polyps, on the inner lining of the colon or rectum. Polyps that can become cancerous over time are called adenomas, and if they aren’t removed, the cancer can spread beyond the bowel.

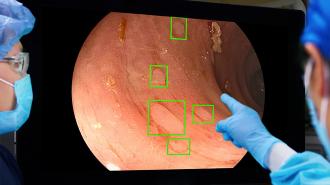

The status quo: The most sensitive screening test for colorectal cancer is a colonoscopy, a procedure in which a doctor inserts a camera on a thin, flexible tube into the bowel so that they can scan it for polyps.

Doctors can often remove any polyps they spot during the colonoscopy, but an estimated 25% of the growths are missed during the procedure — and a tiny missed polyp can turn into a big problem by the time a person undergoes another screening.

Polyps were missed in half as many patients when the AI was used during the initial colonoscopy.

The AI: Health tech company Medtronic used images of 13 million polyps to train an AI called GI Genius to spot them in a colonoscopy camera’s feed — in real time.

They tested the AI by having 230 patients in the US, UK, and Italy undergo two colonoscopies in a single day: in one colonoscopy, the doctor worked alone, and during the other, the AI helped them spot polyps.

When the AI-assisted colonoscopy came first, the doctor working alone spotted missed polyps in only 15.5% of patients during the second colonoscopy. When the unassisted doctor went first, though, missed polyps were found in 32.4% of patients during the AI-assisted procedure.
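As a rough check on those two figures, the short Python sketch below compares them directly. The percentages are the ones reported above; the variable names and the `relative_reduction` helper are made up for illustration and are not part of the study or of GI Genius.

```python
# Share of patients in whom the second colonoscopy found polyps that the
# first one had missed (percentages as reported in the article).
missed_after_ai_first = 15.5      # AI-assisted colonoscopy came first
missed_after_doctor_first = 32.4  # unassisted colonoscopy came first


def relative_reduction(baseline, treated):
    """Fractional drop from baseline to treated (hypothetical helper)."""
    return (baseline - treated) / baseline


ratio = missed_after_ai_first / missed_after_doctor_first
reduction = relative_reduction(missed_after_doctor_first, missed_after_ai_first)

print(f"Miss-rate ratio: {ratio:.2f}")         # prints "Miss-rate ratio: 0.48"
print(f"Relative reduction: {reduction:.0%}")  # prints "Relative reduction: 52%"
```

In other words, polyps were missed in roughly half as many patients when the AI assisted with the first colonoscopy.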

Looking ahead: Unlike the previously mentioned AIs, GI Genius isn’t going to save anyone from undergoing an invasive procedure — colonoscopies simply are our best line of defense against colorectal cancer — but it could, at least, help make sure those procedures are less likely to miss crucial signs of cancer.

GI Genius also differs in that it’s already being used to help patients, outside of trials — the FDA gave Medtronic permission to begin marketing the AI as a way to help detect colorectal cancer in April 2021, and it can now be found at many clinics across the US.

Medtronic has also teamed up with the American Society for Gastrointestinal Endoscopy and Amazon Web Services (AWS) to distribute 50 GI Genius systems to healthcare facilities in low-income and underserved areas, helping ensure more equitable access to the potentially life-saving AI.
