Ask any parent, and they’ll tell you that one of the biggest challenges they face, if not the biggest, is trying to decipher a newborn’s anguished cries in the middle of the night. Is the baby hungry? Does she have an upset stomach, or is she in the throes of colic? Maybe the poor girl needs to poop?

The only thing worse than feeling helpless in the face of such unreadable emotions is trying everything and still having no idea what’s wrong or how to ease the baby’s discomfort. That dilemma, combined with a lack of quality sleep, can easily slide into feelings of inadequacy, frustration, and depression.

To give parents peace of mind and a more restful night’s sleep, the startup Cappella has assembled a team of engineers from MIT, Berkeley, and Stanford to answer those inscrutable midnight questions. The team developed an app that detects a baby’s sounds and translates them so parents know what’s wrong. And the app runs on technology that’s already available in most U.S. homes: the smartphone.

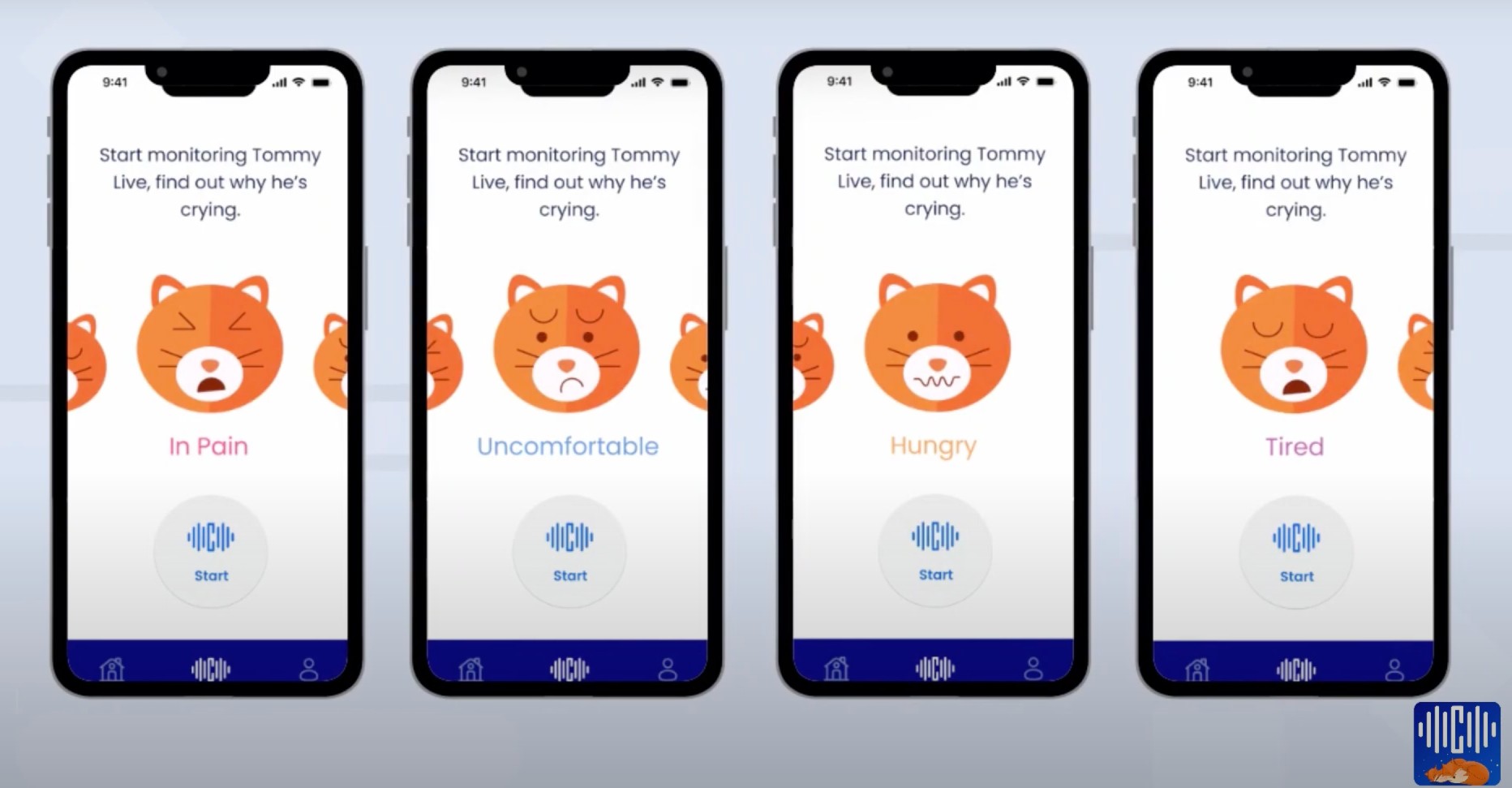

“The app basically replaces your traditional baby monitor,” Apolline Deroche, the founder and CEO of Cappella, told Freethink at CES 2024. “It does so much more as well, and the most important feature we have is that we can translate baby cries.”

The app works as a dual-phone system. One phone is left near the baby’s crib, and the other stays with the parents. When the baby makes noise, the app uses AI to analyze the sounds and determine whether the little one is hungry, sleepy, uncomfortable, or even in pain. It then sends the parent a notification explaining what the baby is likely feeling, along with personalized advice for addressing the problem.

According to Deroche, Cappella partnered with hospitals to train the app’s machine learning model. The startup collected samples of baby cries, and doctors and nurses labeled the cries based on context, known information, and visual cues. For instance, if a nurse responds to a baby’s cry, sees the baby sucking her fingers, and knows it’s close to feeding time, the nurse would label the cry as “hungry” rather than, say, “gassy.”

The AI model was trained on large amounts of this labeled data, learning for itself how to sort the cries into categories. When asked what the AI is looking for specifically, Deroche noted: “There is no real rule. It’s not like we’re looking at pitch or frequency. We’re not looking at anything specifically because we’re training models that figure out how to classify the cries themselves.”

When it came time to test the AI on baby cries that weren’t part of the training data, it excelled. Deroche said it could decipher baby sounds more accurately than the average person and could even outperform professionals.

In addition to translating baby babble, the app lets parents monitor their babies through a video stream and includes a tracker that keeps tabs on the baby’s feeding, sleep, and diaper-changing schedule. However, Deroche pointed out that none of this information is used to identify the nature of the baby’s cries. The AI analyzes only the audio; the other features were added to support parents and offer convenience.

“It’s everything you basically need,” Deroche said. “We’re trying to address the problem of app clutter that new parents have, where they have one app for sleep, another for feeding, [etc.].”

She added that early feedback has been positive: “The people that use our app are loving it. We’ve been getting some really great messages about how we should do this or add this.”

Cappella officially launched last month on iOS devices, and the company aims to launch on Android soon. Looking further ahead, Deroche wants to use this AI as a foundation for even more helpful parenting tools. Next, she wants to take the app into the “clinical side of things” and find ways to detect early signs of emerging medical conditions, such as respiratory disease. She also wants to help parents recognize behavioral and developmental disorders, such as autism, since research has shown that earlier diagnosis leads to better outcomes.

“We can do a million more things in the future to help parents,” she concluded.