Using just open-source AIs, researchers got a commercial robot to find and move objects around a room it had never entered before. The bot isn’t perfect, but it suggests we might not be as far from sharing our homes with domestic robots as experts previously believed.

“Just completely impossible”: Demo videos of robots cleaning kitchens, making snacks, and doing other chores might have you hoping your days of loading the dishwasher are numbered, but AI experts predict we’re still a decade away from handing even a fraction of our chores over to bots.

“There is a very pervasive feeling in the [robotics] community that homes are difficult, robots are difficult, and combining homes and robots is just completely impossible,” Mahi Shafiullah, a PhD student at NYU Courant, told MIT Technology Review.

Open-source, off-the-shelf: A major holdup in the home robot revolution is that building a robot that can work in anyone’s home is a lot harder than training one to work in a controlled lab environment.

A new study — co-led by Shafiullah and involving researchers from NYU and AI at Meta — suggests we might be closer to domestic robots than we think, though.

Using only open-source software, they modified a commercially available robot so that it could, on demand, move objects around a room it had never entered before. They call the system “OK-Robot,” and detail the work in a paper shared on the preprint server arXiv.

“Simply tell the robot what to pick and where to drop it in natural language, and it will do it,” tweeted Lerrel Pinto, who co-led the study along with Shafiullah.

How it works: The bot at the core of the OK-Robot system is called Stretch (you can buy one for just $19,950, plus shipping and taxes). Stretch has a wheeled base, a vertical pole, and a robotic arm that can slide up and down the pole. At the end of the arm is a gripper that allows the bot to grasp objects.

To turn the robot into something humans can talk to, the team equipped it with vision-language models (VLMs) — AIs trained to understand both images and words — as well as pre-trained navigation and grasping models.

They then created a 3D video of a room using the iPhone app Record3D and shared it with the robot — that process took about six minutes. After that, they could give the robot a text command to move an object in the room to a new location, and it would locate the object and move it.
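To make that flow concrete, here is a toy sketch of the idea in Python, not the team's actual code. It assumes the room scan has already been turned into a small "memory" of locations, each tagged with a feature vector, and that a text command can be turned into a vector in the same space. Every name in it (`MemoryEntry`, `locate`, `plan_move`) and every number is hypothetical, and the three-element vectors are hand-made stand-ins for what a real vision-language model would produce.

```python
import math
from dataclasses import dataclass


@dataclass
class MemoryEntry:
    label: str        # human-readable tag, used here only for readability
    position: tuple   # (x, y, z) in meters, as if taken from the room scan
    embedding: tuple  # toy stand-in for a vision-language feature vector


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def locate(query_embedding, memory):
    """Return the memory entry whose embedding best matches the query."""
    return max(memory, key=lambda e: cosine(query_embedding, e.embedding))


def plan_move(pick_query, drop_query, memory):
    """Turn a pick query and a drop query into an ordered list of steps."""
    src = locate(pick_query, memory)
    dst = locate(drop_query, memory)
    return [
        ("navigate", src.position),
        ("grasp", src.label),
        ("navigate", dst.position),
        ("release", dst.label),
    ]


# Hypothetical memory built from a room scan (all values are made up).
ROOM = [
    MemoryEntry("soda can", (1.0, 0.5, 0.8), (0.9, 0.1, 0.0)),
    MemoryEntry("box", (3.0, 2.0, 0.0), (0.0, 0.9, 0.1)),
    MemoryEntry("nightstand", (0.2, 4.0, 0.5), (0.1, 0.0, 0.9)),
]

# Hypothetical text embeddings; a real system would compute these with a model.
TOY_TEXT_EMBEDDINGS = {
    "soda can": (1.0, 0.0, 0.0),
    "box": (0.0, 1.0, 0.0),
    "nightstand": (0.0, 0.0, 1.0),
}

# "Move the soda can to the box"
steps = plan_move(TOY_TEXT_EMBEDDINGS["soda can"], TOY_TEXT_EMBEDDINGS["box"], ROOM)
for step in steps:
    print(step)
```

In this sketch, the "understanding" is just a nearest-match lookup between the command and the scanned room. A real robot would then hand each step to its navigation and grasping models, which is where many of the failures in a cluttered room are likely to come from.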

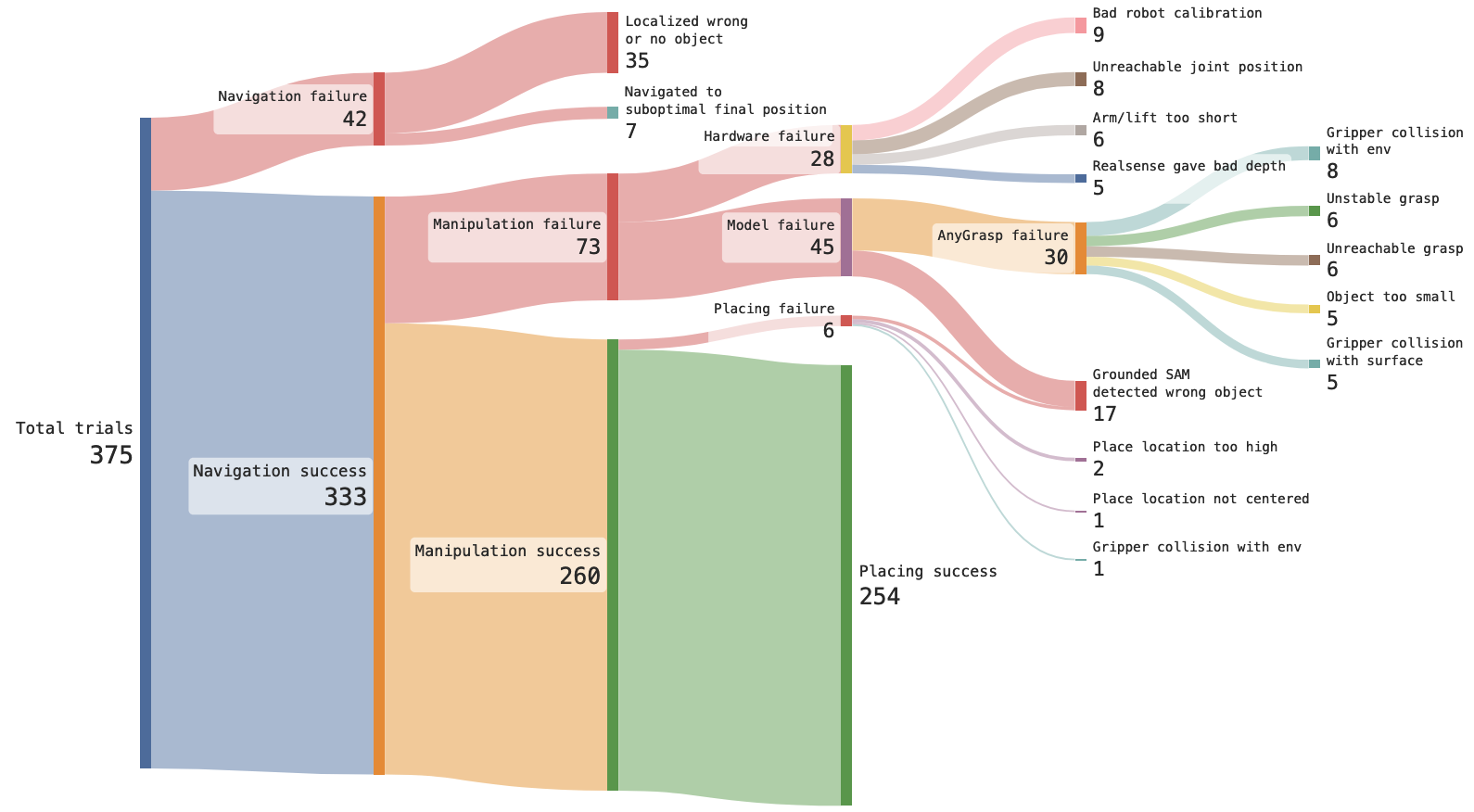

They tested OK-Robot in 10 rooms. In each room, they chose 10 to 20 objects that could fit in the robot’s gripper and told it to move them (one at a time) to another part of the room (“Move the soda can to the box,” “Move the Takis on the desk to the nightstand,” etc.).

Overall, the robot had a 58.5% success rate at completing the tasks. But in rooms that were less cluttered, its success rate was much higher: 82.4%.

Looking ahead: Even though OK-Robot can only do one thing (and doesn’t always do it right), the fact that it relies on off-the-shelf models and doesn’t require any special training to work in a new environment — just a video of the room — is pretty remarkable.

The next step for the team will be open sourcing their code so that others can build on what they’ve started and potentially help get domestic robots doing our chores sooner than predicted.

“I think once people start believing home robots are possible, a lot more work will start happening in this space,” said Shafiullah.
