In the future, every family will have a home robot to do all their cooking and cleaning. That’s what science fiction has been promising us for decades, anyway.

But today’s Roombas are a far cry from the Jetsons’ multi-talented robot maid Rosie. So what do we need to do to get from here to there?

Where’s My Home Robot?

The key will be developing both the right hardware and the right software: a home robot needs a dexterous physical body to wield a mop or flip pancakes, and also the smarts to know when the floor needs cleaning and how to make the batter.

This combination is known as “embodied AI” — AI in a body — and to help advance the field, the researchers at Facebook’s AI lab, FAIR, released a virtual training ground for algorithms in 2019.

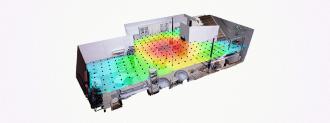

Called AI Habitat, the platform features realistic simulations of homes, offices, and other physical spaces for AIs to navigate.

In these virtual spaces, robot AIs can learn how to do things like open doors or explore unfamiliar apartments. This is much less risky than installing an untrained AI in a potentially very expensive robot and hoping it doesn’t immediately drive the bot right down a home’s stairs.

Once the AI completes its training in the virtual world, researchers can then install it in a robot body for testing in the real one.

Advances in Embodied AI

On August 21, FAIR announced a trio of new AI Habitat milestones: two new algorithms and one new tool.

The first algorithm can map a new environment while exploring it for the first time — as long as a home robot has been to your kitchen once, it will know how to get back to the room if instructed to start dinner.
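To make the idea of "map while exploring, then find your way back" a little more concrete, here is a toy sketch in Python. It is not FAIR's algorithm and does not use AI Habitat; the floor plan, the `explore` and `route` helpers, and the `K` kitchen marker are all made up for illustration. The robot sweeps a tiny grid home once, remembers which cells are open floor, and later plans a route to the kitchen using only that remembered map.

```python
from collections import deque

# Hypothetical floor plan: '#' is a wall, '.' is open floor, 'K' marks the kitchen.
FLOOR_PLAN = [
    "#########",
    "#..#...K#",
    "#..#.#..#",
    "#....#..#",
    "#########",
]

# The robot can step up, down, left, or right.
MOVES = [(-1, 0), (1, 0), (0, -1), (0, 1)]


def explore(start):
    """Sweep the home once, recording open cells and any landmarks seen."""
    free_cells, landmarks = {start}, {}
    frontier = deque([start])
    while frontier:
        row, col = frontier.popleft()
        tile = FLOOR_PLAN[row][col]
        if tile != ".":
            landmarks[tile] = (row, col)  # e.g. remember where the kitchen is
        for d_row, d_col in MOVES:
            nxt = (row + d_row, col + d_col)
            # The plan is fully walled in, so these indices stay in range.
            if FLOOR_PLAN[nxt[0]][nxt[1]] != "#" and nxt not in free_cells:
                free_cells.add(nxt)
                frontier.append(nxt)
    return free_cells, landmarks


def route(free_cells, start, goal):
    """Breadth-first search over the remembered map only, not the real home."""
    came_from = {start: None}
    frontier = deque([start])
    while frontier:
        cell = frontier.popleft()
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        for d_row, d_col in MOVES:
            nxt = (cell[0] + d_row, cell[1] + d_col)
            if nxt in free_cells and nxt not in came_from:
                came_from[nxt] = cell
                frontier.append(nxt)
    return None  # goal was never seen during exploration


free_cells, landmarks = explore(start=(1, 1))
path_to_kitchen = route(free_cells, (1, 1), landmarks["K"])
print(path_to_kitchen)
```

A real embodied AI has to build its map from camera images rather than reading a tidy grid, which is the hard part this sketch skips.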


The other algorithm is related to the first — it improves the ability of an AI to map a home without seeing every part of it.

An embodied AI with this ability could look in your dining room, for example, and tell that there’s extra space behind the table even without having a direct view of it. That kind of intuition would cut down on the amount of time it needed to fully map your home.

The third milestone is a new tool dubbed “SoundSpaces.” It gives researchers the ability to train an AI to respond to realistic audio within AI Habitat.

A researcher could trigger the sound of a smoke alarm in a virtual home’s kitchen, for example, and then train their AI to move toward the sound of the alarm. As the AI moves in the right direction, the sound gets louder, just like it would for a home robot.
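The "louder means closer" idea can be sketched in a few lines of Python. This is a toy under loud assumptions: it is not SoundSpaces or its API, there are no walls or echoes, and the `loudness` and `follow_sound` functions are hypothetical names invented here. The agent never sees the alarm's coordinates directly; it only samples how loud the alarm would be one step away in each direction and moves wherever the sound is loudest.

```python
# Up, down, left, right on a simple grid.
STEPS = [(-1, 0), (1, 0), (0, -1), (0, 1)]


def loudness(listener, source):
    """Toy loudness: falls off with squared distance (+1 avoids dividing by zero)."""
    d_sq = (listener[0] - source[0]) ** 2 + (listener[1] - source[1]) ** 2
    return 1.0 / (1.0 + d_sq)


def follow_sound(start, source, max_steps=50):
    """Greedily step toward whichever neighboring cell sounds loudest."""
    position = start
    trail = [position]
    for _ in range(max_steps):
        neighbors = [(position[0] + dr, position[1] + dc) for dr, dc in STEPS]
        best = max(neighbors, key=lambda cell: loudness(cell, source))
        if loudness(best, source) <= loudness(position, source):
            break  # nothing nearby is louder, so we are at the source
        position = best
        trail.append(position)
    return trail


alarm = (3, 4)  # hypothetical smoke alarm location
trail = follow_sound(start=(0, 0), source=alarm)
for cell in trail:
    print(cell, round(loudness(cell, alarm), 3))
```

In an empty room this greedy rule always reaches the alarm. In a real home, walls block and reflect sound, which is why training on realistic simulated audio matters.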

This training could make an embodied AI far more useful — you could tell your home robot to find your ringing telephone or shut off the stove when the timer beeps.

Still, just because an AI can do something in a simulation doesn’t mean a robot running that AI will be able to do it in the real world, too. Even tiny discrepancies between the simulated and real environments can cause issues in translation.

But researchers have to start somewhere, and training AIs to respond to sounds in a virtual world is the first step to having a home robot that can hear your beck and call.
