The robot revolution is already happening, and nearly every vision of the near future includes more bots in our homes, workplaces, and other spaces.

Someone has to design all these robots — and a new MIT project suggests that AI might be up to the task.

Automating Robot Design

Today’s robots all tend to have the same design based on their task, researcher Allan Zhao told MIT News — if a bot needs to be able to walk over various terrains, for example, a designer is likely to give it four legs like a dog.

That prompted Zhao and his colleagues to wonder if a creative AI might come up with a more innovative robot design that humans just haven’t thought of yet (because we’re too set on following patterns we’ve seen in nature).

To find out, they developed an AI system they call RoboGrammar.

To create a new robot design, RoboGrammar was told what parts were available (joints, wheels, etc.) and what type of terrain the robot needed to navigate (flat surfaces, steps, etc.).

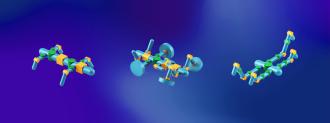

The AI then came up with hundreds of thousands of possible robot designs, all of which followed specific rules (called “grammar”) provided by the researchers — e.g., you must use joints to connect leg segments.
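To make the idea of a design "grammar" concrete, here is a minimal sketch in Python. It is not RoboGrammar's actual code or rule set: the symbols, the rules, and the helper names (`RULES`, `expand`, `generate_designs`, `joints_connect_segments`) are all hypothetical, chosen only to show how a handful of rewrite rules can produce many different designs while guaranteeing that every leg segment is attached through a joint.

```python
import random

# Hypothetical toy grammar (not the researchers' rules).
# Upper-case symbols get rewritten; lower-case symbols are physical parts.
RULES = {
    "ROBOT": [["body", "LIMBS"]],
    "LIMBS": [["LIMB", "LIMB"], ["LIMB", "LIMB", "LIMBS"]],
    "LIMB": [["joint", "segment", "END"]],
    "END": [["joint", "segment", "END"], ["wheel"], ["foot"]],
}


def expand(symbol, rng, depth=0, max_depth=6):
    """Recursively rewrite a symbol until only parts remain."""
    if symbol not in RULES:
        return [symbol]  # already a part, nothing to rewrite
    options = RULES[symbol]
    if depth >= max_depth:
        # Past the depth limit, drop self-referencing options so expansion ends.
        options = [opt for opt in options if symbol not in opt]
    parts = []
    for child in rng.choice(options):
        parts.extend(expand(child, rng, depth + 1, max_depth))
    return parts


def generate_designs(n, seed=0):
    """Produce n random designs, each a flat list of part names."""
    rng = random.Random(seed)
    return [expand("ROBOT", rng) for _ in range(n)]


def joints_connect_segments(design):
    """Check the example rule: every segment is directly preceded by a joint."""
    return all(
        i > 0 and design[i - 1] == "joint"
        for i, part in enumerate(design)
        if part == "segment"
    )


if __name__ == "__main__":
    for design in generate_designs(3):
        print(" ".join(design), "| valid:", joints_connect_segments(design))
```

Because the only way to produce a `segment` in this toy grammar is through a rule that places a `joint` right before it, every generated design satisfies the constraint by construction. No design has to be checked and thrown away afterward, which is the practical appeal of encoding rules as a grammar.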

“It was pretty inspiring for us to see the variety of designs,” Zhao said. “It definitely shows the expressiveness of the grammar.”

The robots were placed in a simulated environment, where the goal was always the same: move forward as fast as possible.

Once the researchers had tested the bots on various terrains, it appeared that human robot designers, and biological evolution, had been on the right track.

“Most designs did end up being four-legged in the end,” Zhao said. “Maybe there really is something to it.”

However, the AI broke with that pattern when designing robots for flat, icy terrain. One of its creations had six legs and another had just two, which it used to pull along a dragging body, maximizing traction.

The bodies of the four-legged robots, meanwhile, tended to change depending on the terrain. A bot optimized to cross over gaps, for example, had a stiff body, while one designed to move over ridges had two joints between its front and back legs.

The Future of RoboGrammar

The MIT researchers don’t expect RoboGrammar to swipe robot design jobs from humans. Rather, they see the system as a tool that can help spark roboticists’ creativity, prompting them to consider design features that might not be immediately obvious.

Next, the researchers plan to actually build some of the designs RoboGrammar came up with to test their function in the real world, but they think the system might be useful for virtual robot design, too.

“Let’s say in a video game you wanted to generate lots of kinds of robots, without an artist having to create each one,” Zhao said. “RoboGrammar would work for that almost immediately.”
