Today, we type on keyboards and swipe on screens to communicate with our devices.

Tomorrow, we might control machines with our minds, not our hands.

The systems that could make that future possible are called brain-machine interfaces, and they’re already helping people overcome health problems, such as paralysis.

Most of today's cyborgs, though, had to undergo invasive surgery to have electrodes implanted in their brains. If we want to reach the point where anyone can tap into their tech with a thought, we'll need a less-invasive way to record brain activity.

Caltech researchers believe they may have found it.

Recording Brain Activity

A brain-machine interface works by turning brain activity into commands for a machine — a person thinks about moving a cursor on a computer screen, and the interface instructs the cursor to move.

Implanted electrodes can detect this by reading the electrical activity of neurons. It's an accurate method, but the electrodes themselves can damage brain tissue.

There are non-invasive ways to measure this same electrical activity, such as electrode-covered caps, but those are less accurate. Functional MRI, meanwhile, is more precise but expensive and bulky: you'd never be able to use it to control your smartphone with your mind.

Enter: functional ultrasound.

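Ultrasound systems produce images by emitting pulses of high-frequency sound and measuring their echoes. Sound partially reflects wherever it passes between substances with different acoustic properties, such as bone and soft tissue, and the time each echo takes to return reveals how deep the reflecting boundary sits.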
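As a rough illustration of the echo-timing idea, the Python sketch below converts an echo's round-trip time into the depth of the boundary that produced it. It assumes a constant speed of sound of 1,540 m/s, the average conventionally used for soft tissue, and ignores the fact that sound speed varies between tissues. The function name is hypothetical, not something from the study.

```python
# Assumed average speed of sound in soft tissue (a common convention, not a study value).
SPEED_OF_SOUND_M_S = 1540.0


def echo_depth_mm(round_trip_s: float, speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Hypothetical helper: depth of a reflecting boundary from an echo's round-trip time.

    The pulse travels down to the boundary and back up, so the one-way
    distance is half of speed * time.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    depth_m = speed_m_s * round_trip_s / 2.0
    return depth_m * 1000.0  # convert metres to millimetres


if __name__ == "__main__":
    # An echo returning 13 microseconds after the pulse left comes from roughly 10 mm deep.
    print(f"{echo_depth_mm(13e-6):.2f} mm")
```

Halving the round trip is the key step: the timer runs while the sound travels both down and back.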

Functional ultrasound tech does the same thing, but it emits a flat plane of sound instead of a narrow beam, which allows it to cover a larger area more quickly.
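Why does a single flat wave speed things up? A back-of-the-envelope sketch: each pulse must return from the deepest point being imaged before the next one goes out, which caps how many pulses can be fired per second. A scanner that sweeps a narrow beam needs roughly one pulse per scan line, while a plane wave can cover the whole field with one pulse. The 2 cm depth, 128 scan lines, and 10 tilted plane waves per image below are illustrative assumptions, not parameters from the Caltech study; functional ultrasound systems typically combine several tilted plane waves per image, which lowers the rate somewhat.

```python
SPEED_OF_SOUND_M_S = 1540.0  # assumed soft-tissue average


def max_pulse_rate_hz(depth_m: float, speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Highest pulse rate that lets each echo return from depth_m before the next pulse."""
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    return speed_m_s / (2.0 * depth_m)


def frame_rate_hz(depth_m: float, pulses_per_image: int) -> float:
    """Images per second when each image needs pulses_per_image transmissions."""
    if pulses_per_image < 1:
        raise ValueError("need at least one pulse per image")
    return max_pulse_rate_hz(depth_m) / pulses_per_image


if __name__ == "__main__":
    depth = 0.02  # 2 cm imaging depth (illustrative)
    focused = frame_rate_hz(depth, 128)    # narrow beam: one pulse per scan line (128 lines assumed)
    plane = frame_rate_hz(depth, 1)        # a single plane wave covers the whole field
    compounded = frame_rate_hz(depth, 10)  # 10 tilted plane waves per image (assumed)
    print(f"focused: {focused:.0f}/s, plane wave: {plane:.0f}/s, compounded: {compounded:.0f}/s")
```

Under these assumptions the plane-wave approach produces images about as many times faster as there are scan lines it no longer has to sweep.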

It can also map the flow of blood: as blood cells move toward or away from the ultrasound source, the pitch of the reflected sound shifts up or down, a phenomenon known as the Doppler effect.
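The size of that pitch change follows the standard pulse-echo Doppler relation: shift = 2 × f0 × v × cos(θ) / c, where f0 is the transmitted frequency, v is the blood's speed, θ is the angle between the flow and the sound beam, and c is the speed of sound. The sketch below evaluates it and inverts it to recover speed. The 15 MHz frequency and 1 cm/s flow in the example are illustrative assumptions, and the code shows only the underlying physics, not how the Caltech team processed their signals.

```python
import math

SPEED_OF_SOUND_M_S = 1540.0  # assumed soft-tissue average


def doppler_shift_hz(f0_hz: float, velocity_m_s: float, angle_deg: float = 0.0,
                     c_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Frequency shift of an echo from blood moving at velocity_m_s.

    Positive velocity (toward the probe) raises the pitch; negative lowers it.
    The factor of 2 arises because the sound is shifted on the way to the
    moving cells and again on the way back.
    """
    return 2.0 * f0_hz * velocity_m_s * math.cos(math.radians(angle_deg)) / c_m_s


def velocity_from_shift(f0_hz: float, shift_hz: float, angle_deg: float = 0.0,
                        c_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Invert the Doppler relation to estimate blood speed along the flow direction."""
    cos_theta = math.cos(math.radians(angle_deg))
    if abs(cos_theta) < 1e-9:
        raise ValueError("flow perpendicular to the beam produces no measurable shift")
    return shift_hz * c_m_s / (2.0 * f0_hz * cos_theta)


if __name__ == "__main__":
    shift = doppler_shift_hz(15e6, 0.01)  # 15 MHz pulse, blood moving 1 cm/s toward the probe
    print(f"shift: {shift:.1f} Hz")       # roughly 195 Hz
    print(f"recovered speed: {velocity_from_shift(15e6, shift):.3f} m/s")
```

Note how small the shift is compared with the 15 MHz carrier: slow flow in tiny brain vessels changes the pitch by only a few hundred hertz, which is why sensitive processing is needed to detect it.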

Blood flow can serve as a proxy for brain activity: when a part of the brain becomes more active, more blood flows to it. That's the principle behind fMRI, so the Caltech researchers wondered whether functional ultrasound could work for brain-machine interfaces too.

Ultrasound-Powered Brain-Machine Interfaces

To find out, they implanted domino-sized ultrasound devices into the skulls of two monkeys. That procedure was still invasive, but it doesn't affect brain tissue the way electrode implants do.

The devices were connected to a computer, where an AI could analyze the ultrasound readings.

Then the researchers had the monkeys complete two tasks.

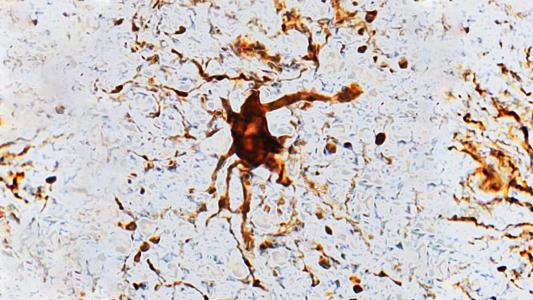

The vasculature of a monkey’s brain recorded by the ultrasound tech. Credit: Sumner Norman

First, the primates had to focus their eyes on a dot in the center of a screen. A second dot would then flash to the left or the right of that dot. When the center dot disappeared, the monkeys would look in the direction of the second dot.

In the second task, they did the same thing, but instead of moving their eyes, they moved a joystick to the left or right.

By analyzing the blood flow in the monkeys’ brains, the AI was able to predict the direction of their eye movement with 78% accuracy and their hand movement with 89% accuracy — and it only needed a few seconds to make these predictions.

The researchers now plan to test their functional ultrasound technique in human volunteers who’ve already had parts of their skulls removed following previous injuries.

If it can accurately record their brain activity and predict their movements as well, functional ultrasound could play a pivotal role in the future of mind-controlled tech — providing more accuracy than completely non-invasive devices, but without subjecting people to potentially brain-damaging implantation surgeries.

“This study will put [ultrasound] on the map as a brain-machine interface technique,” Krishna Shenoy, a Stanford University neuroscientist who was not involved in the study, told Science magazine. “Adding this to the toolkit is spectacular.”
