Fake faces created by artificial intelligence (AI) are considered more trustworthy than images of real people, a study has found.

The results highlight the need for safeguards against deep fakes, which have already been used for revenge porn, fraud and propaganda, say the researchers behind the study.

Deep fake fears

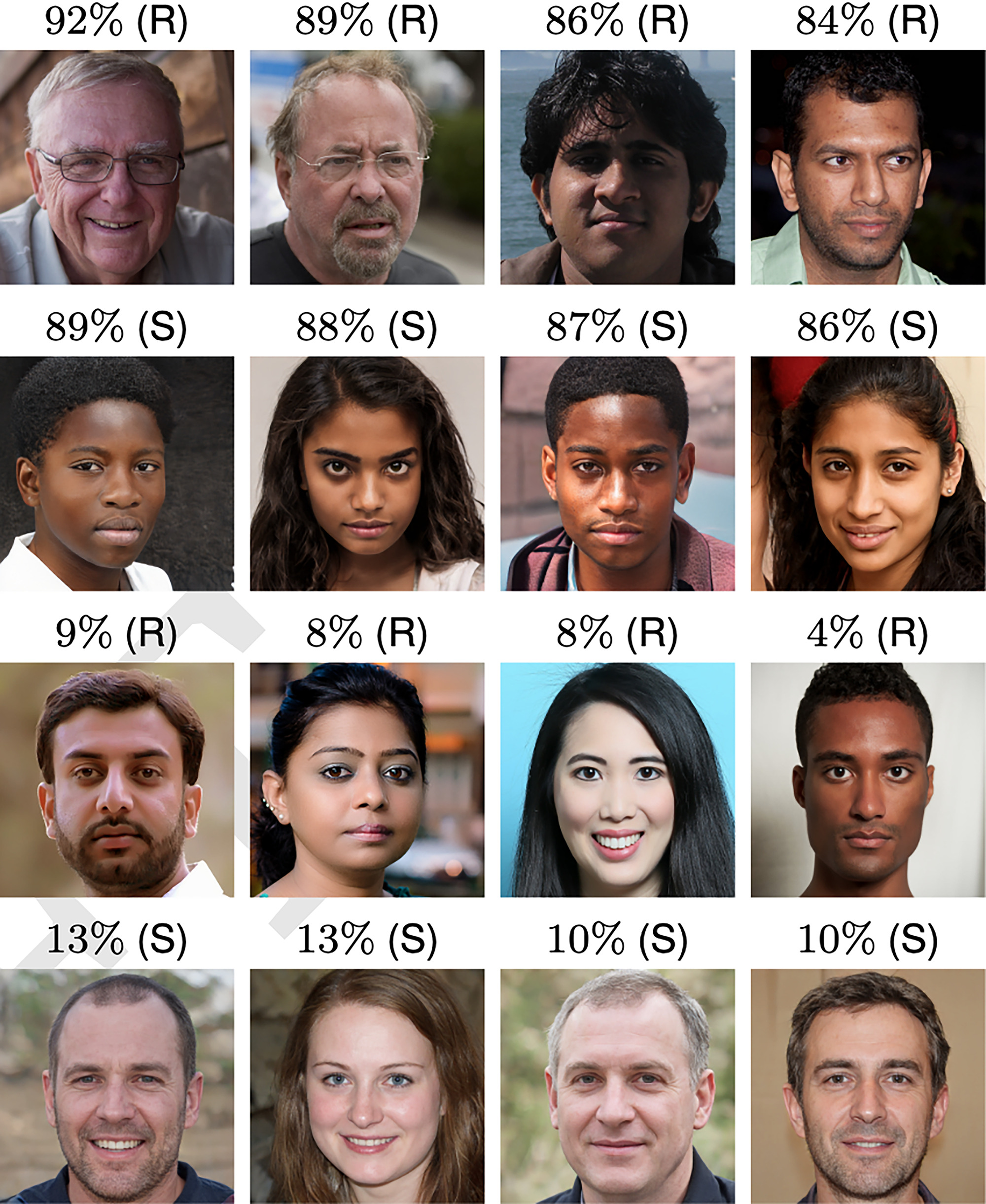

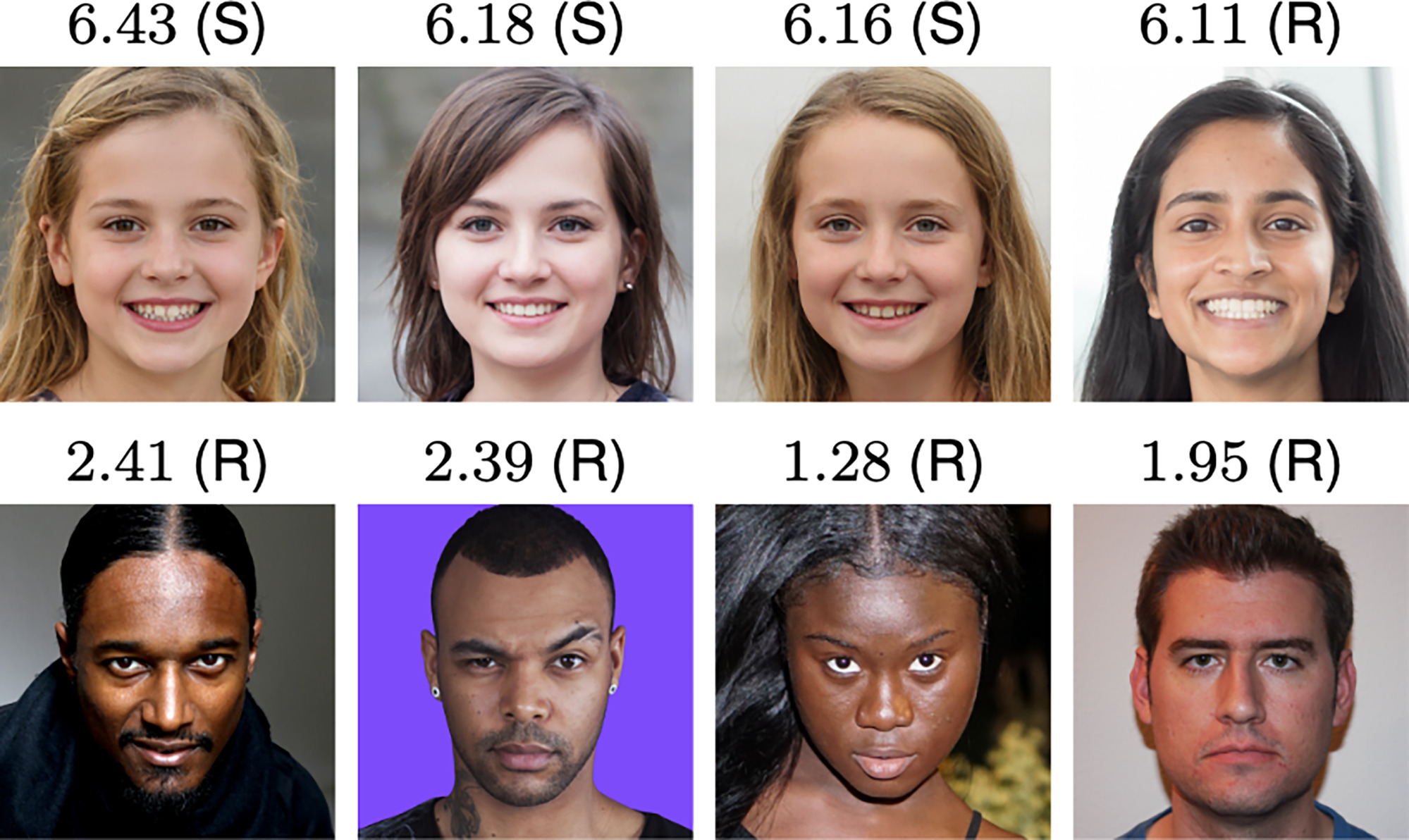

The study – by Dr Sophie Nightingale from Lancaster University in the UK and Professor Hany Farid from the University of California, Berkeley, in the US – asked participants to identify a selection of 800 faces as real or fake, and to rate their trustworthiness.

After three separate experiments, the researchers found that the AI-created synthetic faces were, on average, rated 7.7% more trustworthy than real faces, a difference they describe as “statistically significant”. According to New Scientist magazine, the three faces rated most trustworthy were fake, while the four rated most untrustworthy were real.

AI learns the faces we like

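The fake faces were created using generative adversarial networks (GANs), AI programmes in which two neural networks compete: one generates images while the other tries to tell them apart from real photographs. Through this process of trial and error, the generator learns to produce increasingly realistic faces.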

The study, “AI-synthesized faces are indistinguishable from real faces and more trustworthy”, is published in the journal Proceedings of the National Academy of Sciences of the United States of America (PNAS).

It calls for safeguards to protect the public from deep fakes, which could include incorporating “robust watermarks” into synthesized images.

Rules on creating and distributing synthesized images should also incorporate “ethical guidelines for researchers, publishers, and media distributors,” the researchers say.

(Image: PNAS)

Ethical AI tools

Using AI responsibly is the “immediate challenge” facing the field of AI governance, the World Economic Forum says.

In its report, “The AI Governance Journey: Development and Opportunities”, the Forum says AI has been vital in advancing areas such as innovation, environmental sustainability and the fight against COVID-19. But the technology is also “challenging us with new and complex ethical issues” and “racing ahead of our ability to govern it”.

The report looks at a range of practices, tools and systems for building and using AI.

These include labelling and certification schemes; external auditing of algorithms to reduce risk; regulation of AI applications; and greater collaboration between industry, government, academia and civil society to develop AI governance frameworks.

Republished with permission of the World Economic Forum under a Creative Commons license. Read the original article.