Japanese researchers have developed a robot child capable of expressing six emotions with its face — putting us one step closer to a future in which human-like companion robots help meet our need for social interaction.

Why it matters: As humans evolved, we did much better in groups than we did on our own. As a result, we’re one of the most social species on the planet, and the need for regular face time with other people is wired into our biology.

“That need for social interaction is in the brain, and when you’re lonely, that’s the warning light on the dashboard coming on saying ‘get us some human company,’” James Goodwin, the Brain Health Network’s Director of Science and Research Impact, said in 2021.

If we ignore that warning light, our bodies and minds start to break down the same way our cars would — studies have found that social isolation increases a person’s risk of depression, dementia, and even premature death.

Companion robots could potentially prevent social isolation in seniors.

The challenge: While some people have robust social networks, others don’t, and seniors are particularly susceptible to social isolation — they often live alone, have experienced the loss of loved ones, and have health issues that make leaving the house difficult.

As the population ages, the number of people at risk of social isolation is likely to grow, too.

Today, about 10% of the world’s population is over the age of 65, according to the U.N., and that share is expected to grow to 16%, or roughly one in six people, by 2050. Some countries already have much older populations: in Japan, the elderly share is nearly 30%.


Companion robots could potentially meet the social needs of seniors and other people experiencing loneliness. Unlike robots built to carry out tasks, these bots are designed to carry on conversations, interacting with people the same way a human might.

AI developers can use transcripts from real conversations to train these robots to understand what a person is saying and respond coherently, but words are just one element of human communication — body language and facial expressions are another.

Photos and videos of people can teach robots to recognize the visual cues involved in communication, but getting a physical robot to look like a human while shooting the breeze is a lot harder than getting it to write human-like text.

When designers try to make robots look human but don’t quite nail it, the result is often disturbing — an effect called the “uncanny valley.”

A humanoid friend: Some developers have chosen to avoid the issue entirely by developing companion robots that look like robots, but others argue that it’s important for people to interact with bots that appear as humanlike as possible.

The Guardian Robot Project is one of the initiatives trying to make those humanlike robots a reality.

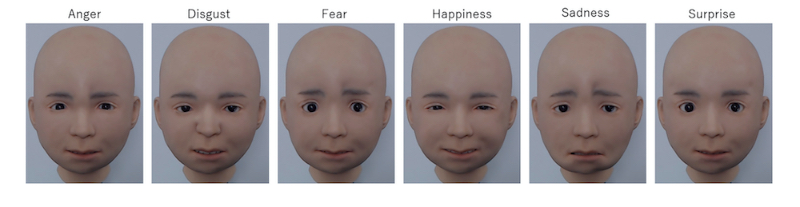

When the right actuators are triggered, Nikola looks like it’s expressing one of six basic emotions.

Japanese scientific research institute RIKEN launched the project in 2019 with the goal of developing robots capable of interacting socially with people. Researchers from the project have now unveiled “Nikola,” a humanoid robot designed to look like a young boy.

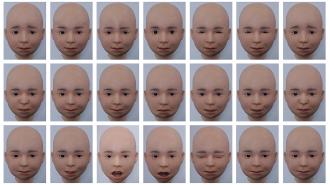

Nikola (currently, just a disembodied head) contains a system of 29 pneumatic actuators that control the movement of “muscles” under its silicone skin. Another six actuators control the movement of its eyeballs and head.

Nikola’s developers positioned these actuators using input from the Facial Action Coding System (FACS), which connects specific facial movements to human emotions — “happiness,” for example, is a combination of the “cheek raiser” and “lip corner puller” movements.

By triggering the right combination of actuators, the researchers can make Nikola look like it’s expressing one of six basic emotions: happiness, sadness, fear, anger, surprise, and disgust.
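To make the idea concrete, here is a minimal sketch of how an emotion could be translated into actuator triggers by way of FACS. It is not RIKEN's implementation. The only emotion recipe taken from the article is happiness ("cheek raiser" plus "lip corner puller"); the action unit numbers 6 and 12 are the standard FACS codes for those two movements, and the actuator names and the `actuators_for` helper are hypothetical, invented purely for illustration.

```python
# Standard FACS action unit (AU) numbers for the two movements
# the article names.
ACTION_UNITS = {
    6: "cheek raiser",
    12: "lip corner puller",
}

# Emotion -> AUs. Only happiness is spelled out in the article,
# so the other five emotions are deliberately left out here.
EMOTION_TO_AUS = {
    "happiness": [6, 12],
}

# HYPOTHETICAL wiring: which actuators produce each AU.
# These names are made up; Nikola's real actuator layout is not
# described at this level of detail.
AU_TO_ACTUATORS = {
    6: ["cheek_left", "cheek_right"],
    12: ["lip_corner_left", "lip_corner_right"],
}


def actuators_for(emotion: str) -> list[str]:
    """Return the actuators to trigger for an emotion, in AU order."""
    if emotion not in EMOTION_TO_AUS:
        raise KeyError(f"no action units defined for {emotion!r}")
    names: list[str] = []
    for au in EMOTION_TO_AUS[emotion]:
        names.extend(AU_TO_ACTUATORS[au])
    return names


print(actuators_for("happiness"))
```

Keeping the emotion-to-AU table separate from the AU-to-actuator table mirrors the two-step logic described above: FACS says which facial movements make up an expression, and the hardware layout decides which actuators produce each movement.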

The test: Nikola isn’t the first robot to express human emotions. Hanson Robotics says its Sophia humanoid can express more than 60.

But after building Nikola, the RIKEN team had a FACS expert verify that the bot moves its face the same way a human would while expressing each of the six emotions. They also had people look at photos of Nikola to confirm that the average person could recognize each emotion.

“This is the first time that the quality of android-expressed emotion has been tested and verified for these six emotions,” according to RIKEN.

Looking ahead: While Nikola’s emotions were recognizable, the participants’ accuracy at spotting them varied.

A major issue was the fact that the robot’s skin doesn’t wrinkle very well, which makes accurately expressing something like “disgust” difficult — improving the skin material’s wrinkling would make Nikola more lifelike still.

Varying the speed with which Nikola makes different expressions could help, too.

During their study, the researchers had people look at videos of Nikola making each expression at different speeds and rate how “natural” each speed looked. They found that certain emotions were more lifelike at slower speeds (e.g., sadness) and others at faster speeds (e.g., surprise).

The RIKEN team plans to use this information to continue developing Nikola with the goal of eventually building lifelike companion robots that could help meet the social and physical needs of people living alone — after getting robot bodies, of course.
